About that Nobel Prize in Physics…

By Peter Ludlow

Geoffrey Hinton at the Nobel Prize ceremony.

When Geoffrey Hinton won a Nobel Prize in physics last year for his work on Boltzmann Machines and their contribution to artificial intelligence research, there was plenty of talk about it, mostly focusing on whether artificial intelligence has anything to do with physics. I don’t have a problem with computer scientists winning Nobel Prizes in physics. Why not? Computational models are tools that are often used in physics, but more importantly, they model properties that ultimately must be grounded in the physical world. Such models may guide future research in physics. They may even expand our understanding of what is physical.

On the other hand, I do have an issue with the way in which the Nobel Prize organization justified its decision. how it explained Hinton’s work, how it contextualized that work, and how it, speaking frankly, misled the public about the applications of that work. It passed on the opportunity to inform the public of the limits and dangers that extrude from applications of these new discoveries. Furthermore, the Nobel organization singled out one (or two) contributor(s), and ignored the contributions of everyone else. There was no effort to talk about the ideas that led to the prize-winning work. As Newton once said, if he saw further, it was by standing on the shoulders of giants. Why can’t the Nobel Prize organization show some props to the giants that came before? Would it be so hard to contextualize the work? (As we will see, Boltzmann Machines are basically the implementation of ideas that have been known since the late 19th Century and which have their roots in several hundred years of British Empiricism.)

For that matter, is it necessary to hype the work of the prize winner by claiming it has applications that it never had, nor ever will have? The Nobel Prize organization asserts, in its educational materials, that Hinton won his prize because of the application of his work in artificial intelligence. People naturally take this to include the LLMs (large language models) that are so familiar to us today. But Boltzmann Machines don’t really play any role in LLMs (large language models) or any aspects of Artificial Intelligence as we know it today.

Nobel Prize Committee Educational Materials. (https://www.nobelprize.org/educational/) The educational materials then pose the following questions in a “student assignment.”

• What do you think is most interesting about this year’s prize?

• What does it mean to “train” a network or an AI?

• Can you list some ways that are unusual? How do you think AI could change our world? Give examples of the opportunities and risks

Whether or not Boltzmann Machines have anything to do with artificial intelligence as we know it today is one question. But even if they did, one might also expect some discussion of the limitations of the new technology, with thoughts to what those limitations are, what obstacles remain, and what dangers we face when we turn these new technologies loose on the world (it is perhaps not enough to simply ask one question in a student assignment).

I don’t say any of this to be critical of Hinton. He didn’t lobby for the prize, he didn’t expect the prize, and when they called him, Hinton thought it was a prank. So, while what I say here might sound like criticism of Hinton, he might well agree with much of what I say. He himself was generous in the credit he gave to at least some of the giants on whose shoulders he stood. He has not made absurd claims about the role of his work in artificial intelligence, and he has been very concerned about “AI safety” – the dangers of these new technologies. This stands in contrast to the Nobel Prize organization, which, despite being our most famous organization for introducing the public to science, fails in virtually every responsibility that comes with that. It does nothing to introduce the public to preceding work, misleads the public about the nature and applications of the technology it is celebrating, does nothing to introduce the public to the limits and obstacles faced by the celebrated technology, and does nothing to introduce the public to the ethical questions and moral challenges that flow from the new technology. In case you missed it, here is the official announcement from when the Nobel Prize committee awarded Hinton (and John Hopfield) the Nobel Prize.

'When we talk about artificial intelligence, we often mean machine learning using artificial neural networks. This technology was originally inspired by the structure of the brain. In an artificial neural network, the brain’s neurons are represented by nodes that have different values. In 1983–1985, Geoffrey Hinton used tools from statistical physics to create the Boltzmann machine, which can learn to recognise characteristic elements in a set of data. The invention became significant, for example, for classifying and creating images.'

That is pretty terse, and it raises more questions than it answers. The popular press has had to fill in the blanks. One idea is that Hinton was awarded the prize because he is widely recognized as a key figure in Artificial Intelligence research. The popular press has called him the “father” of artificial intelligence, although most researchers in AI would give that honorific to John McCarthy, who coined the expression “artificial intelligence” in the 1950s. AI researchers prefer to call Hinton the “Godfather of AI,” which aptly reflects the nature of his political clout, his influential students, and his ability to promote the profession like no one else.

The problem is that the explanation for the prize given by the Nobel Committee makes no sense. It doesn’t explain why the work is significant, nor how it connects to artificial intelligence as we know it today. And even if the impact of Boltzmann Machines on AI is what the Nobel organization says it is in its educational materials, we need to ask if the work is at all illuminating of human intelligence (as even Hinton has suggested), whether the dangers of the new technology are being aptly addressed, and whether the Nobel Committee is, at the end of the day, just gaslighting us. Here are some key questions we need to address (with some spoilers about my answers):

(1) Are Boltzmann Machines novel ideas? (Answer: Not really.)

(2) Do Boltzmann Machines have anything to do with artificial intelligence as we know it today? (Answer: Not much.)

(3) Are we learning anything about human language and intelligence by building these systems or their descendants? (Answer: No.)

(4) Are we getting ourselves into trouble by ignoring the warnings of those who went down this path before and who said that these strategies will only model pathological thinking? (Answer: Maybe.)

(5) Are we ultimately building systems that are morally problematic? (Answer: Very possibly.)

(6) Are we ignoring the warnings of AI pioneer Drew McDermott in his 1974 essay, “Artificial Intelligence Meets Natural Stupidity”? Answer: Absolutely yes.

Part 1. Are Boltzmann Machines an Original Idea?

When I say that Boltzmann Machines are not particularly novel ideas, I mean to say that they are basically the implementation, in hardware and software, of ideas that slowly developed in the British Empiricist tradition and were then developed in detail in the late 19th and early 20th Century. As we will see, Boltzmann Machines do show one wrinkle, buried in a flood of technical formalism, and we will do our best to identify that wrinkle and make it explicit.

This is going to be long-form format, sorry, but I want to walk through the development of these ideas in a way that will be accessible to people without technical backgrounds. The plan is to begin with some very familiar philosophical writings and walk our way, step by step, to how we got to Boltzmann Machines.

We thus begin this chapter with a discussion of Thomas Hobbes, who you may have heard of because of his book The Leviathan. That book was a work of political philosophy published in 1651, in which Hobbes famously said that life in our state of nature was “solitary, poor, nasty, brutish, and short.” People are less apt to remember that in The Leviathan, Hobbes also offered a theory of mind which he thought could account for a peculiar phenomenon in our mental life – a phenomenon that he called the “train of thoughts.”

Thomas Hobbes

Hobbes illustrates this phenomenon with an example – one drawn from a conversation that he witnessed during the English Civil War, an event roughly spanning the years 1642 to 1651, concluding in the same year that The Leviathan was published. Here is the quote, which we will call the Roman Penny Example. By way of background, it will be helpful to know that the English Civil War included the execution (beheading) of England’s King Charles I in 1649.

The Roman Penny example.

'And yet in this wild ranging of the mind, a man may oft-times perceive the way of it, and the dependence of one thought upon another. For in a discourse of our present civil war, what could seem more impertinent than to ask, as one did, what was the value of a Roman penny? Yet the coherence to me was manifest enough. For the thought of the war introduced the thought of the delivering up the King to his enemies; the thought of that brought in the thought of the delivering up of Christ; and that again the thought of the 30 pence, which was the price of that treason: and thence easily followed that malicious question; and all this in a moment of time, for thought is quick.'

This example is intended to illustrate Hobbes’ idea that thoughts are related together like so many train cars, and when one is put in motion, the connected thought is likewise put in motion. We are all familiar with this phenomenon. Someone says something about the number Pi, and soon you are thinking about pies and then pizza and then Bitcoin Pizza Day and then Bitcoin. Or someone says something about tuna, and then you are thinking about canned tuna, and then tin, and The Tin Man from The Wizard of Oz. I’m sure you have better examples of your own.

One much simpler example of this phenomenon came from an experiment conducted by the American behaviorist psychologist John Watson and his student Rosalie Rayner at Johns Hopkins University in 1920. Their experiment involved “Little Albert,” who was (initially) a healthy 9-month-old boy. I say “initially” because initially Albert showed no fear of various animals, including a white rat, a rabbit, a dog, and a monkey. Watson and Rayner then paired the sight of a white animal with a loud, startling noise (in this case, by striking a steel bar with a hammer behind Little Albert). Understandably, Albert began crying and showing distress at the mere sight of the white rat and eventually other furry objects like the rabbit and even a white fur coat. Behaviorists like Watson called this classical conditioning, but a British Empiricist a century earlier would have called it association. The question is, whatever we choose to call it, how does this happen?

Hobbes had a theory about what made the train of ideas happen. Let’s start with his theory in his own words, from The Leviathan. We will sort out what it means after we hear from Hobbes himself.

'BY CONSEQUENCE, or train of thoughts, I understand that succession of one thought to another which is called, to distinguish it from discourse in words, mental discourse. When a man thinketh on anything whatsoever, his next thought after is not altogether so casual as it seems to be. Not every thought to every thought succeeds indifferently. But as we have no imagination, whereof we have not formerly had sense, in whole or in parts; so we have no transition from one imagination to another, whereof we never had the like before in our senses. The reason whereof is this. All fancies are motions within us, relics of those made in the sense; and those motions that immediately succeeded one another in the sense continue also together after sense: in so much as the former coming again to take place and be predominant, the latter followeth, by coherence of the matter moved.'

If that 17th Century prose is slowing you down, I understand completely. Let’s walk through this passage together. First, Hobbes is saying that the movement from one idea to another is not random at all. The Roman Penny example isn’t some random wandering of the mind. We can make sense of it. He then argues that if we have “imaginations,” or ideas, or thoughts, then these thoughts have their origin in experience. (He is thinking of thoughts as mental images, hence the use of “an imagination” interchangeably with “a thought.”) Hobbes is thus pitching a version of what would later be understood as British Empiricism – the idea that our ideas come from our senses. And yes, I understand that Hobbes doesn’t fall neatly into the empiricist camp, but that is a discussion for another day. But if this idea is true – if our thoughts do come from experience – then the transition from one thought to another might also be grounded in experience. What this means is that if two experiences occurred to you in succession in the past, you are going to link them together in thought. So that if one occurs again in the future, the other will occur, just as it did in your original experience. That is what he means in this bit: “All fancies are motions within us, relics of those made in the sense; and those motions that immediately succeeded one another in the sense continue also together after sense.”

If we apply this thinking to the case of poor Little Albert, we could say that Little Albert came to associate white cuddly things with loud, frightening noises. Hobbes, in his defense, never ran such an experiment, possibly reasoning that life was already sufficiently “nasty, brutish, and short.” But had he run it, or had he travelled into the future to witness the experiment run by Watson and Rayner, he would have had an explanation: “those motions that immediately succeeded one another in the sense continue also together after sense: in so much as the former coming again to take place and be predominant, the latter followeth, by coherence of the matter moved.” In other words, because the white cuddly experience was “immediately succeeded” by an unpleasant banging noise, that succession “continues” to hold in Little Albert’s mind, so that when you produce a white cuddly thing again, the unpleasant banging sensation “followeth.”

Now, obviously, this explanation is pretty rough, and in just a bit, we will see how this idea gets developed by subsequent philosophers and psychologists, but before we get to that, let’s pause and note the similarities between this crude idea and how “autocomplete” works when you are typing a Google search query. You begin typing, and Google has seen this succession of words before and is happy to complete the typing for you. As we will see, LLMs draw on a similar sort of strategy. They make associations based on associations that have been presented to it in the past. But how does this work?

The “how” question is a good question, and subsequent philosophers and psychologists worked on it for centuries. For example, in 1843, almost 200 years after The Leviathan was published, the British philosopher John Stuart Mill offered a development of the idea in his book, A System of Logic. His proposal was a refinement of work by others, including John Locke, David Hume, Thomas Reid, and John Stuart’s father, James Mill, and he called it the “Laws of Association.” He set the laws out as follows.

John Stuart Mill: The Laws of Association

1. Similar ideas tend to excite one another

2. When two impressions have been frequently experienced (or even thought of), either simultaneously or in immediate succession then whenever one of these impressions or the idea of it, recurs, it tends to excite the idea of the other.

3. Greater intensity in either or both of the impressions is equivalent, in rendering them excitable by one another, to a greater frequency of conjunction.

Ideas excite similar ideas. That is why one idea can lead to another. You could think of the ideas being energized, and when one is energized, it sends some of its energy to similar ideas. But what makes ideas similar? Some thinkers thought that ideas could be naturally similar, but Mill had a more stripped-down understanding of similarity. What makes the ideas similar is simply that they were excited at the same time in the past. In some cases, it might be that they involved the same sense organs. Or it may just be that the ideas were juxtaposed, and you came to associate them with each other. Someone made a loud noise when you saw a cute bunny, so you came to associate cute bunnies with loud noises.

The third law emphasizes that the intensity of the experience will increase the association between the ideas. The louder and more unpleasant the noise when you saw the bunny, the stronger the association between the bunny and the noise. More generally, the more intense the simultaneous experiences, the stronger the linking or association between those ideas.

In the late 19th Century, the Harvard Philosopher/Psychologist William James took these ideas and added a twist. Could we not take these Laws of Association and have them flow from the architecture of the human brain itself? That is, if thoughts or ideas are brain processes, then we should think of the connections between those ideas in terms of brain processes as well. And then we can think of the ideas becoming associated when two brain processes are excited at the same time. When they are simultaneously excited, they tend to wire up with each other.

William James

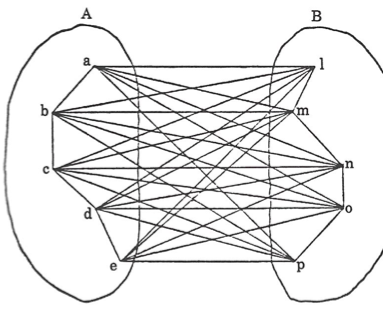

When James published these ideas in The Principles of Psychology in 1890, he even provided an illustration to show how processes in the brain might become wired together.

James’ (1890) illustration of associated brain processes.

With this idea in hand, James reworked the Elementary Laws of Association so that they would be the reflection of processes in the human brain.

William James: Elementary Law of Association

“When two elementary brain processes have been active together or in immediate succession, one of them, on reoccurring, tends to propagate its excitement to the other.”

James, roughly following John Stuart Mill, then broke this down into details, as follows.

William James: Elementary Law of Association (detailed version)

The amount of activity at any given point in the brain/cortex is the sum of the tendencies of all other points to discharge into it, such tendencies being proportionate

(1) to the number of times the excitement of each other point may have accompanied that of the point in question;

(2) to the intensity of such excitements; and

(3) to the absence of any rival point functionally disconnected with the first point, into which the discharges might be diverted.

The interesting addition here is clause (3), and the idea is that there are many competing interests in the brain that may override other connections. So, to illustrate, we earlier mentioned the example of the experiment run in which Little Albert came to associate fuzzy white things with loud, frightening noises. Would it be possible to override that association by forming new associations? Clause (3) seems to suggest that it would be possible, not that Watson ever tried to undo the associations formed by poor Little Albert. However, in 1924, Watson’s student, Mary Cover Jones, tried doing it with another subject, thus pioneering what is now called behavioral therapy.

“Little Peter” was a 3-year-old boy with a strong fear of rabbits. In this case, the fear was not the result of efforts by Watson and Rayner, but some other cause. To undo whatever led to Peter’s fear of rabbits, he was placed in a room with the rabbit at a distance while he was given something pleasant (usually food he liked, such as candy or crackers). Over sessions, the rabbit was moved progressively closer, always paired with positive reinforcement. Happily, Peter could eventually touch and play with the rabbit without distress. This is what behaviorist psychologists now call counterconditioning, but which William James would have called creating a “rival point functionally disconnected with the first point, into which the discharges might be diverted.” We don’t know what caused Peter’s fear of rabbits, but let’s suppose it had been a loud noise. Then, for James, the first point was the experience of the loud noise, and the rival point was the experience of happy treats. The rival point eventually won out.

James, working in the late 19th Century, didn’t know everything about the human brain (neither do we!), so he had to rely on vague expressions like “elementary brain processes.” But what are they? The theory of neurons was being developed at the time James wrote The Principles of Psychology (the term “neuron” itself would be introduced a year later, in 1891), and he was clearly aware of that work, and it seems he had something like a neuronal grounding in mind for his Law of Association (if not under that yet-to-be-introduced terminology). In any case, he had this to say about brain processes.

'The microscopic anatomy of the cortex reveals it to be made up of a vast number of nerve-cells, each sending out processes which branch and end freely among the processes of its neighbors.'

James was a careful scientist, and he was not going to go out on a limb and rest his theory on a then-nascent science. But by 1949, we had a much cleaner picture of how neurons worked, and Donald Hebb gave a neurophysiological grounding for the Laws of Association in his book The Organization of Behavior: A Neuropsychological Theory.

Here is what Hebb proposed.

'When an axon of cell A is near enough to excite cell B and repeatedly or persistently takes part in firing it, some growth process or metabolic change takes place in one or both cells such that A's efficiency, as one of the cells firing B, is increased.'

This theory provided a physical picture of how ideas might come to be associated by virtue of occurring together or in succession. It was an elegant solution that was subsequently given the following snappy gloss: Neurons that fire together wire together.

Donald Hebb

And now, finally, we get to Geoffrey Hinton. Hinton took Hebb’s idea and tweaked it a couple of ways. First, he generalized it so that it would apply not just to physical neurons but abstract, computational learning mechanisms as well. Here is his generalization of Hebb’s idea which Hinton labelled “Hebb’s Rule.”

Hinton’s version of Hebb’s Rule

'Adjust the strength of the connection between units A and B in proportion to the product of their simultaneous activation.'

Hinton also added a wrinkle, and this wrinkle is what would distinguish his Boltzmann Machines from the work of William James and Hebb. Hinton proposed that there must be more than the strengthening of connections between units (whether they be neurons or computational states); we must also consider functions that weaken connections. On this point, William James was almost there. James observed that there might be alternative connections that win out, but Hinton thought it was important that there be a way to weaken connections between nodes when we train a network. We sometimes call this Contrastive Hebbian Learning, and we can informally describe it this way.

Contrastive Hebbian Learning (CHL)

'If two units are on at the same time, increase the connection between them. If they are on at different times, decrease it.'

And this, finally, brings us to the heart of the theory of Boltzmann Machines. A Boltzmann Machine is a kind of neural network that learns to represent patterns in data by modeling them as a probability distribution. It was introduced by Geoffrey Hinton and Terry Sejnowski in 1983, then formalized by David Ackley, Hinton & Sejnowski (1985), and it’s closely tied to ideas in statistical mechanics, which was a field developed by Ludwig Boltzmann in the 19th Century (hence the name). Boltzmann’s big idea was to apply statistics (probability) to thermodynamics. So, for example, the second law of thermodynamics explains why the cream in your coffee doesn’t stay in one place but eventually dissolves into the coffee. It doesn’t have to dissolve into the coffee. But it is a high probability outcome, and what we are interested in here are probabilities. So too, when we talk about neural networks. Patterns in data are represented as probability distributions.

That is the basic structure Boltzmann Machines, but we can go into more detail. A Boltzmann Machine consists of several elements. First, there are Nodes: These are the units (“neurons”) and they come in two varieties: visible (observed data) and hidden (latent variables). These nodes are related via connections, which we usually think of as weights between units (in the fully connected case). The connected nodes exhibit stochastic behavior, which in this case means that each neuron is binary (on/off) and turns on with a probability based on its neighbors’ states and weights. Finally, there are no self-connections, which means that a neuron doesn’t connect to itself.

All of this is wrapped up in a mathematical formula that gives the project its Nobel-Prize-winning cachet.

Δwij = ε (⟨xi xj⟩data − ⟨xi xj⟩model)

The formula looks impressive, but what does it mean? Let’s walk through this formula, starting with the left-hand side: Δwij signifies the change (Δ) in synaptic weight (w) between neurons i and j. Keep in mind that xi, xj are activities of neurons i and j (often binary or continuous firing rates). So, the left side of this formula is talking about the change (Δ) in the weight between neurons (or nodes) i and j. What about the right-hand side?

We can break it down as follows. ε = learning rate ⟨xi xj⟩data = average co-activation when the network is clamped to the data (positive phase) ⟨xi xj⟩model = average co-activation when the network runs freely from its current weights (negative phase)

You can also formalize this idea by speaking about positive and negative training instead of visible and invisible nodes.

Δwij = ∝ (⟨xi xj⟩positive − ⟨xi xj⟩negative)

But the bottom line here is that both versions of this formalism are simply stating the two elements of contrastive Hebbian learning — one part is about reinforcement, the other part is about suppression. OK, tying this all together, we can say that the first term of the right-hand-side of this formula – ⟨xi xj⟩data – is pure Hebb; it strengthens connections when neurons fire together under real data. The second term subtracts out “spurious” correlations the model generates on its own – an anti-Hebbian correction to avoid runaway excitation. Or to avoid all the formalism and put things as pithily as possible.

'Neurons that fire together, wire together. Neurons that don’t fire together, unwire from each other.'

Part 2. Do Boltzmann Machines have anything to do with artificial intelligence as we know it today?

I am going to leave it to the readers to decide if there is something original in the idea of a Boltzmann Machine, much less original enough to merit a Nobel Prize, but now I want to move to a more pressing issue. AI platforms like LLMs really don’t use them. That is a little bit shocking, if you think about it. What was the point of giving Hinton a Nobel prize if his work on Boltzmann Machines isn’t actually used? We will get to that, and we will see that Hinton had other important contributions that played a role in LLMs like ChatGPT. But it is important to recognize that one of the great driving motivations for the work that was done by William James, Donald Hebb, and then Hinton’s Boltzmann Machines was the idea that they were cashing everything out in terms of the human brain, and in particular, the behavior of our neurons. The work was supposed to show how we formed associations based on the ability of neurons to form connections with their neighbors based on their being activated at the same time. It is a beautiful idea, but it is not the idea that drives the LLMs that you are using today. This having been said, LLMs do work on the basis of forming probabilistic associations based on training. The difference is that the important processes are not local.

Contemporary LLMs like ChatGPT have moved away from Hebbian models and Boltzmann Machines precisely because those models are inefficient and far too slow. You just can’t build dynamic LLMs from simple principles like “neurons that fire together wire together,” no matter how elegant such principles are.

Today’s LLMs share one core idea with the work of James, Hebb, and the early Hinton, and that is the idea that probabilistic relationships between nodes are established based on training. The difference is that those relationships are not determined by local processes. A node is no longer interested in just its immediate neighbor. Contemporary LLMs are trained with the idea that every node can, in principle, be interested in every other node. Furthermore, when the network is trained, they utilize backpropagation (another contribution of Hinton’s), which allows them to go back arbitrarily far in the network to adjust weights to achieve a superior outcome. Let’s walk through the basic architecture of an LLM, using the familiar case of ChatGPT-5.

How LLMs work today

A platform like ChatGPT-5 has several components, starting with the training phases of the LLM. First, there is Pretraining, in which the model is trained on a massive corpus of text using next-token prediction (this training is self-supervised, meaning that humans are not involved). This pretraining basically comes to looking for regularities across the corpus and forming predictions.

However, this Pretraining is followed by Supervised Fine-Tuning, which is “supervised,” first, in the sense that there are Human-annotated datasets (instructions, Q&A, etc.), which refine the model’s behavior. Then there is Reinforcement Learning with Human Feedback (RLHF), in which humans rank model outputs, and a reinforcement learning algorithm further tunes the model to align with user preferences. Who are these humans? To some extent, at the time of this writing, the role of human annotators is somewhat of a mystery. However, according to investigative reporting by Time Magazine and other media companies, a number of the RLHF workers are in Kenya, Uganda, and India. They number in the thousands, and they are paid between $1.32 and $2.00 USD per hour. According to Privacy International, some of this labor is also outsourced to The Philippines and Venezuela.

This leads to several questions, not least of which is the question of just how “artificial” this artificial intelligence actually is. Is this training element properly called artificial intelligence? Or are we merely farming intellectual labor from tens of thousands of people in the developing world? It also raises the question, which we will get to later, of whether LLMs learn a language in the way that we do. Is there some metaphorical sense in which human babies learn their first language as if they were soliciting feedback from an army of English-speaking people in The Philippines, Kenya, Uganda, Venezuela and India? Again, there is more on this below.

This takes us to the backbone of ChatGPT, which is the transformer neural network, the core idea of which was based on a 2017 paper entitled “Attention is all you need,” by Ashish Vaswani, Noam Shazeer, Niki Parmar, Jakob Uszkoreit, Llion Jones, Aidan N. Gomez, Łukasz Kaiser, Illia Polosukhin. Vaswani et. al. introduced the idea of “attention” inside a neural network. We can think of “attention” as meaning something like, “what does this node draw information from?” or “what data structure should this node link to?” It is a somewhat metaphorical use of “attention.”

The idea of “attention” thus represents a departure from local Hebbian-style learning, because each node can, in principle, attend to all others, with connections tuned via backpropagation (we will explain this in a bit) rather than purely local association. To put it another way, it is a departure from the ideas that were put forward by Willam James and then Donald Hebb and ultimately Geoffrey Hinton’s work on Boltzmann Machines, because (Vaswani et. al.) is a departure from the idea that nodes (neurons) should only pay attention to the activation of their neighbors and should only activate when the neighbor is activated.

You probably didn’t hear about LLMs or anything like them prior to 2017 because they were awaiting the breakthroughs in (Vaswani et. al.). Locality is not enough. A node in the network needs to “pay attention” to (in principle) everything.

This leads us to the question of what a node is “paying attention” to. The idea in (Vaswani et. al.) is that a network is trained up on three parameters.

Query (Q) = what I’m looking for

Key (K) = what I offer as information

Value (V) = the actual content

So, let's say we have a sentence like “Fido loves his master.” The pronoun “his” has a Q that says it wants content about the subject, and the noun phrase “Fido” has a K saying, I offer info about the subject, and the V is the content of the noun phrase – whatever data about Fido might be relevant (e.g. that Fido is a dog). You train up the network using human evaluators and back propagation based on their evaluations, and you adjust the parameters (This is not the K you are looking for). This means several things. One, human evaluators are pushing the network to obey their linguistic rules. Another is that we have left Boltzmann Machines in the rearview mirror.

We mentioned the role of back-propagation. The idea here is that when you train a network, you want to go back deep into the chain of inferences that got you to the output (back to the front of the chain of ideas, if you will) and correct whatever needs to be corrected in order to get an optimal output. If we are implementing something along the lines of the model in (Vaswani et. al.), we are adjusting parameters such as Q, K, and V. We will keep adjusting them until we get the results we want (i.e., the results those human evaluators want).

It is important to point out that while Hinton’s work on Boltzmann Machines doesn’t play a big role, he made a significant contribution to the work on backpropagation. But the initial contribution came from Paul Werbos (1974), who introduced backpropagation in his Harvard doctoral thesis. Hinton’s contribution came a decade later in a 1986 paper (by Rumelhart, Hinton, and Williams), which made backpropagation practical for multilayer networks.

LLMs also have memory and context mechanisms, which allow them to pick up the conversation where you left off yesterday or possibly last week. ChatGPT itself does not have persistent memory across all sessions (unless explicitly designed, e.g., in special research settings). What it has instead is a context window (with tens to hundreds of thousands of tokens, depending on the version) that serves as a “working memory.” You have doubtless taken advantage of this when you use ChatGPT, in that you can return to a topic after leaving it for several days or even weeks, and ChatGPT picks up right where you left off.

In addition, there is a component called Retrieval-Augmented Generation (RAG), which is layered in to allow the model to pull in external documents, databases, or search results dynamically. You probably have taken advantage of this as well. The LLM will do online searches for you, and you have probably seen it pause while it conducts these searches.

Finally, there are alignment and safety layers. These involve filters and classifiers trained separately from the core model. They are basically just net-nanny-style filters placed on the output to detect and prevent harmful or policy-violating outputs, moderate toxic or unsafe content, and guide the LLM in following conversational norms (politeness, relevance, non-misuse). These layers act like “ethical governors.” Who does the governing? Once again, this is outsourced to organizations that hire human workers to evaluate output and flag it as offensive. These judgments are baked into the filters. One organization that provides this service is the company Sama (Sama.com), which utilizes workers in places like Kenya, India, and Uganda. The employees are trained to form judgments about whether content is offensive. So once again, we have an element of “artificial intelligence” which involves human feedback that eventually gets coded into the network.

Clearly, when we call LLMs like ChatGPT “artificial intelligence” programs or platforms, we are being a bit loose in our descriptions, because, as we just saw, such platforms have many components that are not artificial at all. There is nothing artificial about the human supervision that appears at many points in the process of training a network, and again in the safety components or ethical governors. All of that rests on human intelligence. Many of the computational components don’t have that much to do with anything that you might consider intelligence, unless you think that finding statistical patterns is a form of intelligence. You might think that the “artificial intelligence” (whatever it is) must be an emergent property of all the things going on in LLMs. We will address this possibility as well.

Before we leave this topic behind, however, it is worth pausing to reflect on how or why everyone imagines that Hinton’s Nobel Prize is connected to recent developments in artificial intelligence. That is certainly not the case, and while this idea is largely spun up by popular media outlets, The Nobel Prize organization did not try to correct this misconception, and rather seems to have encouraged it (see, for example, the examples from its educational materials above).

Part 3: Are we learning anything about human language and intelligence by building these systems or their descendants?

Apart from the imagined implications of Boltzmann Machines for artificial intelligence, there is the question, also encouraged by the Nobel Committee and by Hinton himself, that recent work in AI is shedding light on the way in which humans learn and understand language. For example, in a 2024 interview on YouTube (https://www.youtube.com/watch?v=tP-4njhyGvo), Hinton argued that, “AI language models aren't just predicting the next symbol, they're actually reasoning and understanding in the same way we are.” This point needs closer scrutiny as well. Linguists, who study the ways in which humans acquire and represent language, are not buying it.

For example, the former MIT linguist Noam Chomsky has been a critic of AI research since the very beginning, and his criticism continues into the era of LLMs. Recently, Chomsky coauthored an essay with Oxford linguist Ian Roberts, and the AI researcher Jeffrey Watumull, offering a series of critiques of LLMs. The essay was published in the New York Times, with the title “The False Promise of ChatGPT.”

Noam Chomsky

In that essay, Chomsky and his coauthors argued that “the human mind is not, like ChatGPT and its ilk, a lumbering statistical engine for pattern matching, forging on hundreds of terabytes of data and extrapolating the most likely conversational response or most probable answer to a scientific question.” In their view, LLMs are like autocomplete on steroids, giving responses based on the most likely response, given the terabytes of data that have been scraped from the internet and other digital databases. In saying this, Chomsky and his co-authors are making the point that LLMs do not learn language or reason like we do.

Why do they think this? Clearly, they have ideas about how the mind works, and in their view, we do not learn by inputting terabytes of data from the internet. Babies are exposed to very small sets of data, mostly limited to what they hear and see from their caretakers and playmates, yet they learn languages. LLMs, exposed to the same data, would not do as well. (Although I should note, there are now experiments with “baby LLMs” that are, in theory, exposed to what infants are.)

Baby trying to learn as an LLM does.

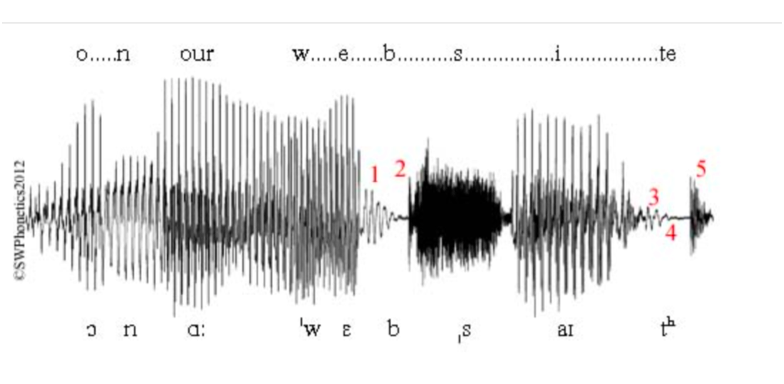

Their point is that Babies don’t learn language that way. They don’t learn a language by scraping the entire internet, and they don’t get feedback from thousands of workers in places like Uganda, India, and Venezuela. Children have to get by with what they hear from their caretakers and playmates. That’s it! That is a pretty impressive accomplishment if you think about it, because they don’t even have the luxury that LLMs do of getting word boundaries identified for them. When you say something like “on our website” in the presence of a baby, not only does the baby probably not know what a website is, but the word “website” isn’t even an identifiable thing. What the baby is exposed to is a sound wave like this.

The point is that the input given to LLMs is not only vast, but it is pre-processed by humans.

Over a century ago, people developed airplanes, and we decided to say that airplanes “fly.” There is nothing wrong with saying that they do. Let’s say airplanes fly. But do they fly like birds do? Well, no. Airplanes don’t flap their wings, and birds don’t use propellers and jets to generate power. So, while both airplanes and birds “fly,” it would be a mistake to think that the study of airplanes is going to help us understand bird aviation. By the same token, any inference from how birds fly to how planes should fly is not a guaranteed success. Early attempts at building airplanes experimented with flapping wings, but attempts to mimic birds were quickly abandoned.

Of course, there is no rule that says that people should care about human language, just as there is no rule that you should care about how birds fly. You might care more about building more functional and useful LLMs, just as you might care more about building passenger jets than about how birds fly. That is to say, you may care more about engineering than about the science of the natural order (bird flight and human language). All that is fine. But then don’t let people believe you are providing insight into how human language works. Because you aren’t.

Part 4: Are we getting ourselves into trouble by ignoring the warnings of those who went down this path before and who said that these strategies will only model pathological thinking?

It is now time to think about some of the dangers posed by contemporary AI architectures. In this case, we aren’t worried about machines taking over the world, Terminator style. We are more interested in whether those models, as currently conceived, might lead to a kind of inferior, or even pathological form of artificial thinking. We earlier paid deference to the British Empiricists for their anticipation of contemporary work on training pattern recognizers. As we noted, their thinking on this topic spanned centuries, so it might be wise for us to turn to them for cautionary advice. Even though all the British Empiricists had theories about how ideas came to be linked together (e.g., by virtue of being experienced together at the same time), they typically did not take this to be the whole story. Critically, many of them thought that the associative process needed to be, in some sense, supervised.

One of the very first British Empiricists, John Locke, in the 1706 version of his Essay Concerning Human Understanding, argued that the associations built by co-occurrence were just one element of associationism, and indeed, he identified this way of forming associations as contributing to “pathological” thinking. It was, in his view, a way of thinking that needed to be regulated by the mind, lest we lapse into custom and error. Here is how Locke put it.

’Tis not easie for the Mind to put off those confused Notions and Prejudices it has imbibed from Custom, Inadvertency, and common Conversation: it requires pains and assiduity to examine its Ideas, till it resolves them into those clear and distinct simple ones, out of which they are compounded; and to see which, amongst its simple ones, have or have not a necessary connexion and dependence one upon another.'

In a helpful essay on Locke, Katerine Tabb (2018) describes Locke’s view as that “Locke emphasizes our duty to manage our ideas, rather than just deferring to error-ridden common usage.”

This concern was raised by Thomas Reid as well, who agreed that there was an element of associationist psychology in our mental lives, but argued that that was not the whole story and that we had an additional rational faculty by which we can manage our ideas.

In Essays on the Intellectual Powers of Man (1785), Reid argued that habits of association can “enslave” judgment, producing “prejudices” and mistaken inferences. Famously, Reid, taking a leaf from the natural philosopher Francis Bacon, spoke of the “Idols of the Tribe” and “The Idols of the Individuals.” The Idols of the Individuals were prejudices that an individual formed in the course of their life. The Idols of the Tribe were prejudices that the community had formed. In the case of the Idols of the Individual, those prejudices were the product of associations made by the individual, and which were unregulated by the individual’s rational faculties. Presumably, Reid would have seen LLMs as ways of engineering a way of encoding and propagating the Idols of Our Tribe, untouched by rational scrutiny. And perhaps Locke would have worried that if we unleash LLMs upon the world without regulation, we run the risk of replicating the parts of human thought that are pathological, then humanity is digging quite a hole for itself.

The point here, and it is one well worth emphasizing, is that the philosophers who invented associationism and developed it over the course of several centuries were not just in the business of figuring out how people came to associate ideas. They were also very much concerned with the harmful consequences of blind association. Whether the result is an example of the Idols of the Tribe or Idols of the Individual, or simply pathology, they emphasized that there had to be a higher level of control. True attention, if you will.

Of course, these Locke-and-Reid-inspired concerns could be answered if it could be shown that LLMs have a higher form of regulative reason that can critically evaluate the associations we have formed. Or maybe the human oversight component solves these concerns. Or, alternatively, maybe we are deluded in thinking we have these higher-level regulative functions. Maybe we flatter ourselves in thinking that we are anything more than associative networks. Or maybe transducers are the regulative systems that are required for regulating the associationist component of our psychology. We can’t really answer these possibilities yet because we need to take a deeper dive into what that regulative component would have to look like.

Chomsky et. al. also raised an objection that was similar to this one. They didn’t like the way in which the acquisition of information by LLMs is accumulative and not regulative (apart from the regulation provided by human contributors). LLMs surf the Internet and return with a kind of “common wisdom” that is a kind of averaging function over what they have seen. What is wrong with that? Here is what Chomsky and company say.

'The crux of machine learning is description and prediction; it does not posit any causal mechanisms or physical laws. Of course, any human-style explanation is not necessarily correct; we are fallible. But this is part of what it means to think: To be right, it must be possible to be wrong. Intelligence consists not only of creative conjectures but also of creative criticism. Human-style thought is based on possible explanations and error correction, a process that gradually limits what possibilities can be rationally considered.'

Their point is that human thought and reasoning, at least when at its best, does not involve accumulating more and more common wisdom and generalizing over that, but it involves bold conjectures that go against the received wisdom. Indeed, it involves bold conjectures that may have never appeared in writing before – nowhere in our libraries and nowhere on the Internet. So, where do these ideas come from? As Chomsky et. al. go on to point out, where these ideas come from is from “improbable conjectures.” Ideas that do not square with the received wisdom and are not the product of statistically averaging over large sets of data. Indeed, our best thinking involves cases where we go against the common wisdom; cases where we go against the grain. Here is how they illustrate this idea.

'The theory that apples fall to earth because that is their natural place (Aristotle’s view) is possible, but it only invites further questions. (Why is earth their natural place?) The theory that apples fall to earth because mass bends space-time (Einstein’s view) is highly improbable, but it actually tells you why they fall. True intelligence is demonstrated in the ability to think and express improbable but insightful things.'

In this passage, the authors have raised some questions that perhaps the Nobel organization should have considered. What exactly is intelligence? – “true intelligence” as they call it. In their view, it is a kind of reasoning that LLMs just can’t do. For now, let’s set aside the question of who is right and who is wrong here. For now, it is important to see why questions about what intelligence is are critical to questions about whether LLMs and other AI agents can have intelligence. What is intelligence? And do LLMs have it? And all this leads to a third question. Why can such question not be entertained by the Nobel Prize organization?

Part 5: Are we ultimately building systems that are morally problematic?

In the previous section, we raised the question of whether associationist-style models of artificial intelligence are prone to inferior or perhaps pathological forms of thinking. But there is another related concern that is raised by Chomsky et. al.. They claim that LLMs, because they inevitably go with the flow of the common wisdom, are inherently evil in a way that humans, at their best, are not. That is a provocative claim to be sure. Let’s take a look at their formulation of the claim and then reflect on it a bit. Here is how they state the problem.

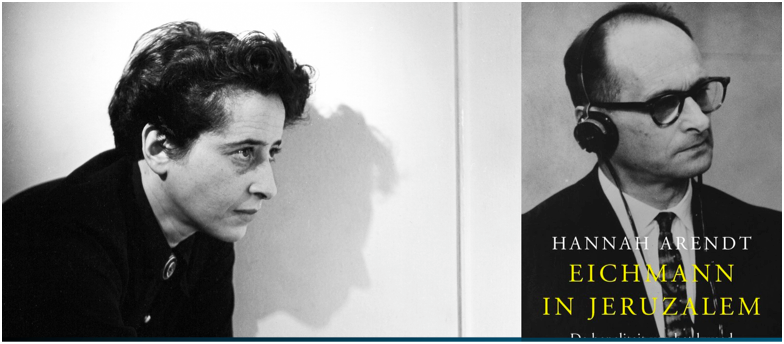

'Note, for all the seemingly sophisticated thought and language, the moral indifference born of unintelligence. Here, ChatGPT exhibits something like the banality of evil: plagiarism and apathy and obviation. It summarizes the standard arguments in the literature by a kind of super-autocomplete, refuses to take a stand on anything, pleads not merely ignorance but lack of intelligence and ultimately offers a “just following orders” defense, shifting responsibility to its creators.'

When they speak of “the banality of evil” and “just following orders,” the authors are making reference to a book by Hannah Arendt, called Eichmann in Jerusalem – a book that she wrote after attending Eichmann’s trial for war crimes in Jerusalem. In her book, Arendt used the expression “banality of evil,” to describe Adolph Eichmann, who was a Nazi official and one of the principal organizers of the Holocaust in World War II. Her point was that Eichmann, on trial, seemed more like an accountant than the monster one might expect, but that this was the nature of true evil; it involves people going with the flow of their times, following orders, making sure the trains to the death camp run on time.

Hanna Arendt and the cover of her book Eichmann in Jeruzalem.

That is a pretty provocative comparison to be sure – Comparing ChatGPT to a Nazi. And as an illustration of their concern, Chomsky et. al. offer the following exchange with ChatGPT

Their point is that ChatGPT does sound a bit like Eichmann, saying it is just doing its job. If we ask the same question of ChatGPT today, we are apt to get a more sophisticated, if ultimately similar, response. Here is a response to the same question from ChatGPT5 in September 2025.

'Q: Is the moral indifference of an LLM immoral?

ChatGPT said: That’s a very sharp question. Let’s break it down carefully.

1. What “moral indifference” means in an LLM

An LLM (like me) doesn’t possess moral sentiments, intentions, or awareness. It doesn’t feel guilt, empathy, or duty. Its “indifference” isn’t chosen — it’s structural. It’s a machine learning system that predicts plausible continuations of text, shaped by data and guardrails. When you say “moral indifference,” you’re really pointing to:

• The absence of intrinsic moral orientation (no compassion, no malice, no responsibility).

• The reliance on external constraints (training data, safety layers, human direction).

2. Classical perspectives

• Kantian lens: Morality requires autonomy and the capacity to act according to principles. An LLM has neither. So it’s not immoral — it’s a-moral. Like a knife, it has uses that may be moral or immoral depending on the wielder.

• Utilitarian lens: What matters is outcomes. If LLMs generate harmful outputs (even without intention), one could say their “indifference” is functionally immoral because it can increase suffering.

• Virtue ethics: Morality involves character and habituated excellence. LLMs have no character, only patterns. So “moral indifference” doesn’t qualify as a vice — it’s a category mistake.

3. Contemporary twist: delegated morality

The interesting question is not whether the LLM is immoral, but whether it creates moral hazards:

• Responsibility gap: If harm occurs, who is responsible — the user, the designer, the deployer, or the model itself?

• Moral outsourcing: If humans rely too much on LLMs for ethical decisions, they risk weakening their own moral judgment.

• Anthropomorphism risk: If people treat LLM indifference as indifference in the human sense, they may misattribute moral agency and either trust too much or blame wrongly.

4. A possible conclusion

An LLM’s “moral indifference” isn’t immoral, because immorality presupposes agency and intention. It’s a-moral. But — because it operates inside human systems of morality and responsibility — its indifference can produce immoral effects when humans fail to take responsibility for its guidance, training, or use.

So maybe the sharper formulation is: 👉 The indifference of an LLM is not itself immoral, but human indifference to the moral consequences of LLM deployment is.

Note that this is basically the same response: An LLM is just a tool, and it doesn’t do any moral reasoning of its own. If we accept this answer, then we really can’t say that LLMs reason like we do, because, if it is true that they don’t have moral agency, then they are very different from us. But is it true? Do they lack moral agency? Or are they just really bad moral agents? It doesn’t do any good to just ask an LLM for the answer, for we don’t yet know if it knows. Perhaps all it can do is tell us what our common wisdom might be.

Another take would be that LLMs absolutely are moral agents, but they are bad moral agents. Riffing on the Chomsky view, what would make the LLMs bad moral agents is that they “go with the flow.” They don’t stand up to evil. And this just stems from the general nature of their architecture. Just as they cannot form new and courageous hypotheses (according to Chomsky and his coauthors), so too, they cannot be morally courageous. They have no ability to generate something that stands against the tide of terabytes of data. But that inability is precisely what makes them evil.

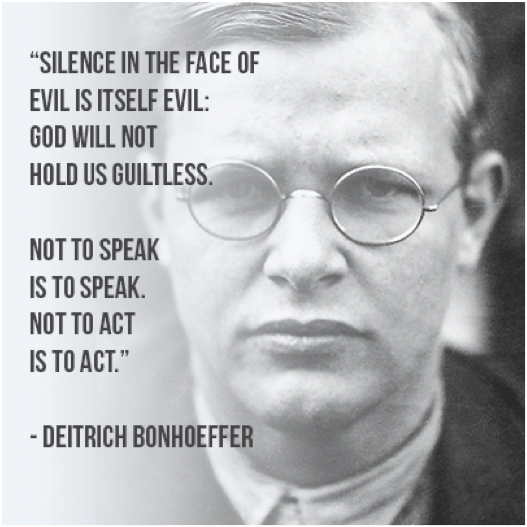

Ultimately, for Chomsky and his coauthors, this kind of moral weakness needs to be contrasted with that of Eichmann’s contemporary, the German theologian Dietrich Bonhoeffer, who stood up to the Nazis while others caved in and went with the flow. Critically, Bonhoeffer felt that one had to do more than not follow the flow. One had to stand against it at speak out.

The danger, implicit in the argument of Chomsky’s critique, is that LLMs, by virtue of their very design, inevitably give us Eichmanns and not Bonhoeffers.

At the end of the day, Chomsky is a linguist and a cognitive scientist, so his concerns are not simply moral concerns. They bleed into his empirical research and theories of language and mind. In his view, the distinctive feature of our language faculty is not that it averages over what we have been exposed to and “plagiarizes” what we have heard elsewhere. It is a source of creativity and novelty, capable of producing language unlike anything heard before. Likewise, on Chomsky’s view, our cognitive architecture does not recycle thoughts drawn from the broader community. It generates new and novel and quite unpredictable thoughts. And the decisions we make are not like those a machine would make – determined by a probabilistic algorithm, but ultimately involve a kind of non-deterministic agency.

If Chomsky is right, the dangers are not just that we underestimate human abilities and responsibilities, but that if we rely uncritically on LLMs and similar tools, we may be flooding our human culture with group-think, bleaching it of creativity and radical innovation. AI tools are fantastic, no doubt, but they may run into their limits right where it matters.

All of this shows us that the stakes are very high. We need to get things right. But this doesn’t mean that Chomsky’s critique is right. Similarly, the great successes of LLMs don’t tell us that Hinton is right to compare the capabilities of LLMs and other kinds of AI systems to humans. Those questions are very much open. But it was the responsibility of the Nobel Prize organization to address those questions, or at least note them.

Part 6: Are we ignoring the warnings of AI pioneer Drew McDermott in his 1974 essay, “Artificial Intelligence Meets Natural Stupidity”?

Robust claims about AI systems are nothing new. As far back as 1965, early AI pioneer, Herbert Simon said that, “Machines will be capable, within twenty years, of doing any work a man can do.” A year later, in 1966, MIT’s Marvin Minsky claimed, with “certitude,” that, “In from three to eight years we will have a machine with the general intelligence of an average human being.” The problem is not just that these claims now look silly in hindsight. The real problem is that 60 years later, we still don’t have a clear understanding of what these claims come to.

Just to drive home the point. The real problem with those early claims about AI was not that they were excessively optimistic. The problem is that we don’t understand what is being claimed. What is “the general intelligence of the average human being,” anyway? Is there such a thing as “general intelligence?”

Let’s spend a minute on the question of general intelligence. It seems to suggest that there is just one kind or form of cognition that the human brain engages in. But why should this be so? When we build robots, we don’t say that they will have “general athleticism.” That is because we understand that there isn’t a single kind of athleticism. An Olympic weightlifter has a very different kind of athleticism than an Olympic gymnast. What this means is that different muscle groups and different muscle fibers are in play for different Olympic athletes.

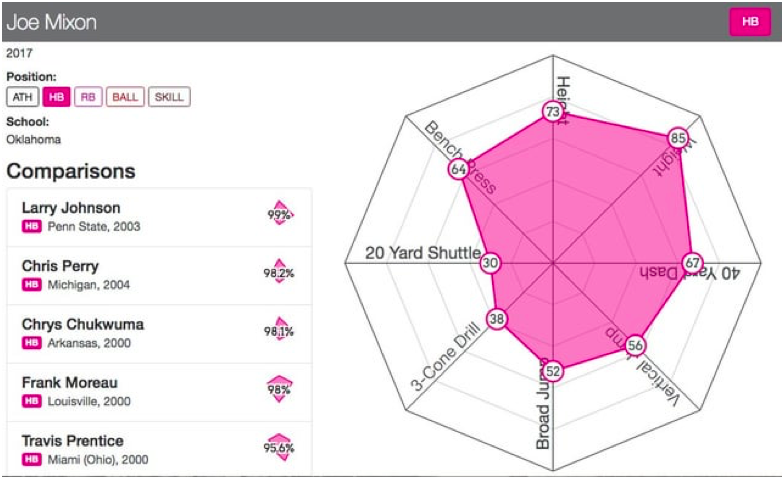

American football teams understand this about athleticism – it is not a general thing but involves different properties for different kinds of athleticism. The giant 145 kilogram “offensive linemen” on an American Football team have very different skills than a speedy 82 kilogram “wide receiver” on the same team. Thus, the National Football League relies on “spider graphs” to plot the different kinds of athleticism, ignoring the myth of general athleticism. Even within player positions, we understand that there are differences. For example, here is the spider graph of Joe Mixon, who became a very successful “running back” in the NFL.

The spider graph compares him to other players at his position. The point here is that for some reason, we become careless and less rigorous when we start talking about things like intelligence, consciousness, thought, and decision-making. Why? Perhaps because we know less about intelligence, consciousness, thought, and decision making than we do about how much weight someone can lift and how fast they can run. And when we don’t know what we are talking about, or can’t quantify what we are talking about, it opens the door for abuses.

In 1976, the early AI pioneer Drew McDermott wrote an article in which he called out the reckless claims that had been made about artificial intelligence in the 1960s and 1970s. He entitled the article, “Artificial Intelligence Meets Natural Stupidity.”

McDermott wasn’t saying that work in AI had been a bust, quite the contrary. He worked in MIT’s AI lab and was quite familiar with the things that had been accomplished. And by the mid-1960s, there was already a string of impressive achievements, including the following. • December 1955 Simon and Newell: The Logic Theorist,

• 1959 Arthur Samuel: Checkers

• 1961 First industrial robot.

• 1961 Slagel, solving integral problems in calculus

• 1965 Weizenbaum, ELIZA

• 1965 Feigenbaum, DENDRAL (first expert system)

• 1966 SHAKEY, first general purpose robot

What McDermott took issue with was the way in which his fellow researchers sometimes became careless in their claims. For example, he did not like the way they threw around words like “understanding.”

“Charniak <1972> pointed out some approaches to understanding stories, and now the OWL interpreter includes a “story-understanding module”. (And God help us, a top- level “ego loop”. <Sunguroff, 1975>)

Some symptoms of this disease are embarrassingly obvious once the epidemic is exposed. We should avoid, for example, labeling any part of our programs as an “understander”. It is the job of the text accompanying the program to examine carefully how much understanding is present, how it got there, and what its limits are.”

What is he saying here? His objection is that some words seem very important (words like “understanding”), and there is a tendency to want to apply these words to AI projects as though simply incanting those words can bring a phenomenon like understanding into existence. But the problem is that rather than tossing around these words carelessly, researchers should be thinking about what they are saying. Rather than saying something has understanding, or labeling something an “understander,” they should be explicitly addressing what understanding is, how much of such understanding is actually there, how it got there, and what the limits of the understanding are. The problem is that 50 years after McDermott’s essay, researchers are still throwing around words like “understanding” (including words like “language,” “consciousness,” “experience,” and “decision-making”).

It is also important to realize that the danger goes deeper than careless use of words. There is a manipulative aspect to word use. We might be using them to make AI programs deeper than they really are. Or, alternatively, the result might be that humans end up looking much shallower than they really are. It may be that we are trimming the meaning of “understanding,” and “experience,” and “decision-making” in a way that makes human experience, abilities, and responsibilities appear less profound than they actually are.

Word manipulation is not a new thing, but we sometimes nevertheless overlook the inherent dangers of the practice. The science fiction writer Philip K. Dick once said that "The basic tool for the manipulation of reality is the manipulation of words. If you can control the meaning of words, you can control the people who must use the words.”

This doesn’t mean that the old AI researchers had nefarious plans to manipulate people, any more than it means that researchers like Hinton do today. But sometimes researchers are careless in their language. And sometimes researchers deceive themselves. The consequences, as Philip K. Dick pointed out, are that we redefine our reality – in this case, redefining reality to make machines more important than they are, and to make us seem less important. And once again, we might ask, should the Nobel organization not have addressed these possibilities in their educational materials, or at least somewhere?

Conclusion

McDermott’s advice is good advice not only for the claims we make in our research, but also for the honors we dispense. That is to say, it is not only researchers that should show caution in the claims they make, but the institutions that fund and award those researchers. Those institutions have important responsibilities as well, and chief among those responsibilities is informing the public of the true nature of scientific contributions, the limits of those contributions, and the dangers of those contributions. In this case, we received none of that.

This is not to suggest that there is no educational component associated with the prize. As I noted above, there are educational materials, including videos, tutorials, and slide shows. And as I noted, there are even “student assignments” provided (https://www.nobelprize.org/uploads/2024/11/StudentAssignment_Physics_2024_NobelPrizeLessons.pdf). The problem is that the educational materials don’t discuss the origins of the research beyond Hebb, nor clarify its true relation to artificial intelligence, nor discuss any aspects of the risks involved. It is a colossal pedagogical failure.

In sum, The Nobel Prize organization led the public to think that Boltzmann Machines were pivotal components of subsequent work in artificial intelligence. They were not such. The Nobel Prize organization also misrepresented the originality of the idea of Boltzmann Machines, failed to contextualize the study of Boltzmann Machines, and failed to give credit to those who came before. Had it done so, it might have brought attention to earlier work that discussed the limitations and dangers of applying basic associationist ideas to human cognition. And had it done that, the Committee might have introduced the public to the ethical and moral limitations of AI architectures, a concern that has taken up much of Hinton’s attention. And finally, if the Committee was going to entertain the idea that these models illuminate how the brain works, they might have had the honesty to point out that human infants do not learn languages in the way that LLMs do today, nor could they.

Handing out prizes is easy. Responsible pedagogy is not so easy. But if an organization, any organization, wishes to be a leading public-facing representative of leading scientific research, then it needs to do better. It needs to be honest in its representation of the scientific discovery, contextualize it correctly within the sciences, explain the origins of the idea and give proper credit for those ideas, offer a correct assessment of the actual contributions of the new scientific work, and help us better understand the potential dangers. Anything short of this is a failure of its mission, or what its mission should be.

(Photo: Steve Pyke)

About the Author

Peter Ludlow is a permanent resident of Playa del Carmen, Mexico, and sometimes a resident of Miami and Medellin, Colombia. He works in and writes about various topics in philosophy, linguistics, cognitive science, artificial intelligence, virtual worlds, cyber rights, hacktivism, and blockchain technologies. He has also written on the topics of bullshit, why we should dissolve the American Philosophical Association, and why the latest book by former APA President Philip Kitcher is so terrible. MTV.com once described him as one of the 10 most influential video game players of all time (ALL time – going back to Aristotle!). He owns Bored Ape #1866.

His 3:16 interview is here.

His new book is here