Wise Pencils and Wandering Significance

Interview by Richard Marshall

'One of the most salient features of our improving intellectual economy lies in the fact that “our pencils often prove wiser than ourselves,” as Euler is alleged to have said. By this, he means that otherwise unwarranted doodling sometimes suggests pathways of reasoning that allow us to capture natural phenomena within our linguistic nets in fashions that we could not have anticipated beforehand.'

'I believe that Quine, through his misplaced allegiance to theory T doctrine, failed to consider the key places where the adaptive adjustments of progressive pragmatism are actually implemented.'

'As an evaluative term, “cause” functions in very complicated ways within science and everyday life, and its application is particularly sensitive to the specific background modeling strategy utilized. In his own manner, Leibniz recognized some of the basic distinctions involved.'

'I do not regard the “sublime aprioriness of mathematics” as a firm philosophical datum and instead emphasize the great utilities that its evolved tools offer for improving the qualities of our humanly feasible reasoning schemes.'

'Long ago I remarked that Wittgenstein’s writings function as a Rorschach test for philosophers: you can locate any doctrine you’d like in them thar words.'

'Cartwright has rightly noticed some of the methodological complexities inherent within real life modeling, but she has oddly reacted to such policies as if they represent scandals (her “lies”) rather than comprising rational tactics that philosophers need to understand better.'

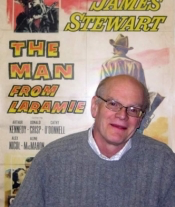

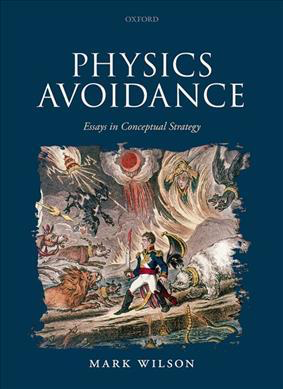

Mark Lowell Wilson investigates the manner in which physical and mathematical concerns become entangled with issues characteristic of metaphysics and philosophy of language; he is currently writing a book on explanatory structure. He is also interested in the historical dimensions of this interchange; in this vein, he has written on Descartes, Frege, Duhem, and Wittgenstein. Here he discusses why philosophers of science need fairly intricate scientific details, Euler's wise pencils, objections to standard “theories of concepts”, Quine, causes and Hume, what goes wrong when thinking about thinking and meaning, the applicability of maths to physical systems, set theory as the ‘science of strategy’, whether he's a pragmatic Wittgensteinian, Nancy Cartwright, and finally the role of the philosopher of science.

3:16: What made you become a philosopher?

Mark Lowell Wilson: My older brother George (who eventually became a well-regarded academic philosopher himself) would bring home books from college by Quine and Wittgenstein, which I would eagerly gobble up (for reasons only known to the teenage mind). Among them were the philosophy of science tutors of the era, including Israel Scheffler’s Anatomy of Inquiry (which was perhaps the best book of that stripe). But the examples supplied of purported “laws of nature” were often wan generalizations of the ilk “phosphorus smells like garlic.” Even then, I knew that this could not be the stuff of which substantive science is comprised. But I went to a dreadful high school, so I had to wait until college to take a proper introductory physics course. And the first week or so went alright (Newton’s three laws and all of that), but we soon shifted to beads sliding along wires. Well, I couldn’t figure out for the life of me how any of this new stuff followed from what had come before (I had absorbed a reasonable grasp of deductive rigor from Quine’s Methods of Logic). Plainly some practical wisdom must reside in proceeding so illogically, but I was damned if I could figure out what it was. And I have continued to mull this question over ever since. Sometimes I feel as if the compass of my entire career has been set by those bloody beads-on-wires.

3:16: You’re an expert in philosophy of science and one of the things you argue about is what you label the classical view about scientific theories and concepts as treated by modern philosophers. Can you first sketch for us what this classical view is and what’s the problem with it?

MLW: I don’t regard myself as primarily a philosopher of science but as a generalist in the mode of a Bertrand Russell or W.V. Quine. Indeed, the degree to which we have allowed our subject to fracture into a gulag of non-communicating sub-disciplines strikes me as a matter of considerable regret. But there are two primary reasons why fairly intricate scientific details have come to play an increasingly central role within my own thinking. The first reflects an old suggestion of my thesis advisor, Hilary Putnam: the well-documented history of science provides an excellent laboratory for investigating how descriptive vocabularies productively evolve over time. I am continually surprised to learn of the peculiar twists and turns that regularly emerge in this developmental context. Hilary later abandoned this agenda in favor of a Kripke-flavored apriorism, but I find that a less biased approach offers greater prospects for philosophical enlightenment.

Along this same path I’ve come to realize that schematic conceptions of “scientific structure” in the mode of the logical empiricists have seriously impaired our abilities to diagnose philosophical problems in the detail they require, even within subjects far removed from science proper. I often label these universalist propensities “the Theory T syndrome” due to the fact that issues are chiefly approached in a “Let T and T’ be two theories …” vein. Such loose discriminations encourage the conceit that philosophers can discourse glibly about “what science does” without much background in any topic beyond an undergraduate course in first order logic. In What is a Law of Nature? David Armstrong issues a proud proclamation of this character:

'[Even if philosophers know no actual laws, they] know the forms which statements of laws take ... It turns out, as a matter of fact, that the sort of fundamental investigations which we are undertaking can largely proceed with mere schemata of [the sort "It is a law that Fs are Gs"].... Our abstract formulae may actually exhibit the heart of many philosophical problems about laws of nature, disentangled from confusing empirical detail. To every subject, its appropriate level of abstraction.'

I can’t think of worse advice to offer a budding philosopher.

Let me immediately add that I don’t believe in the least that “good philosophy” invariably hinges upon a mastery of scientific doctrine. For reasons I’ll explain later, our subject characteristically demands a close attention to applicational details, but these can equally arise within the spheres of law or everyday moral evaluation. At the current moment, however, simplistic theory T pictures of “what science does” have distorted open-minded inquiry across a wide array of philosophical concerns. Too many theses are articulated through appeal to invidious contrasts: “Unlike in science, in field X we do Y.” Progress may remain stalled within these topics until these faulty “contrasts with science” become dislodged. Indeed, I became interested in the mathematics of loaded beams after talking with my brother about the faulty stereotypes upon which Donald Davidson relies in his disquisitions upon human action. I’ll come back to these concerns later.

3:16: You also point to the fact that there have been anti-classical accounts – but that many of these accounts are no better than what they’re aiming to displace and don’t go anywhere worthwhile. Again, can you tell us what these anti-classicist accounts claim and why you think they are not much help? So why are the legacies of ‘theoretical content’ so important and hinder clear thinking about concepts - why is it important to highlight these developmental stages within the philosophical careers of ‘concept’ and ‘theory’ and to highlight motivational fundamentals over current debates – after all, not many philosophers are talking about them when they talk about concepts and theories?

MLW: One of the most salient features of our improving intellectual economy lies in the fact that “our pencils often prove wiser than ourselves,” as Euler is alleged to have said. By this, he means that otherwise unwarranted doodling sometimes suggests pathways of reasoning that allow us to capture natural phenomena within our linguistic nets in fashions that we could not have anticipated beforehand. And even after we have entered these novel landscapes and availed ourselves of their empirical bounty, their underlying geography may continue to baffle and confuse, for we may fancy that we’ve reached India when it is actually the Bahamas. Catching up with the novelties to which our reasoning pencils have led us often represents a non-trivial intellectual task. I am fundamentally a pragmatic adaptationist at heart; our descriptive vocabularies gradually tighten their descriptive grip upon the world around us through gradually evolving better patterns for reasoning productively about it (other human developmental skills are important in these respects as well, but I have generally focused on adaptive reasoning patterns because the shaping roles of inferential advantage strike me as comparatively neglected within current philosophical thought).

My central objection to standard “theories of concepts,” be they classical in flavor or not, is that they rarely encourage a localized study of how these progressive adaptations unfold in operational detail (these frequently follow different patterns even with respect to subject matters that seem superficially similar). The very idea that philosophy requires a general “theory of concepts” strikes me as a mistake, a false ideal grounded in theory T misconceptions of task. Words like “concept” and “theory” represent useful classificatory terminologies within everyday discourse, but they also commonly shift their descriptive focus considerably according to context (in the same manner that regular terms such as “force” and “pressure” do). The hard-headed deflationists who blithely declare that “science has no need of ‘concept’ or ‘cause’” participate in the same neglect of symptomatic detail as their more “classical” counterparts. The “needs” are always there, but they are not always accommodated in the same way.

With respect to your original question, I would resist the presumption that some special notion of “theoretical content” (as opposed to “everyday concept”) exists. Ordinary words such as color terms depend upon supportive architectures every bit as complicated as those found in science, and I have concentrated upon the latter largely because clever applied mathematicians have already mapped out how some of the background apparatus actually works.

3:16: Why don’t you think Quine went far enough with his anti-apriorist stance?

MLW: I believe that, at core, Quine wished to articulate a “pragmatic adaptationism” much like my own; it lies at the base of his distrust of distinctions between the “analytic” and the “synthetic.” Unfortunately, the theory T predilections that he inherited from Russell and Carnap led him to freeze into rigidity the very joints within scientific reasoning that serve as the loci where the significant mutations of adaptive repurposing commonly arise. These diagnostic limitations largely trace to Quine’s desire to treat first-order logical arrangement as the chief methodological imperative of scientific development, in which all pertinent issues of inferential connection can be reduced to a “logically follows from premises A” format. But the chains of informational extraction that convince us that we have gained a more reliable grip upon natural behavior are rarely of this logical character; they are instead located within the longer strands of computation that scientists gradually cobble together, sometimes through essentially trial-and-error manufacture. As my avatar Oliver Heaviside once remarked, “Only afterward is the true logic of it known.” It is through this quasi-experimental “cobbling together” that science obtains the descriptive plasticity required to deal with the extraordinary complexities of the natural world. When we afterwards scrutinize these evolved patterns of reasoning in a post facto attempt to identify the strategic “logic” implicit in these practices, we commonly discover that the foundational percepts that we formerly regarded as constitutive of our inferential policies were profoundly mistaken. We had been fooled by what I like to call a “semantic mimic.”

In a nutshell, I believe that Quine, through his misplaced allegiance to theory T doctrine, failed to consider the key places where the adaptive adjustments of progressive pragmatism are actually implemented. These diagnostic limitations prevented his critique of “necessary truth” from probing as deeply as it might.

3:16: Hume famously couldn’t find causes and so dismissed them– was that because he was caught by the classical picture in some way and so was looking in the wrong place? And why do you like to go back to some of the early moderns – Leibniz in particular – is it because through them you can see the problems without the accumulating classical vision that has built up since? For example, what do you think Leibniz helps us see that is more difficult to see now?

MLW: As an evaluative term, “cause” functions in very complicated ways within science and everyday life, and its application is particularly sensitive to the specific background modeling strategy utilized. In his own manner, Leibniz recognized some of the basic distinctions involved, specifically by highlighting the differences between what we’d now call an equilibrium or steady state modeling (employing equations of so-called elliptic signature) and one that is evolutionary in character (using hyperbolic equations). These terminologies, of course, represent the modern codifications of applied mathematics, but they align fairly closely with what Leibniz called explanations involving “final” and “efficient” causes. As such, his two classes of “cause” correlate with important aspects of descriptive methodology that remain important to this day. Indeed, descriptive philosophy of science would be in considerably better shape if it had attended to these basic distinctions more closely. Hume, of course, did nothing of the sort and compounded the obfuscations by regarding impactive collisions of a billiard ball character as a prime paragon of what a successful “law of nature” should look like. Leibniz and Emilie du Châtelet realized that singular treatments of this type must piggyback upon smoother forms of underlying mathematical treatment, another moral that likewise holds firm with respect to real life billiard balls. In their own lingo, Leibniz and du Châtelet expressed these reservations in the form “nature does not make jumps,” and the distinctions to which they appeal remain relevant to understanding the complexities of “causation” as well.

Now if you are a priori determined to be univocalist about “cause”’s descriptive import (as most contemporary metaphysicians are inclined to be), then you will likely follow Hume in his rather empty “one size fits all” analysis of the term’s usage. Certainly, classical thinking about “conceptual content” either directly encourages this univocalism or significantly underestimates the wide divergencies in application that the term in fact displays. It’s a shame that the bulk of our philosophical literature pays so little attention to conventional applied mathematical wisdom on these scores.

3:16: Can you say what you think failure to consider our computational position has distorted when we think about thinking and meaning? What do you see as the big mistakes? Is all reference and truth local and context dependent? What are the most important implications of this for you?

MLW: I’d draw a distinction between different senses of “localization” here. Let’s begin with some evaluative terms whose word-to-world relationships are highly sensitive to localized application context. Prime examples for me are “force,” “cause” and “temperature.” After I wrote my first book (Wandering Significance), I was gratified to discover that huge gains in descriptive effectiveness can be achieved through the use of what computer scientists call “multiscalar architectures,” in which a network of submodels is linked together to simulate the interactions between scale lengths within a complicated substance such as a rock (an example I often cite). In particular, the technique utilizes clever forms of feedback loop in which the results within the individual submodels are subsequently subjected to an elaborate collection of cross-scalar checks-and-balances that correct for any localized modeling errors. In carrying out such policies, the descriptive imports of words such as “pressure” or “temperature” often become adaptively adjusted to suit the behaviors normally dominant at the particular scale length under consideration. These forms of localized coding can all be carried out without any need for descriptive vocabularies that span all scales in a universalist manner. It is likewise astonishing how many vital facts about the external world can become captured within the encompassing reasoning architecture itself, without being directly expressed as sentences anywhere (some of these schemes are truly beautiful in their division-of-computational-labor efficiencies). As such, these popular forms of computer modeling nicely precisify many of the looser suggestions I’d offered with respect to practical language use in Wandering Significance. So it was encouraging to find my hunches implemented in such concrete terms.

However, to get these checks-and-balances tactics to function properly, additional vocabularies are required to articulate the data relationships that knit the submodels together successfully (applied mathematicians call these inter-scalar comparisons “homogenizations”). It strikes me that, in everyday life, we employ the basic vocabularies of “truth” and “reference” as the natural vehicles for capturing these architectural relationships. In the case of our rock, we naturally say, “If a microscopic piece of the granite undergoes recrystallization, what becomes descriptively true on a macroscopic level? Answer: the rock turns into gneiss.” If we extend these ideas further, we can build up a portrait of “truth”’s practical utilities that is more closely allied to the spirit of Anil Gupta’s “revision theory” than it is to the Tarski T-schema. In these employments, the notion of “truth” doesn’t strike me as itself “perspectival” in a useful sense; it instead serves as a cross-perspectival terminology that we employ to correct for the scale-centered determinations of genuinely localized classifiers such as “force” and “temperature.”

By employing terms like “reference” in a similarly evaluative fashion, we can articulate our current impression as to how particular bits of language manage to correlate concretely with external circumstance within a given setting. Here our initial impressions on these scores often turn out to be deeply misleading (this is the phenomenon I called “semantic mimicry” earlier). Such considerations of word/world alignment can be reasonably classified as “metaphysical” in an old-fashioned sense, but the underlying issues cannot be properly addressed in the apriorist manner of a David Lewis (who covertly relies heavily upon the unhelpful distortions of theory T thinking). The devil really lies in the details, as the old saying goes.

3:16: How should we understand the applicability of maths to physical systems – how do we manage to bridge the gap between the sublime a prioriness of maths with scientific messy real world applicability? Is this another case where we need to understand our computational position in nature?

MLW: In the “Sixth Meditation,” Descartes suggests that we should determine the true characteristics of the external world by reverse engineering the perceptual systems we possess: “the world must be like X to explicate why we have been equipped with sensory receptors of type Y.” A judicious appraisal of exterior circumstance likewise demands that our reasoning policies be subjected to an allied form of “why do these computations produce reliable results?” critique (close attention to our gradually improving inferential capacities strikes me as a vital ingredient within a reasonable scientific pragmatism). Much of my research has concentrated upon the “semantic mimics” in which the worldly correlates of reasoning tactic X initially appear as if they reflect external conditions of type Y when closer scrutiny later reveals that they depend upon supportive arrangements of a different character Z (the science of optics is full of these referential surprises). Our mathematical heritage has developed a large array of sophisticated tests for gauging the potential reliabilities and defects of our reasoning tools, and many of these “tests” carry us into unexpected territories. Given two curves C and C’, how will they intersect when placed on top of one another? A successful answer requires that the interior characteristic of that little dimple at p on C be scrutinized closely. And the best way to do this is to blow up the point within a space of higher dimensions in which the hidden complexities sheltered within p become disentangled in a more humanly assessable manner (this tactic is called a “resolution of singularities”).

Why must we check our reasoning policies in this roundabout manner? An appropriate answer must begin with the basic limitations and biases inherent within the core capacities for reasoning that we have inherited from our hunter-gatherer ancestors. We find that we often can’t reliably evaluate the trustworthiness of the conclusions we reach about topic X until we have examined how allied policies behave when transferred to a setting Y of a seemingly disparate character. The familiar tactics of Euclidean proof supply sterling examples of the considerations I have in mind. Directly inspecting the situation of a right triangle with squares erected on its sides does not convey a reliable conviction that these areas add up in a Pythagorean manner, but after we encase the figure inside the fuller quiver of Euclidean constructions illustrated on the left of the diagram, the conclusion becomes forced upon us as unavoidable. But we have also learned that subtle cautions attach to these verification tactics as well. There are a host of variant reasoning pathways that will likewise carry us to the Pythagorean conclusion, sometimes in an easier to follow manner (e.g., the demonstrations that rely upon moving triangular pieces around). Wider experience, however, has also demonstrated that “proofs” of this second character are sometimes deceptive, which is why Euclid forbids appeal to motion in his demonstrations. The long and short of all of this is that framing trustworthy improvements upon our inherited collection of reasoning policies represents a delicate and ongoing intellectual task, in which we must often consider what arises within the faraway corners of recondite mathematics.

Returning to your original question, I do not regard the “sublime aprioriness of mathematics” as a firm philosophical datum and instead emphasize the great utilities that its evolved tools offer for improving the qualities of our humanly feasible reasoning schemes. The direct philosophical morals with respect to mathematics reside in the manner in which such thinking greatly enhances our abilities to reason productively about a wide range of topics, rather than reflecting a Platonic realm entirely unto itself. I don’t see any utility in crediting mathematical thinking with a single supportive “ontology” of its own.

I might remark that many of the nineteenth century opinions that Frege dismissed as “psychologistic” strike me as attempts to locate the “certainty” of mathematics within the improvements that its tools supply to human reasoning. I’ve recently written a little monograph on this background.

3:16: What is being claimed when you say that set theory should be regarded not as ontologically mysterious but as the ‘science of strategy’, the ultimate court of appeal whose authority rests on its historical success in illuminating inferential technique?

MLW: Embedding within set theory currently serves as our most reliable “final court of appeal” for certifying that, e.g., a suggested set of differential equations can be coherently credited with solutions. Or that a proposed sequence of “blow up” operations is genuinely well-defined. Careless critics of the subject often neglect these valuable verification capacities. On the other hand, I’m not inclined to follow those who contend that set theory provides a unitary “foundational ontology” for mathematics.

3:16: Some of your approach seems like a pragmatic Wittgensteinian approach. Would you characterize yourself as a Wittgensteinian in some sense?

MLW: Long ago I remarked that Wittgenstein’s writings function as a Rorschach test for philosophers: you can locate any doctrine you’d like in them thar words. In my researches I’ll often stumble across Wittgensteinian strains that strike me as potentially related to what I’ve been thinking about. Two pages later he’ll offer something completely incongruent with what I had supposed. So I really don’t know.

I will say that if my limited understanding of his themes is at all correct, he doesn’t follow his own methodological recommendations closely enough. As stated before, conceptual confusions frequently arise when our improving reasoning schemes get ahead of our appreciation of their supportive ingredients. Sorting out these tensions in a convincing manner generally requires close attention to procedural details that appear insignificant at first glance. Insofar as Wittgenstein offers concrete suggestions along these diagnostic lines, they are sketchy and provisional. He is too often content to linger at the level of enigmatic hints.

I also don’t endorse his suggestion that philosophy should be regarded as “therapeutic” or “corrective of misuses of language.” It’s inherent in the very notion of human progress that our pencils sometimes get ahead of our understanding and that we need to catch up afterwards. But that is not “therapeutic”; it’s a hallmark of intellectual advancement.

3:16: Given the pandemic and the role of scientific expertise in leading policy, how should we understand scientific claims given that they are based on a wide range of modeling traditions and inferential practices? Are they ever true or is someone like Nancy Cartwright right to think all scientists lie if they claim to be giving us truths – again, is she just oversimplifying – or even ignoring - the way in which the scientific notions such as law and cause and so on are entangled in real life uses?

MLW: Cartwright has rightly noticed some of the methodological complexities inherent within real life modeling, but she has oddly reacted to such policies as if they represent scandals (her “lies”) rather than comprising rational tactics that philosophers need to understand better. She generally ignores the extensive banks of applied mathematical literature devoted to such topics and writes as if they didn’t exist. A better role model for a philosopher of science can be found in my colleague, Bob Batterman, who has looked more deeply into the background of these same tactics and has isolated a number of important structural relationships that we typically overlook in our crude theory T simplifications. Indeed, many of the procedures that Cartwright characterizes as “put up” jobs represent the asymptotic methods that Bob has properly identified as crucial ingredients for stitching the fabric of science together. Techniques of this sort greatly enhance the overall reliability of science, rather than diminishing it.

3:16: Finally, as a take home, what is the role of the philosopher of science? Given that we have scientists who seem to be able to merrily do their practical thing without any help, why heed the philosopher?

MLW: Conceptual quandaries can arise within every walk of life, and anyone who can help straighten them out should qualify as a “philosopher” (lots of deep mathematical work strikes me as of this character). By cultivating a broad awareness of previous dilemmas, a philosopher can contribute significantly to improving our intellectual circumstances by helping to unravel these tangles. Unfortunately, fashions within our subject currently reward armchair pontifications with respect to “science” and “language” generally, without any of the informed attention to practical application upon which proper resolutions characteristically depend. An adequate understanding of the acts we call “lies” must surely attend to the architectural surroundings of such deeds. Did shopkeeper Jones behave dishonorably to customer Smith when Jones said X to Smith? On first blush, X might appear to represent a straightforward factual fib, but, in light of the corrective safeguards that prevail within the encompassing society, perhaps not. I here allude favorably to J. L. Austin’s work, for his methodology with respect to contextual detail is not altogether distinct from my own (another contemporary advocate of “scientistic philosophy,” Penelope Maddy, has acknowledged a similar affinity with Austin).

However, I will immediately stress that I have deliberately avoided straying into territories that carry any flavor of moral evaluation, partially because we first need to appreciate the positive utilities offered within the complicated checks-and-balances architectures of science, before we turn to broader stretches of evaluative application (which frequently conceal obnoxious social codes as well). I’ve never studied any of the writings of the French structuralists in any detail but believe that they frequently descended into implausible exaggeration through an eagerness to reach important social considerations prematurely. It’s better to first appreciate the merits of the benign forms of architectural complexity before we worry about the abuses.

It’s also the case that I simply lack the required sensitivities to the nuances of everyday linguistic practice that Austin exemplifies (my brother George is also much better at this than I am). But, as I remarked previously, admirable diagnostic skills of this character are much out of fashion nowadays, discouraged by the ersatz standards of “rigor” and “theory” characteristic of theory T thinking. So I see my own role as mainly one of tearing down these unhelpful obstacles.

ABOUT THE INTERVIEWER

Richard Marshall is biding his time.
