How Donald Trump's Bullshit Earned Him a Place in the History of Assertion

Interview by Richard Marshall.

Peter Pagin's area is philosophy of language, and within philosophy of language he has primarily taken an interest in the foundations of semantic theories and semantic concepts. In the last few years he has worked on the principle of compositionality for natural language. He has tried to develop two thoughts: on the one hand that compositional semantic theories contribute to explaining the success of linguistic communication, and on the other hand that precisely this explanatory role is the foundation of semantic concepts like truth and reference.

His interest in the philosophy of language has taken Pagin into areas bordering on other disciplines, like philosophy of mind (sensation terms), logic and formal semantics (compositionality), history of literature (point of view markers) and cognitive psychology and psychiatry (speakers with autism). He has also written about rules and rule-following, vagueness, synonymy, assertion and about modern philosophers like Wittgenstein, Quine, Davidson and Dummett. Here he discusses what holism and compositionality are, Fodor and Lepore's claim that they're incompatible, Davidson, radical interpretation and pragmatic enrichment, assertion and what it is, social and non-social and normative and non-normative theories of assertion, Tim Williamson, Paul Grice, Robert Stalnaker, Donald Trump, de se communication and uncentred vs centred worlds, then vagueness, Moore's paradox, whether we can intend to be misinterpreted, empty names and what we can learn about the nature of language from individuals or systems that are unlike normal speakers.

3:AM: What made you become a philosopher, and why philosophy of language?

Peter Pagin: It was a bit of an existential detour. I was already attracted to the subject in high school, to topics such as skepticism and induction. I even liked Venn diagrams and truth tables. After high school, however, and after having done a semester and a half of math, I drifted into novels while doing my military service. This led me on to psychology and existentialism, and eventually back to philosophy. After another disorganized year and a half, I started taking classes at Stockholm University. Then, as an undergraduate, I had a non-analytic orientation, attracted both to the later Wittgenstein and to continental philosophy.

There was a strong appeal in Wittgenstein’s writings on language and communication. This interest kept me away from Husserl’s transcendental phenomenology and instead pushed me towards the hermeneutical side of continental philosophy. That side proved increasingly thin, however. I did not find much of interest in Gadamer, for instance. I ended up writing my magister (master’s) paper on the Tractatus.

Still ambivalent, I applied to the PhD program at Stockholm University and was admitted. At that time, in early 1982, Dag Prawitz had just succeeded Anders Wedberg as professor of theoretical philosophy, returning from his position in Oslo. He introduced me to things I had not known before, such as proof theory and theories of truth. In particular, he introduced me to Dummett’s writings. There I found a depth in analytic philosophy that I had not seen before, but which I was now helped to discover. And clearly, logic and language stood out as the most central area, with the most intriguing questions.

3:AM: One of the issues that you’ve discussed at various times throughout your work is that of meaning holism and compositionality. For the uninitiated, could you first sketch out what these two terms of art are about, when they began to be seen as important to the contemporary philosophical scene and what the stakes are in this particular area?

PP: The basic idea of meaning holism is that what meaning a simple expression has depends on its interaction with other expressions in the same language. Expressions interact mainly because they combine to form sentences, and in combining to form a sentence, they interact because their meanings jointly contribute to the meaning of the sentence. In a sense, then, meanings come in clusters, not one by one.

Semantic compositionality is the idea that the meaning of a complex expression is determined by the meanings of its (immediate) parts and the way they are combined. This is rather intuitive and similar ideas have been around for a long time, for instance in medieval philosophy, even though the current versions are quite recent. To my knowledge, Barbara Partee was the first to state it in its contemporary form.

These two ideas are in tension. Compositionality seems to explain how you can understand new complex expressions that you have never heard before, because you know the meanings of the simple parts, and you know the semantic significance of putting parts together. Then, step by step, you can work out the meaning of the new sentence.
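The step-by-step procedure just described can be illustrated with a toy evaluator. What follows is a minimal Python sketch under simplifying assumptions, not a semantic theory from the interview: the lexicon, the three hypothetical modes of composition ("pred", "not", "and") and the identification of meanings with extensions in one fixed situation are all illustrative choices. It shows only the structural point: the value of each complex expression is computed from the values of its immediate parts and the rule for combining them, so a sentence never encountered before still gets a value.

```python
# Hypothetical toy lexicon: names denote individuals, intransitive verbs
# denote the set of individuals they are true of (in one fixed situation).
LEXICON = {
    "Ann": "ann",
    "Bo": "bo",
    "sleeps": {"ann"},
    "runs": {"bo"},
}


def meaning(tree):
    """Return the meaning of an expression, computed from its immediate parts."""
    if isinstance(tree, str):  # simple expression: look its meaning up
        return LEXICON[tree]
    mode, *parts = tree  # complex expression: (mode of composition, parts...)
    values = [meaning(part) for part in parts]
    if mode == "pred":  # name + predicate -> truth value
        subject, predicate = values
        return subject in predicate
    if mode == "not":
        return not values[0]
    if mode == "and":
        return values[0] and values[1]
    raise ValueError(f"unknown mode of composition: {mode}")


# A sentence that need never have been encountered before:
# "Ann sleeps and it is not the case that Bo sleeps".
novel = ("and", ("pred", "Ann", "sleeps"), ("not", ("pred", "Bo", "sleeps")))
print(meaning(novel))  # True
```

Nothing in the evaluator is specific to the novel sentence: knowing the four lexical entries and the three combination rules is enough to compute it.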

By holism, on the other hand, it seems that in many cases you need to know the meaning of complete sentences before you know the meaning of their parts, opposite to what compositionality seems to suggest. Clearly, it is not obvious how these two ideas go together, and yet, both seem true.

3:AM: Fodor and Lepore are two of the big guns that have argued that meaning holism and compositionality are mutually exclusive, haven’t they? Compositionality looks right to me so the holism has to go. This would cause big problems for the likes of Donald Davidson, Brandom, Churchland and McDowell et al, wouldn’t it? Is this how you see it or am I getting it all wrong?

PP: Among those you mention, Donald Davidson is the one whose philosophy I know best. In Davidson’s theory, one version of semantic holism is built-in, but it is compatible with compositionality. The idea is this. As an interpreter you notice that a speaker (of a language that perhaps is new to you) holds certain sentences true. Suppose you have a hypothesis about their syntax: which expressions they contain and how these expressions are combined. Now, you assume that the language has a compositional semantics. You will try to find meanings of simple expressions together with the significance of the modes of composition such that the resulting meanings of whole sentences, together with extra-linguistic facts, render a sentence true if it is held true by the speaker.

It is a bit like solving a system of equations: find the values for the variables that make the equations come out true. A value given to one variable constrains the possible values for the others. How this is done is determined by the form of the equations. Similarly, the meaning assigned to one expression constrains the possible meanings assigned to others. And compositionality is assumed in determining how this is done. So, on this view, holism in fact depends on the assumption of compositionality.
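The equation analogy can be made concrete with a brute-force search. In this minimal Python sketch, the speaker's words ("ka", "lu", "zib", "mop"), the toy facts and the held-true sentences are all hypothetical, and the syntax is assumed known in advance, as in the answer above. A candidate interpretation is accepted only if, under one fixed compositional rule, every held-true sentence comes out true.

```python
from itertools import permutations

# Hypothetical extra-linguistic facts the interpreter can observe.
FACTS = {"sleeps": {"ann"}, "runs": {"bo"}}
INDIVIDUALS = ["ann", "bo"]

# Hypothetical unfamiliar vocabulary. The syntax is assumed known:
# every sentence is a (name, predicate) pair.
NAMES = ["ka", "lu"]
PREDICATES = ["zib", "mop"]

# Sentences the speaker is observed to hold true.
HELD_TRUE = [("ka", "zib"), ("lu", "mop")]


def true_under(sentence, names, preds):
    """Fixed compositional rule: true iff the name's referent has the property."""
    name, pred = sentence
    return names[name] in FACTS[preds[pred]]


def interpretations():
    """Yield every assignment of word meanings that makes all held-true sentences true."""
    for referents in permutations(INDIVIDUALS):
        names = dict(zip(NAMES, referents))
        for properties in permutations(FACTS):
            preds = dict(zip(PREDICATES, properties))
            if all(true_under(s, names, preds) for s in HELD_TRUE):
                yield names, preds


solutions = list(interpretations())
print(len(solutions))  # 2
# Fixing one value constrains the rest: if "ka" refers to Ann, "zib" must mean sleeping.
print([preds for names, preds in solutions if names["ka"] == "ann"])
# [{'zib': 'sleeps', 'mop': 'runs'}]
```

On these toy data two interpretations survive, but within each one the assignments hang together: fixing the referent of one name fixes the meaning of the predicate it combines with, and through the other sentence the rest of the vocabulary.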

One difference between interpretation and equation solving is that, in the interpretation case, if you cannot find a total hypothesis that makes it all add up, you may conclude that some of the sentences in fact aren’t true; they are just held true by the speaker because the speaker has a false belief. The interpretation was right, but the speaker was wrong. You can’t treat a system of equations like that.

Fodor and Lepore argued, based on the views they ascribed to Davidson, and on their understanding of so-called inferential role semantics, that if some speaker changes his mind, e.g. starts holding a sentence false that he previously held true, then the meaning of the sentence, and therefore also the meaning of at least one of its parts, must change as well. And then, because of the pervasive interdependence of meaning between expressions in the language, the meanings of all expressions change, and thereby also the contents of all beliefs expressed by its sentences. This is the so-called instability argument: you can’t change your mind. By the same token, two speakers cannot disagree over just one proposition.

However, as several philosophers have pointed out, me among them, holism does not require that the meaning of a sentence changes just because a speaker changes his attitude to it. For instance, the same meaning assignment to the sentence as before may still be part of the best interpretation of the speaker. If so, the speaker just changed a belief. More generally, holism itself does not require that meaning changes at all just because there is some change in the non-semantic facts on which meaning supervenes.

3:AM: Where does this leave the idea of radical interpretation and pragmatic enrichment?

PP: Radical interpretation as Davidson conceived it is based on the idea that it is possible to identify a speaker’s attitude to a sentence (holding true, holding false, being agnostic) without knowing what the sentence means. And then the interpreter, as we said above, shall find meanings of words together with the significance of modes of composition that combine into a meaning that, together with the facts, makes the sentence true if held true. It is assumed that the speaker’s attitude to the sentence is the result of two factors: what the sentence means and what the speaker believes.

But suppose that the speaker’s attitude is determined by more than the meaning of the sentence and the speaker’s beliefs. Suppose that the speaker reads more into the sentence than what is literally expressed. In the pragmatics literature, this is called enrichment. One of the most famous cases is from Robyn Carston:

(1) He handed her the key and she opened the door.

A standard reading of this sentence adds material in the understanding, as follows:

(1′) He handed her the key and she opened the door [*with the key that he had handed her*].

The bracketed italicized phrase in (1′) displays what we are disposed to read into (1).

As the current pragmatics literature shows, such enrichments are ubiquitous. But what happens then with radical interpretation? If the speaker holds the sentence true in part because of the enrichments, then maybe the interpretation goes wrong if the interpreter only fixes the meanings of the words.

I have argued that interpretation does not go wrong. This is because, if the enriched sentence is true, so is the original sentence. The former entails the latter. You get the right interpretation by finding meanings of the parts that make the original sentence true.

But there appears to be a complication in negative contexts. Look at

(2) a. He handed her the key but she didn’t open the door.

b. He handed her the key but she didn’t open the door [with the key that he handed her].

Here (2b) doesn’t entail (2a). Does interpretation go wrong in this case? No, because in this case, the enrichment isn’t made. We don’t read the enrichment of (2b) into (2a). We read that sentence as saying that she didn’t open the door at all, not just not with that particular key. Hence, the interpretation method still works. I have argued that this is the general pattern.
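The entailment pattern can be checked in a small possible-worlds model, with each sentence treated as true or false at a world and entailment as truth-preservation across all worlds. This Python sketch is a hypothetical simplification: a world records only whether the key was handed over, whether the door was opened, and whether it was opened with that key, and "but" is treated truth-functionally as "and".

```python
from itertools import product

# Hypothetical toy worlds: (key handed over, door opened, opened with that key).
# A door cannot have been opened with the key unless it was opened at all.
WORLDS = [
    (handed, opened, with_key)
    for handed, opened, with_key in product([True, False], repeat=3)
    if opened or not with_key
]


def entails(p, q):
    """p entails q iff q is true in every world in which p is true."""
    return all(q(w) for w in WORLDS if p(w))


def s1(w):   # (1)  He handed her the key and she opened the door.
    return w[0] and w[1]


def s1e(w):  # (1') ... she opened the door [with the key he had handed her].
    return w[0] and w[1] and w[2]


def s2a(w):  # (2a) He handed her the key but she didn't open the door.
    return w[0] and not w[1]


def s2b(w):  # (2b) ... she didn't open the door [with the key he handed her].
    return w[0] and not (w[1] and w[2])


print(entails(s1e, s1))   # True: the enriched reading entails the literal one
print(entails(s2b, s2a))  # False: here the enriched reading does not entail (2a)
```

The failing case is the world where she opened the door, but not with that key: (2b) is true there and (2a) false.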

3:AM: This leads to another related big issue you have thought long and hard about - assertion. This is an area that involves many dimensions of concern for philosophy of language – pragmatics, speech acts, norms, intentions, cognition and self-representation et al – so perhaps you could give us a brief tour of the geography of this issue?

PP: Let’s focus on the big question: What is assertion? – and ignore many other important questions, e.g. concerning indirect assertion and presupposition, as well as truth and knowledge attributions. We can categorize the main types of assertion theory by two independent distinctions: between social and non-social theories and between normative and non-normative theories. Social theories characterize assertion in terms of its social significance: to assert something is to somehow change the relation between speaker and hearer. Normative theories characterize assertion in terms of norms governing the practice of assertion.

The currently dominating type of theory is normative and non-social. It says that the speech act type of assertion is governed by a norm that is unique to assertion, i.e. does not govern anything else. Often it is said that the norm is constitutive of assertion, in a way analogous to the way games are constituted by their rules. The first one to propose this way of thinking about assertion was Tim Williamson, in 1996. He proposed the knowledge norm:

(K) One must: assert p only if one knows p.

Others have later proposed alternative norms, such as the truth norm and the belief norm:

(T) Assert only what is true.

(B) Assert only what you believe.

These accounts relate the speaker to the norm, not to the hearer, although norms relating speaker and hearer have also been suggested. For instance, Manuel García-Carpintero has proposed such a theory, a theory which is both normative and social. Sandy Goldberg’s theory in his recent book attaches great significance to the role of assertion in the social transfer of knowledge. However, the norms he takes to be viable candidates are all epistemic in character, not intrinsically social.

Another type of normative and social account is the normative commitment type: in making an assertion to a hearer, the speaker incurs a commitment to the hearer. If what she asserts isn’t true, the speaker fails to live up to this commitment. According to some other versions, the commitment concerns e.g. backing up the assertion with evidence if challenged, or accepting blame if the assertion is flawed. Such accounts are normative in case the theory says that the commitment obtains even if the speaker does not regard herself as so committed: the speaker just makes the assertion, and then there is a norm that creates the commitment.

There are also non-normative commitment type accounts. According to such a theory, it is a matter of speaker psychology that the commitment is made: making an assertion that p simply consists in committing oneself to the truth of p. In this case, if the speaker does not regard herself as having made the commitment, then she hasn’t made the assertion. It is not always clear whether a theorist uses ‘commitment’ in the normative or in the non-normative sense. Either way, these types are social types: making an assertion consists in changing the relation between speaker and hearer. After the assertion has been made, the speaker has a new commitment to the hearer.

The other main type of non-normative social theory characterizes assertion in terms of communicative intentions. This means that the speaker makes an assertion just in case the utterance is made with certain intentions concerning the resulting mental state of the hearer. Such a mental state can be e.g. to believe what the speaker has said, or to recognize what the speaker intended.

These types of account all derive from Paul Grice’s 1957 work on meaning, where a speaker’s non-naturally meaning something consists in having a set of communicative intentions. These accounts are social in that assertion is characterized in terms of hearer-directed intentions.

We can add here a theory that has been attributed to Robert Stalnaker: to make an assertion is to propose an update to the common ground in a conversation. The common ground, in Stalnaker’s theory of the dynamics of conversation, is what speaker and hearers mutually accept for the purpose of the conversation. The speaker proposes to the hearers to add the proposition asserted to what is accepted. However, Stalnaker has also acknowledged other ways of making such proposals than by asserting (in particular, assuming).

Finally, there are non-normative, non-social theories, which are more of a cognitive nature. This is the least popular type, and my own theory belongs to it (so does Mark Jary’s). The brief statement of my own theory is that an utterance is an assertion just in case it is prima facie informative, i.e. prima facie made (partly) because it is true. This means inter alia that part of the speaker’s reason for making the utterance is that the proposition expressed is true (i.e. by the speaker’s lights). And a hearer who takes the utterance as an assertion treats the making of it as a prima facie reason for believing the proposition. This theory relates an utterance to a degree of belief in its content, either in making the utterance or in observing the utterance. No thoughts about the speaker or about the hearer need be involved.

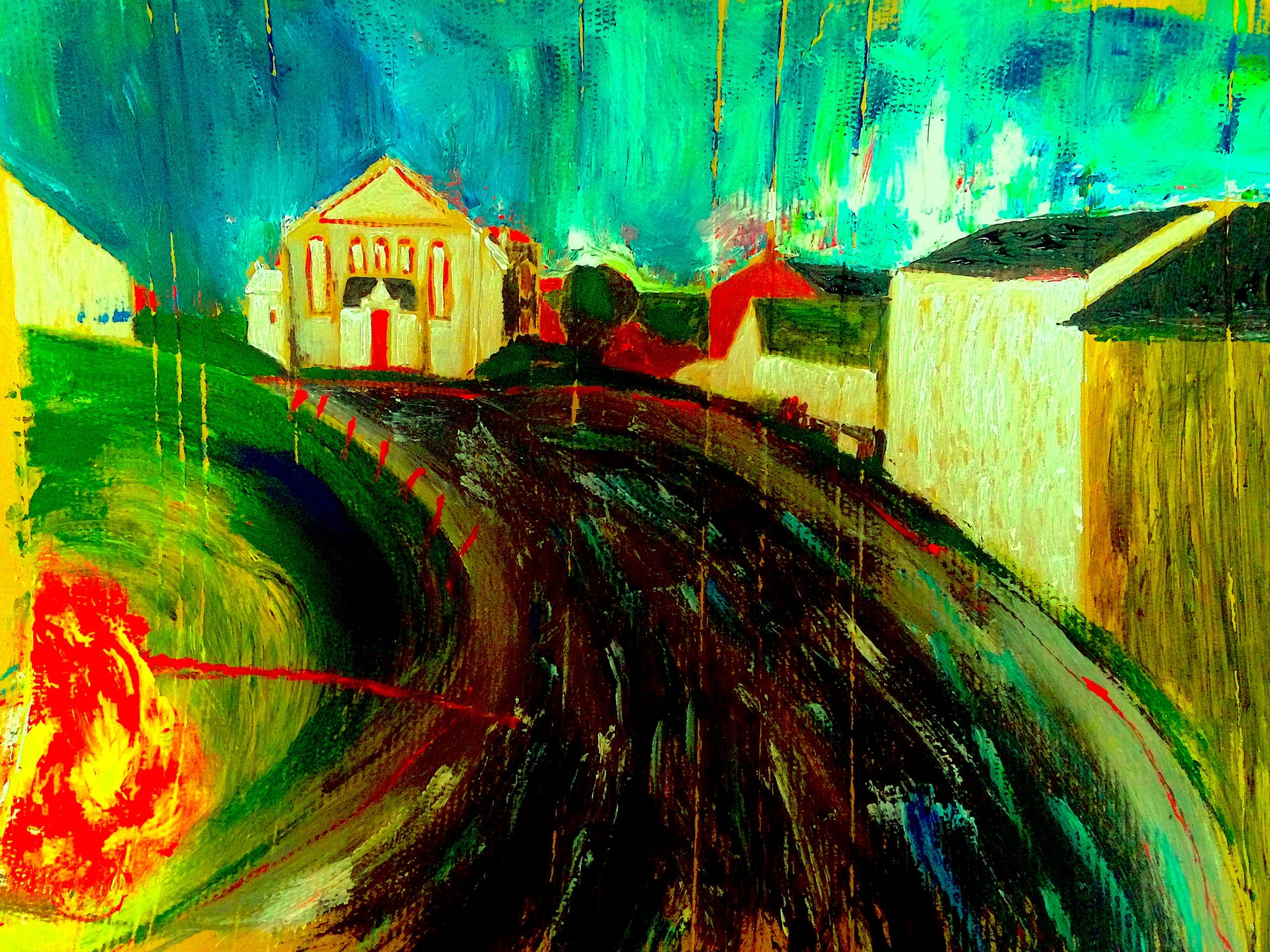

This theory stresses the central role of ways of expressing a proposition so as to make it come across as worthy of belief. These are the ways by which we recognize utterances as assertions. Basic features are grammatical form (typically, declarative sentence type) and prosodic profile (e.g. falling pitch). But other features of the mode of delivery play a role as well, making some particularly expressive speakers more easily perceived as trustworthy, irrespective of whether they are. Donald Trump is a case in point. Despite a documented record of 5-6 false or misleading public statements per day, polls show that more than a third (37 percent) of the people asked perceived Trump as honest. He has earned a place in the history of assertion.

3:AM: Are you saying that actually we don’t really need norms at all and that when we look at empirical evidence for their existence we can’t find any?

PP: I don’t think the (possible) existence of norms of assertion is a problem in itself. What is problematic is the job they are supposed to do in theories that postulate them, and the evidence that has been marshaled for their existence.

One main problem with norm theories of assertion is that they typically involve the claim that knowledge of the norm of assertion (preferred by the theory) is what constitutes the understanding of what assertion is. For instance, on the knowledge account (Williamson’s), the speaker knows what assertion is by knowing that it is the unique speech act governed exactly by the knowledge norm.

Now if that is so, and if we all take part in the same assertoric practice, and all know what we are doing in making assertions, it follows that we all accept the same norm. But, as a matter of empirical fact in the philosophy of assertion literature, we don’t. Some of us reject norms, but even among those who accept that assertion is norm-governed, theorists differ substantially over what the norm is. Not only can’t they all be right, but according to the part that they all agree on – that we know what assertion is via knowing what norm governs it – most speakers, or at least most assertion specialists, either don’t know what assertion is, or else know what they themselves are doing (knowledge-asserting, perhaps) but are wrong about what the others are doing (something else, like truth-asserting, or belief-asserting, or ...). In fact, theorists seem to agree about which utterances are assertions, but they often disagree about when they are correct (in the sense of being in accordance with “the norm of assertion”). The idea that they know what assertion is by knowing which norm governs it then seems utterly misguided. I have characterized it as putting the cart before the horse.

A second problem with the norms of assertion literature is the appeal to intuitions as evidence. The evidence here is of the form: asserting p in circumstances c is proper/improper (correct/incorrect). There is a widespread tendency to treat intuitions that seem to tell against one’s own theory as tracking something other than correctness in the strict sense (“proper warrant”), such as “blamelessness” or “secondary propriety”. This makes the theories hard to falsify. The situation is even worse, since in several cases, the theory is expanded to include secondary propriety as an alternative status, which means that intuitions that prima facie disconfirm the theory actually confirm it. The theories are then not just hard to falsify, they are even hard not to verify, by appeal to intuition. Since this holds for several, mutually incompatible theories, a massive underdetermination results: a report that a subject finds an assertion correct/incorrect will be treated by different theories as tracking different properties and hence as confirming different theories. The situation is clearly untenable.

Finally, I have objected to the appeal to linguistic evidence. The evidence proposed has the form of conversational patterns, such as responding with “How do you know that?” to an assertion. This is claimed by many, including Williamson himself, as supporting the knowledge norm. I have argued that evidence of this kind is neutral between normative accounts and corresponding non-normative alternatives. For instance, the non-normative alternative theory that a speaker who asserts that p represents herself as knowing that p (a social or non-social theory, depending on what representing oneself amounts to), would be equally well supported by the pattern in question as the normative theory. All in all, my view is that the norm approach to assertion currently is not in good standing.

3:AM: Doesn’t this deal a heavy blow against the likes of Wittgenstein, Quine, Davidson, Dummett and Kripke, who are all committed to norms, aren’t they?

PP: Well, Quine and Davidson were anti-normativists, so they would have no trouble. Kripke, and perhaps Wittgenstein, were in favor of norms of meaning, but it is not so clear that either was in favor of norms of assertion, in the current sense, although Kripke spoke of correct assertions. Dummett also spoke of correct/incorrect assertions, but not with an appeal to norms in the current sense. He is still a little different, since he in addition made appeal to conventions of assertion. He did not, however, try to explain what assertion is by appeal to such conventions. One of Dummett’s memorable statements on the matter is this: “A man makes an assertion if he says something in such a manner as deliberately to convey the impression of saying it with the overriding intention of saying something true.” (Frege: Philosophy of Language, p. 300).

3:AM: What’s the issue about de se communication and uncentred or centred worlds about? What difference does it make if we assume a centred or uncentred world here? And where do you stand on this?

PP: The brief history of the question is as follows. To some extent anticipated by Hector-Neri Castañeda, John Perry in the late 1970s introduced the problem of essential self-thoughts, de se thoughts, which are thoughts of a subject X that are not only in fact about X, but (subjectively) essentially so. If I think “I am hungry”, this is a thought essentially about me, but if I think “Peter Pagin is hungry”, it is not. I might be under the false belief that Peter Pagin is someone else. Even if it in some sense is metaphysically necessary that I am Peter Pagin, it could have been that, for all I knew, I might not be him.

The problem discovered has two sides. On the one hand, knowing that I am Peter Pagin seems to be a piece of knowledge that I might and might not have, and therefore to be a possible belief content. On the other hand, (then) current models of belief content did not acknowledge such distinctions in content. For instance, on Kripke’s and Kaplan’s accounts, there is no difference in content between “I am hungry”, as uttered or thought by Peter Pagin, and “Peter Pagin is hungry”, as thought by anyone. Moreover, description theories would not help, because for whatever description “the F” I might associate with my name, I could doubt that I am its referent.

So, what to do? The question was discussed at the time by Perry, Kaplan, David Lewis, and Stalnaker. Perry himself distinguished between the belief content and the belief state, where the de se character was manifested in the belief state but not in the content. Lewis had a different idea. In 1979, he proposed a new type of content, which he then called properties but which today are usually called centered-worlds propositions. They are typically modeled as sets of pairs 〈w,c〉 of a possible world and a center, and the center is itself usually a pair 〈x,t〉 of a person x and a time t. On this idea, the indexical sentence “I am hungry” expresses a centered-worlds proposition p, true at a centered world 〈w,〈x,t〉〉 just in case x is hungry at t in w.

As Lewis noted, subjects don’t believe propositions in the traditional sense, but rather self-ascribe centered-worlds propositions. You and I can self-ascribe the same centered-worlds proposition, expressed by each of us by means of “I am hungry”. For Kaplan, we would thereby express different contents, but for Lewis it is one and the same. You ascribe the proposition to yourself at a time, and I ascribe it to me, at perhaps the same time, and in the same world. Ascribed to me it is true, and ascribed to you it is, perhaps, false. The de se element enters exactly in the self-ascription part: the thinker does not represent herself in the content of the sentence “I am hungry”. By contrast, “Peter Pagin is hungry” represents a particular person by that name as being hungry, regardless of who is in the center in the act of self-ascription. There is therefore, in Lewis’s model, a difference in content between the de se ascription of hunger to oneself and the ascription of hunger to a represented person who in fact is identical with oneself.
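Lewis’s model can be sketched in a few lines of Python. Under the toy assumption that hunger facts are simply listed (the worlds, times and the individual "hearer" are hypothetical), “I am hungry” is a function from centered worlds 〈w,〈x,t〉〉 to truth values, and self-ascription evaluates it with the thinker at the center. The same content then comes out true when Pagin self-ascribes it and false when a hearer who is not hungry does, whereas “Peter Pagin is hungry” ignores who occupies the center.

```python
# Hypothetical toy facts: (world, person, time) triples at which the person is hungry.
HUNGRY = {("w1", "pagin", 1)}


def i_am_hungry(centered_world):
    """'I am hungry' as a centered-worlds proposition:
    true at <w, <x, t>> iff x is hungry at t in w."""
    w, (x, t) = centered_world
    return (w, x, t) in HUNGRY


def pagin_is_hungry(centered_world):
    """'Peter Pagin is hungry': the person at the center plays no role."""
    w, (_x, t) = centered_world
    return (w, "pagin", t) in HUNGRY


def self_ascribe(proposition, world, thinker, time):
    """Self-ascription: evaluate the proposition with the thinker at the center."""
    return proposition((world, (thinker, time)))


# One and the same content, self-ascribed by two thinkers in the same world and time:
print(self_ascribe(i_am_hungry, "w1", "pagin", 1))   # True
print(self_ascribe(i_am_hungry, "w1", "hearer", 1))  # False
# The named-person content does not depend on who is at the center:
print(self_ascribe(pagin_is_hungry, "w1", "hearer", 1))  # True
```

In this representation the de se element lives entirely in `self_ascribe`, not in the proposition itself, which mirrors the point that the thinker does not represent herself in the content.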

So far, so good. In 1981, Stalnaker pointed out that this model spells trouble for communication. If I tell you “I am hungry”, and you, as in the standard communication model, react by thinking what I am thinking, you will not think that I am hungry, but that you are hungry, because you will self-ascribe the same content. Hence, the standard model of linguistic communication is incompatible with the theory of centered-worlds propositions as the meanings of sentences.

This is how the matter stood until a little over ten years ago, when interest in relativism increased. Several people, including Andy Egan and Dilip Ninan, turned to the communication problem again. Ninan and others proposed various new solutions to it. Could any of those solutions work?

I had been impressed with the communication objection against centered-worlds propositions and I had come to the conclusion that requirements on communication speak in favor of the classical proposition. Standard models of propositions represent them as sets of indices, whether these indices are possible worlds, pairs of worlds and times, centered worlds, or yet something else. The classical proposition, modeled as a set of possible worlds, has the feature that speaker and hearer are guaranteed to be at the same index. This means that when the proposition intended by the speaker is the same as the one recovered by the hearer, the truth value of the speaker proposition will be the same as that of the hearer proposition. Thereby, the classical proposition is well suited for the transfer of knowledge: it provides a better grasp of how testimony works, for I can come to know what you know thanks to your telling me and me believing the same as you. This condition is not met by centered-worlds propositions or other relativistic propositions, such as Kaplan’s temporal propositions (sets of world-time pairs).

That is why I was interested in learning whether any of the solutions to the communication problem for centered-worlds propositions would work. I ended up arguing, successfully I still think, that none of them does. Centered-worlds propositions are fine as contents of thoughts at particular times, but as soon as it concerns transferring a thought, by communication to another person, or via memory through time to oneself, they get you into trouble. All the tricks I have seen for saving them either don’t work at all, or lead to new problems, or else reduce the centered-worlds propositions to classical propositions by undoing what is peculiar to them.
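The contrast drawn above, between propositions that keep speaker and hearer at the same index and propositions that do not, can be put in a minimal Python sketch. The worlds, the individuals and the hunger facts are hypothetical, and times are omitted for brevity. A classical proposition is a set of worlds, and everyone is evaluated at the actual world; a centered-worlds proposition is a set of world-center pairs, and each thinker is evaluated at the actual world centered on herself.

```python
# Hypothetical toy model: the actual world is "w1"; times are omitted.
ACTUAL_WORLD = "w1"

# Classical proposition: the set of worlds at which Pagin is hungry.
PAGIN_IS_HUNGRY = frozenset({"w1"})

# Centered-worlds proposition for "I am hungry": the set of (world, center)
# pairs at which the person at the center is hungry. Only Pagin is hungry in w1.
I_AM_HUNGRY = frozenset({("w1", "pagin")})


def classical_truth(proposition, thinker):
    """Every thinker is evaluated at the same index, the actual world;
    `thinker` is deliberately unused."""
    return ACTUAL_WORLD in proposition


def centered_truth(proposition, thinker):
    """Each thinker is evaluated at her own index, the actual world centered on her."""
    return (ACTUAL_WORLD, thinker) in proposition


# The speaker (Pagin) and a hearer who recovers exactly the same proposition:
print(classical_truth(PAGIN_IS_HUNGRY, "pagin"),
      classical_truth(PAGIN_IS_HUNGRY, "hearer"))  # True True
print(centered_truth(I_AM_HUNGRY, "pagin"),
      centered_truth(I_AM_HUNGRY, "hearer"))       # True False
```

Sharing the classical proposition guarantees a shared truth value, which is what the transfer of knowledge needs; sharing the centered proposition does not.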

Of course, this lands us back on square one as regards the de se. On the other hand, I also have some ideas about that, connected with my general views on communicative success.

3:AM: So can you summarise your thinking on assertion before we move on?

PP: Assertion is one of the most basic forms of language use and one of the most common forms of communication. We don’t theoretically understand what speakers do in asserting, and what hearers get in comprehending, if we don’t have a model both of the content of what they are saying and of the significance of saying it in the ways characteristic of assertion, i.e. with assertoric force.

I still think the theory sketched at the end of my answer to question 5 above, which I proposed in the Jessica Brown & Herman Cappelen volume from 2011, is basically correct. An utterance is assertoric just in case it is prima facie informative, i.e. made partly because it is true. Other types of speech act are not related to truth in that way.

3:AM: Paradoxes throw light on how language and meaning work by highlighting breakdowns in assumptions. You’ve been writing about this since pretty much the beginning when you reviewed Sorensen’s Blindspots book. One issue you’ve raised about vagueness and the sorites is about ‘phenomenal vagueness’ and the non-transitivity issue. Can you say what the problem is here and why you think Raffman and Fara are wrong to think that non-transitivity is not an issue and that we still face the problem of appearances as identified by Dummett and Armstrong?

PP: Our knowledge of the world is fallible. It can easily be that things are not the way they seem. But at least I know how things seem to be. It cannot really be that an object seems red to me but I'm wrong about that, and believe it seems green. Or so it seems.

David Armstrong and Michael Dummett argued against this view about appearances. The structure of the argument is the following. First, we assume that there are definite appearance properties. Second, we assume that appearance properties are what they seem: if an appearance property seems to be such-and-such, then it is such-and-such. Third, we note, as a matter of empirical observation, that seeming sameness of appearance is not transitive. That is, we can observe two patches, #1 and #2, together, and they seem to be of the same color. That is, it seems that their color appearances are identical. Hence, by the principle that appearances are what they seem, these two appearances are identical. Then we compare patch #2 with patch #3, and they too seem identical in color, and hence their color appearances are identical as well. By the transitivity of identity, the appearance of #1 is also identical with the appearance of #3. However, when we compare #1 directly with #3, they seem different, and therefore, again, they are different. But now we have a contradiction.
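The structure of the argument can be reproduced mechanically. In this Python sketch, the numeric color values and the discrimination threshold are hypothetical assumptions standing in for the empirical observation that seeming the same is not transitive. The second premise, that appearances are what they seem, is modeled by merging any two patches that seem the same into one identity class; because identity is transitive, the merge then places #1 and #3 in the same class even though they seem different.

```python
# Hypothetical assumptions: each patch has a numeric hue value, and two patches
# seem the same color when their values differ by less than a threshold.
THRESHOLD = 1.0
PATCHES = {"#1": 0.0, "#2": 0.6, "#3": 1.2}


def seem_same(a, b):
    """Observation: do patches a and b seem to be of the same color?"""
    return abs(PATCHES[a] - PATCHES[b]) < THRESHOLD


def appearance_classes():
    """Premise 'appearances are what they seem': patches that seem the same
    have identical appearances. Identity is transitive, so merge into classes."""
    classes = {p: {p} for p in PATCHES}
    for a in PATCHES:
        for b in PATCHES:
            if seem_same(a, b):
                merged = classes[a] | classes[b]
                for p in merged:
                    classes[p] = merged
    return classes


classes = appearance_classes()
# Pairs forced into one identity class although they seem different:
contradictions = [(a, b) for a in PATCHES for b in PATCHES
                  if b in classes[a] and not seem_same(a, b)]
print(seem_same("#1", "#2"), seem_same("#2", "#3"), seem_same("#1", "#3"))  # True True False
print(contradictions)  # [('#1', '#3'), ('#3', '#1')]
```

A non-empty list of contradictions is the toy counterpart of the conclusion that the three premises cannot all hold.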

One possible conclusion, which Armstrong and Dummett drew, was that appearances (or sense data/sensory items) don’t exist. Jackson and Pinkerton objected, and they were followed later by Diana Raffman and Delia Fara (whom we very sadly lost last summer). The objection was that how things appear depends on the context. When compared with patch #1, patch #2 may look different from how it looks when compared with patch #3. This gets us out of applying the transitivity of identity, and we have avoided the contradiction.

I objected to this contextualist counter-move. To simplify somewhat, I argued that the problem is not avoided, only shifted. The reason is simply this: perhaps the appearance of #2 changes between the comparison with #1 and the comparison with #3, but it seems not to change, and by the principle that appearances are what they seem, it doesn’t change. The same goes for the other contextual changes. But this means we can set up a new chain of identities, a little more complicated by taking the time of appearance into account, but still leading to a contradiction, of essentially the same kind as before.

We are therefore still saddled with the original problem: either definite appearance properties don’t exist, or they are not invariably what they seem.

3:AM: How does domain restriction save ordinary usage from vagueness and the sorites? You have argued that with a bit of work tolerance, bivalence and consistency can all be retained. How can this be done?

PP: Let me first emphasize that the aim of my theory differs from that of most theories of vagueness, including all mainstream theories. Unlike other theories, mine is not aimed at solving the sorites paradox, i.e. at showing that, contrary to appearances, the paradox does not arise. Rather, the aim is to show how we can live with tolerance and thereby with the risk of ending up with a contradiction. As long as we aren’t forced by contextual factors to reckon with more than what the semantics can handle, tolerance, bivalence, and consistency hold. And when context forces us into a contradiction, the result, on my view, is that interpretation gives out; that is, there is no longer any contextual semantic interpretation that satisfies all requirements.

That said, let me sketch the ideas. The first observation is that standard formulations of tolerance principles, such as

(3) If a man of x + 1 mm in height is tall, then a man of x mm in height is tall as well.

are ambiguous between two different readings in predicate logic, which are not equivalent to each other. They are roughly as follows:

(4) a. If there is a man who is at most x + 1 mm in height and who is tall, then any man who is at least x mm in height is tall as well.

b. If any man who is at least x + 1 mm in height is tall, then any man who is at least x mm in height is tall as well.

Now, it turns out that (4a) is the right way of stating tolerance and (4b) is wrong. To see why the second is wrong, consider a scenario where all men are either at least 180 cm and tall, or at most 170 cm and not tall. Then consider Sven, who is exactly 170 cm tall. Anyone who is at least 1 mm taller than Sven is tall, since anyone who is at least 1 mm taller than Sven is in fact at least 10 cm taller than Sven. So, the antecedent (left hand part) of (4b) is true for x = 1700. But the consequent (right hand part) is false, for Sven himself is not tall. So, (4b) is a conditional with a true antecedent and a false consequent, and hence false.

(4a), on the other hand, passes as true. It is clearly true for x ≥ 1800. Assume, to go on, that Aidan is 180 cm tall. So, for x = 1799, because of Aidan, the antecedent of (4a) is true. But the consequent is true as well, because anyone at least 1799 mm tall is at least 1800 mm, and hence tall. For x = 1798, the antecedent is false (nobody is at most 1799 mm and tall), and therefore the conditional is true, and the same goes for x < 1798. Hence, (4a) is true throughout.

The next crucial observation is that (4a) does not lead to contradiction in this domain. We do get the intermediate conclusion that anyone at least 1799 mm in height is tall, but we can’t go from there to the next conclusion that anyone at least 1798 mm in height is tall. Because there is nobody of height 1799 mm in the domain, and hence nobody who is at most 1799 mm and tall, the antecedent of (4a) for x = 1798 is false, and we cannot apply modus ponens to reach the conclusion that anyone at least 1798 mm in height is tall. The chain of inferences stops there.
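The contrast between the two readings, and the point where the chain of inferences stops, can be checked mechanically. What follows is a minimal Python sketch under stated assumptions: the extra men Olle and Karl and their heights are hypothetical additions to the scenario, tallness is stipulated to coincide with being at least 1800 mm, and `tolerance_4a`, `tolerance_4b` and `sorites_chain` are illustrative helpers, not part of Pagin's own formulation.

```python
# Heights in mm. Hypothetical domain matching the scenario: every man is
# either at least 1800 mm and tall, or at most 1700 mm and not tall.
DOMAIN = {"Sven": 1700, "Aidan": 1800, "Olle": 1650, "Karl": 1850}


def tall(height: int) -> bool:
    # In this scenario tallness coincides with being at least 1800 mm.
    return height >= 1800


def tolerance_4a(x: int) -> bool:
    """(4a): if someone at most x + 1 mm is tall, everyone at least x mm is tall."""
    antecedent = any(h <= x + 1 and tall(h) for h in DOMAIN.values())
    consequent = all(tall(h) for h in DOMAIN.values() if h >= x)
    return (not antecedent) or consequent


def tolerance_4b(x: int) -> bool:
    """(4b): if everyone at least x + 1 mm is tall, everyone at least x mm is tall."""
    antecedent = all(tall(h) for h in DOMAIN.values() if h >= x + 1)
    consequent = all(tall(h) for h in DOMAIN.values() if h >= x)
    return (not antecedent) or consequent


def sorites_chain(witness_height: int) -> int:
    """Apply (4a) by modus ponens, starting from one man known to be tall.

    Returns the lowest x for which 'everyone at least x mm is tall' is derived.
    """
    # (4a) at x = h - 1: the witness is at most x + 1 mm and tall.
    x = witness_height - 1
    # To apply (4a) at x - 1 we need someone at most x mm who is derivably
    # tall; the previous conclusion only covers heights >= x, so the only
    # usable witness is someone of height exactly x.
    while any(h == x for h in DOMAIN.values()):
        x -= 1
    return x


print(tolerance_4b(1700))                               # False: Sven refutes it
print(all(tolerance_4a(x) for x in range(1500, 2001)))  # True
print(sorites_chain(DOMAIN["Aidan"]))                   # 1799: the chain stops
```

The chain halts at 1799 only because no one in this hypothetical domain is exactly 1799 mm tall; the sketch says nothing by itself about domains without such a gap.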

What I have exemplified now is that in cases where there is a “gap” in a scale, such as that of heights in mm, that is, a segment where the values of the scale aren’t instantiated in the domain in question, and that gap is larger than the tolerance level (1 mm in the example), then the tolerance principle is true but no contradiction results.

Ok, fine, but what about the cases where there is no such gap? What I have suggested is a contextual semantics that introduces such a gap in the interpretation. This is meant as a semantic model of speaker psychology. When I tell you e.g. that “Max is tall”, I take it that Max has a height above some threshold for being tall, and that there is some threshold below which people aren’t tall, but we don’t have to bother with the exact boundaries. We just disregard a problematic middle area. It doesn’t matter for the tallness verdicts I am passing at the moment, concerning Max and perhaps a couple of others.

Suppose that, as I think we in fact do, we use vague predicates like that. How is this practice modeled in the semantics? It is modeled by means of domain restriction. The domain of individuals we are talking about in a particular conversational context is restricted by subtracting from it (in the set subtraction sense) a subset of individuals with scale values in some middle segment of the scale. For instance, if you tell me that Max is tall, I could subtract from the domain of adult males all those with heights between 170 cm and 180 cm. This means that I interpret the utterance by means of the assumption that such men simply are not in the domain we are talking about. As regards the semantics, it is as if they did not exist.

Once such a restriction in the semantics is in place, a tolerance principle, such as (4a), can be accepted and yet not lead to a contradiction. We have consistency and we have tolerance. We also have bivalence, for anyone in the domain is either above the gap in height, and hence the predicate is true of him, or else below, and then it is false of him. Placing the gap in the scale, such as between 170 cm and 180 cm, is part of the contextual semantics. Where the gap is located is arbitrary within certain boundaries.

This condition reveals that the combination of tolerance, consistency, and bivalence has its dangers. Bivalence (in a non-free logic) requires that terms refer. So if the proper name of a man, say ‘Bill’, is used in a particular context where height is discussed, the sentence ‘Bill is tall’ must have a truth value. This means that the height of Bill, e.g. 176 cm, cannot be in the gap of the height scale. The boundaries of the gap must be so fixed that 176 cm is either below or above. As a consequence, if more and more people are mentioned in one and the same context, the location of the gap, and the size of the gap, can come under increasing pressure. In the worst case scenario, there is no place left on the height scale where a sufficiently large gap can be located so as to simultaneously preserve tolerance, consistency, and bivalence. In that case, no acceptable contextual semantics is available. Interpretation gives out.
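The placement of the gap, and the way mentioned names can crowd it out, can be sketched as a small search problem. This Python sketch rests on hypothetical assumptions: the gap must fall within a band between 1600 and 1900 mm and must exclude at least `min_width` millimetre values, both stand-ins for whatever the context actually fixes. `find_gap` returns the lowest free stretch it finds; any sufficiently wide sub-interval would serve equally well, which mirrors the arbitrariness of gap location. A return value of `None` models interpretation giving out.

```python
from typing import Dict, Iterable, Optional, Tuple

# A gap is an inclusive range (lo, hi) of heights in mm that the context
# subtracts from the domain.
Gap = Tuple[int, int]


def find_gap(
    mentioned: Iterable[int],
    band: Tuple[int, int] = (1600, 1900),  # hypothetical admissible region
    min_width: int = 50,  # hypothetical minimum number of excluded mm values
) -> Optional[Gap]:
    """Return a gap containing no mentioned height, or None if none fits.

    None models the case where interpretation gives out.
    """
    band_lo, band_hi = band
    # Heights of named individuals act as fences: for bivalence, none of
    # them may fall inside the gap.
    fences = sorted({h for h in mentioned if band_lo <= h <= band_hi})
    bounds = [band_lo - 1] + fences + [band_hi + 1]
    for left, right in zip(bounds, bounds[1:]):
        lo, hi = left + 1, right - 1  # free stretch between adjacent fences
        if hi - lo + 1 >= min_width:
            return (lo, hi)
    return None


def interpret_tall(domain: Dict[str, int], gap: Gap) -> Dict[str, bool]:
    """Subtract everyone inside the gap; 'tall' is bivalent on the rest."""
    lo, hi = gap
    restricted = {name: h for name, h in domain.items() if not lo <= h <= hi}
    return {name: h > hi for name, h in restricted.items()}


# Hypothetical conversational context.
MEN = {"Max": 1850, "Bill": 1760, "Sven": 1650}
gap = find_gap(MEN.values(), min_width=60)
assert gap is not None
print(gap, interpret_tall(MEN, gap))

# Mentioning a man every 40 mm across the band leaves no 50-value stretch free.
print(find_gap(range(1600, 1901, 40)))  # None: interpretation gives out
```

In this context the gap lands at (1651, 1759), so Bill at 1760 mm falls just above it and every named man receives a truth value; naming men densely enough across the band leaves no admissible place for the gap.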

These are the main ideas of the account. I would deem it successful only if it very rarely happens that interpretation gives out in this way (whether we notice it or not). I believe this condition is met. That is not the only challenge for the theory, but if we think that the tolerance intuition is strong and respectable, as I do, we need to come up with some theory that explains how we can use vague language without all the time ending up in contradiction.

3:AM: Moore’s paradox is another of those paradoxes that throw up interesting insights into language and thinking. How do you understand the paradox (Williamson I think says that it isn’t strictly a paradox because it isn’t strictly a contradiction) and what do you think it shows us about informativeness?

PP: On the currently dominant understanding, a paradox is an argument that leads from intuitively true premises by intuitively correct inferences to an intuitively false conclusion. Moore’s paradox is not obviously like that. Rather, we have intuitively absurd statements, like

(5) a. It is raining but I don’t believe it is raining.

b. It is raining but I believe it isn’t raining.

What is paradoxical is that these statements, although intuitively absurd, may well be true. That is not a paradox in the sense above. However, if you add the intuitively true premise that what is absurd is false, you do get a contradiction. On my view, Moore’s paradox is truly paradoxical because of this. It is common to refer to this particular brand of oddity as “Moorean absurdity”.

The dominant type of view regarding Moore’s paradox is that we can account for Moorean absurdity by showing that the assumption of the truth of a Moorean statement, together with plausible principles concerning belief, that is, with principles of doxastic logic, leads to a contradiction. This approach to the paradox is due to Jaakko Hintikka (1962). One consequence of the approach is that one sees little or no difference between Moorean utterances and Moorean thoughts: thinking (5a) is deemed as problematic as asserting it. It is even considered a condition of adequacy of an account of the paradox that it be equally applicable to utterances and to thoughts.

I think the entire approach is misguided. It is in fact easy to see that it at the very least faces an explanatory burden. Why would the fact that you can derive a contradiction from a statement p together with a set of plausible other statements have the effect of rendering p absurd? A statement isn’t normally absurd just because it is false. It isn’t normally absurd, except in the sense of being false, because of being inconsistent with plausible principles and facts. And it isn’t normally absurd by being inconsistent except in the very sense of being inconsistent. If Moorean absurdity doesn’t normally pertain to a proposition just because it is inconsistent, or inconsistent with some plausible principles, then we certainly haven’t explained why Moorean propositions are absurd by deriving a contradiction from them by appeal to plausible principles. I think the dominant approach simply does not deliver.

A Moorean statement does have an absurdity that is pretty peculiar. If someone were to assert (5a) to you, you would feel like the rug was pulled away from under your feet. You start believing that it is raining, and then you learn something that may be true, and doesn’t contradict the rain but takes away your basis for believing it. That, in my view, is the phenomenon we should account for. The standard approach doesn’t. That said, believing a Moorean proposition is indeed incoherent, and I do think that the main current approach to Moore’s paradox, the doxastic logic approach, is apt for the thought version. However, what strikes you as absurd in Moorean statements, from a hearer’s perspective, is not that it is incoherent of the speaker to think or say it. If it were so, since incoherence is so frequent, we would experience Moorean absurdity pretty much every day.

I first started thinking about Moore’s paradox while working on my dissertation and, in the context of discussing Dummett’s views, writing about assertion. It struck me then that Moore’s paradox could be seen as a condition of adequacy for theories of assertion: any theory of assertion that renders Moorean statements non-paradoxical is false. Years later, I pursued this idea. I found out that the main argument could be made without reference to Moore’s paradox. The paper, a criticism of (what I call) social theories of assertion, was published in 2004.

A few years after that, and after finishing the first edition of the Stanford Encyclopedia entry on assertion, I returned to the relation between assertion and Moore’s paradox, and it now occurred to me that the paradox does have a direct connection with the nature of assertion. On my view of assertion, briefly explained at the end of the reply to question 5 above, an assertion is prima facie made partly because it is true. Therefore, the speaker’s utterance prima facie gives the hearer a reason to believe what the speaker says.

The standard connection between the truth of what is said and the saying is the speaker’s belief: it is because your belief reflects the facts that your utterance reflects the facts. Then, if you deny that you believe (a conjunct of) what you assert, I, as a hearer, see the basis for believing what you assert removed. But when the basis is removed, the utterance is no longer prima facie informative. In making a Moorean statement, the very content is such that if it is true, the hearer loses the default reason for believing it. This, I think, is what the Moorean absurdity consists in. And on the account of assertion I have proposed, once the content of the utterance has been grasped by the hearer, the assertoric force itself is removed. Assertions are prima facie informative, and Moorean content cannot (in normal scenarios) be informative; hence Moorean statements cannot (in normal circumstances) be asserted, despite being possibly true. I think that is paradoxical enough.

3:AM: Another paradoxical-sounding question: can we intend to be misinterpreted? Is this an example of an acceptable contradiction, and is this an issue for pragmatics or semantics?

PP: I considered the question in a comment on a paper by Petr Kotatko in The Aristotelian Society. Kotatko was arguing against Davidson’s claim that if the speaker intends to say that p by means of an utterance, then she also intends and expects to be interpreted by the hearer as saying that p.

Kotatko constructed an alleged counterexample to this, where, he claimed, the speaker does intend to be misinterpreted. The case was one where the speaker intends a term to have its customary meaning but believes (and hopes) that the hearer has a false belief about what that meaning is. I objected to Kotatko that the speaker had a primary intention that the term be used with its standard meaning, and that she also intended the hearer to interpret the utterance as having its standard meaning, in agreement with the speaker; the difference would only arise in the secondary intention/expectation that depended on what speaker and hearer believe about the standard meaning. So, in my opinion, the proposed counterexample doesn’t work.

Kotatko has later defended it against my objection. He rejects the primary/secondary distinction in this case, claiming that his example is not an example of deferring to the standard meaning (I think that it is).

I also briefly discussed the possibility for the speaker to intend a misalignment of values of indexicals. The example was ‘now’, as contextually ambiguous between the time of writing and the time of reading. I argued that this is not, in normal circumstances, psychologically possible, in the sense that the speaker cannot have two different times simultaneously in mind in the production of the token of ‘now’. Something unusual that I thought is possible is to intend a later utterance to be misinterpreted, where that intention is independent of the later intention with which the utterance is eventually made. But that is not paradoxical at all.

3:AM: What’s the interest in the statement ‘Vulcan might have existed, and Neptune not’? Why has the problem of empty names proved so difficult and do you have any idea how best it might be solved?

PP: This is the title of a paper co-authored with Kathrin Glüer, and part of our Switcher Semantics project (now a book in the making). We had earlier, in a 2006 paper, applied the Switcher Semantic ideas to proper names in modal contexts, in order to account for the modal intuitions that Kripke marshaled for his rigidity thesis, while at the same time allowing names to have a non-rigid intension. The trick was to let names refer to their actual world referent in modal contexts, but not outside. Now we wanted to apply the same technique to the case of empty names.

The problem is difficult, because we have a strong intuition that sentences, at least simple sentences, containing a proper name cannot be true unless the name has a referent. But we also have the intuition that negative existentials such as

(6) Zeus doesn’t exist.

are true, which requires the name ‘Zeus’ not to have a referent.

That is difficult enough, but it gets even more difficult when taking modality into account as well. The sentences

(7) a. Vulcan might have existed.

b. Neptune might not have existed.

are both plausibly true. Neptune (correctly postulated by Le Verrier) seemingly could have failed to exist (there might not have been a planet causing the perturbations of the orbit of Uranus). But in standard semantics, the truth of a modal sentence such as (7b) depends on the truth of the embedded sentence (‘Neptune does not exist’) with respect to some possible world, and then we are back to the normal problem of negative existentials.

What we did to solve these modal puzzles was to introduce a hidden linguistic operator that switched the interpretation of the name to the world closest to the actual world where the name does refer (or closest worlds, in case there is more than one and the truth value is the same in all of them). So, (7a) is true if there are any (closest) worlds where ‘Vulcan’ refers. And (7b) is true just in case there is a world where the actual referent of ‘Neptune’ (since the actual world itself is the closest world) does not exist. And, applying the same idea for non-modal sentences, (6) is true if there is a closest world where ‘Zeus’ refers to an individual, and that individual does not actually exist.
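A toy model may make these truth conditions easier to follow. The Python sketch below is a hypothetical approximation of the truth conditions as stated here, not Glüer and Pagin's formal Switcher Semantics: the worlds `w0` to `w2`, their closeness ordering and the existence and reference facts are all invented for illustration, and ties between equally close worlds are ignored by always taking the single closest world where a name refers.

```python
from typing import Dict, List, Optional, Set

# Hypothetical worlds, ordered by closeness to the actual world w0
# (the actual world is closest to itself).
WORLDS: List[str] = ["w0", "w1", "w2"]

# Hypothetical existence facts: in w1 there is no Neptune but there is a
# planet Vulcan; in w2 Zeus exists as well.
EXISTS: Dict[str, Set[str]] = {
    "w0": {"neptune", "uranus"},
    "w1": {"uranus", "vulcan"},
    "w2": {"neptune", "uranus", "zeus"},
}

# What each name refers to at each world where it refers at all.
REFERS: Dict[str, Dict[str, str]] = {
    "Neptune": {"w0": "neptune", "w2": "neptune"},
    "Vulcan": {"w1": "vulcan"},
    "Zeus": {"w2": "zeus"},
}


def switched_referent(name: str) -> Optional[str]:
    """The hidden switch: referent of `name` at the closest world where it refers."""
    for world in WORLDS:  # visited in order of closeness
        if world in REFERS.get(name, {}):
            return REFERS[name][world]
    return None


def might_have_existed(name: str) -> bool:
    # (7a): true if there is some (closest) world where the name refers.
    return switched_referent(name) is not None


def might_not_have_existed(name: str) -> bool:
    # (7b): true if some world lacks the switched referent; for 'Neptune'
    # that is its actual referent, since w0 is the closest world.
    ref = switched_referent(name)
    return ref is not None and any(ref not in EXISTS[w] for w in WORLDS)


def does_not_exist(name: str) -> bool:
    # (6): true if the name refers at a closest world and that individual
    # does not exist in the actual world.
    ref = switched_referent(name)
    return ref is not None and ref not in EXISTS["w0"]


print(might_have_existed("Vulcan"))       # True
print(might_not_have_existed("Neptune"))  # True
print(does_not_exist("Zeus"))             # True
print(does_not_exist("Neptune"))          # False
```

Note that `does_not_exist` consults a non-actual world even though (6) contains no modal operator, which is the cross-world reference the next paragraph describes as the price of the theory.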

The theory delivers the intuitively correct truth values, but at the expense of relying on cross-world reference even in non-modal sentences. Some might not agree that this is a price worth paying.

3:AM: Finally, a left field question: AI is clearly interested in getting machines to use language and Williamson said that one of the reasons why vagueness, for example, was so important was that it was something a good language user has to be able to use. So is handling sentences like ‘If Pedro owns a donkey, he beats it.’ AI experts are now getting AI cars to learn how to drive without the humans understanding the algorithms they’re using to do that. Is it possible that we might be able to get machines to learn language use – including the use of vagueness and so on – but the machine solutions may be inaccessible because too complicated for people to understand? Do you think this possible and do you think this makes philosophy of language more or less important?

PP: Let me focus on two aspects of this question. They both have to do with what we can learn about the nature of language from individuals or systems that are unlike normal speakers. The first concerns those that have limited capacities. In 2003 Kathrin Glüer and I published a paper that based philosophical conclusions on the existence of speakers who have a good syntactic-semantic capacity but poor pragmatic abilities and who apparently have limited mentalizing abilities. The ones we were concerned with are speakers with autism, with a verbal mental age of about 11 years, who did not pass so-called false-belief tests. At the time, it was pretty standard to take failing a false-belief test to show that the subject does not understand what belief is, since they don’t seem to understand that people can have false beliefs. That seemed right to us (but it is controversial, and the controversy seems to have deepened in the years after the paper was published). We concluded that the Gricean approach to natural language meaning, which involves complicated higher-order communicative intentions, typically about the beliefs of the hearer, must be empirically false. The speakers in question clearly make and understand assertions, ask and answer questions, and so on. So, meaning non-naturally, in Grice’s 1957 sense, seemed not to be needed for being a natural language speaker. Some people objected that these speakers were not fully or genuinely users of natural language, but we took this to be a move that rendered the Gricean theory unfalsifiable.

Now, I think it quite possible that we could learn something analogous from an artificial system. Suppose we can get a computer to seemingly master a sufficiently large fragment of a natural language, and at the same time definitively lack certain behavioral abilities that we deem essential to some particular cognitive or emotional capacity, then indeed this would be strong evidence that this capacity is not needed for being a natural language speaker. We are not yet there. For instance, the state of machine translation, as far as I know, does not yet support the conclusion that the translating systems have any sort of understanding of what is translated.

The other aspect concerns what to conclude if we really were to construct a system good enough to pass some reasonable Turing tests. Suppose, as you suggest, that we wouldn’t understand how it worked, because the application of the algorithms was too complicated to follow. This is, I have been told, how it presently is with connectionist deep learning algorithms. We would then be in a situation somewhat analogous to the situation we are in with respect to human speakers, since we don’t understand very well how human speakers/hearers function.

My guess is that in such a situation, we might very well think that we could gain more theoretical knowledge by simply managing to understand more of the working of the implementation of the algorithm. And perhaps we would not be sure whether the system worked so well because of carrying out the algorithm or because of deviating from it. There is no reason to think that philosophy would play no role in such a development. On the contrary, I think its role would be quite central.

It is also possible, as some have claimed about our ability to solve the mind-body problem, that we would conclude that we lack the capacities to understand how the system works, except for the very basics. Somehow, deep in the complex processes, something seemingly magical happens, and we might despair of ever being able to understand what or how. We might react that way, but I don’t think we would. We have such a strong drive to increase our knowledge and understanding. And who knows, after all, maybe the answer we are looking for is just around the corner.

3:AM: And for the readers here at 3:AM, are there five books you could recommend to take us further into your philosophical world?

PP: I shall mention five books that were crucial to me during the formative years of my time as a PhD student and shortly after. It is not a very original list:

– Frege’s philosophical writings, e.g. as in Translations from the Philosophical Writings of Gottlob Frege.

– Quine’s Word and Object

– Davidson’s Inquiries into Truth and Interpretation

– Dummett’s Frege: Philosophy of Language

– Kripke’s Naming and Necessity

Let me add just one paper to this list, an amazing paper that I kept re-reading as a student and young researcher and recently returned to again, still finding it incredibly good: Dag Prawitz’s ‘Meanings and Proofs: On the Conflict Between Classical and Intuitionistic Logic’.

ABOUT THE INTERVIEWER

Richard Marshall is still biding his time.
