Credence: What To Do When We're Not Certain

Interview by Richard Marshall.

Richard Pettigrew's recent research has been mainly in formal epistemology. In recent papers, he has pursued a research programme that he calls epistemic utility theory. The strategy is to appeal to the notion of purely epistemic value as well as to the techniques of rational choice theory to provide novel and purely epistemic justifications for a range of epistemic norms. So far, he has considered the norms of Probabilism, Conditionalization, Jeffrey Conditionalization, the Principle of Indifference, and the Principal Principle. He has a second research interest in the philosophy of mathematics, where he defends a variety of anti-platonist positions, such as eliminativist structuralism and instrumental nominalism. Here he discusses what a credence is, its pedigree, what an irrational credence is, what 'no drop' is, credence and accuracy, omniscient credences and uncentered propositions, the squared Euclidean distance, why we really can't live up to the standards of rationality demanded by this, and what to do, the role of epistemic value, Probabilism, the Principal Principle, Indifference, updating, and understanding accuracy-first epistemology as something of an analogue of hedonic utilitarianism in epistemology. He ends by discussing the problem of how we decide something knowing we’re going to be changed in the future. Roll on...

3:AM: What made you become a philosopher?

Richard Pettigrew:It was my high school English teacher who put me onto the idea. In Scotland, at least when I was young, you could spend your last year of high school studying for what was called a Certificate in Sixth Year Studies (CSYS). You could get these in any subject, and I opted for Maths, Pure Maths, and English. There was a sort of tacit agreement amongst the Scottish universities, at least back then, that any offer of admission they made to a prospective student would not be conditional on the results of the CSYSs; any conditions they placed would be on the results of the previous year’s qualifications. So you studied for these CSYSs purely out of interest, and not because of any leg up that it would give you into university. As a result, the classes were less focussed on the final exams or course work, and involved lengthy discussion periods; in fact, looking back, they were a perfect preparation for university. This was particularly true in my English class, since only two of us were taking the course. I think it was in these discussions that my English teacher and I found a mutual love for questioning assumptions, picking apart arguments, calling out nonsense. We remained close friends for a long time after I left school. Maybe to encourage me, maybe to provide an outlet for my questioning, she gave me a copy of Jostein Gaarder’s book, Sophie’s World, which includes a strange plot device that allows him to include long expository passages on the history of Western philosophy. I looked back at the book recently, and I didn’t recognise anything about it. But I remember clearly that it made a huge impression on me at the time and made me determined to study philosophy. I’d been trying for months to decide what to apply to study at university: first, piano performance; then acting; then English literature, art history, or mathematics. 
But when I learned about philosophy, that settled it, not least because it seemed to allow you to think about literature or art or theatre or mathematics as part of the subject.

I think I would have studied philosophy only — in the UK, you decide your subject of study, or major, before you start on a degree programme — but I had my heart set on going to Oxford, having watched too much of the Alec Guinness production of Tinker, Tailor, Soldier, Spy, and having read too much Evelyn Waugh, and Oxford didn’t and doesn’t offer philosophy as a single subject, only as part of a pair or trio. So I applied to study mathematics and philosophy. No-one from my school had applied to Oxford or Cambridge before, and so I had no idea which college I should try, and the open day had just left me wanting to live in all of them. So I submitted what they called an open application, and the computer assigned me to Brasenose College, which is where I ended up — and, although that college is a rowing and rugby college with a tendency to skew right on political matters, and I was a sports-phobic leftie, it was just as exciting and wonderful as I had imagined. Since then, I’ve sort of oscillated between being a mathematician (my PhD is in Mathematical Logic, looking at the relationship between very weak theories of arithmetic and equally weak set theories) and being a philosopher (my Masters programme was in philosophy, and my current position is in the philosophy department at Bristol). And now I seem to have settled on working on topics that sit somewhere in between the two subjects.

3:AM: You’re an expert in laws of credence. As we start, perhaps you could briefly introduce this field and clarify what we mean when we talk about the philosophical terms credences, degrees of belief and doxastic states. What are they, what issues have they emerged from and what’s at stake in all this?

RP:The concept of credence is very ancient and very widespread. It is just the concept of partial or graded belief. So a credence is a degree of belief in a proposition; it is a level of confidence in that proposition. So, when I say that I’m pretty sure I locked my front door when I left the house this morning, but I’m not certain, I’m reporting a credence. I’m reporting that I have a high credence that I locked my door, but not the highest credence possible, since the highest credence possible amounts to certainty. Similarly, when I say it’s as likely as not that Ngugi will win next year’s Nobel Prize for Literature, and about time, I’m saying that my credence that Ngugi will win is the same as my credence that he’ll lose. And again when I say I’m 95% confident it will rain tomorrow. So credences are graded doxastic states that a subject like you or me or Ngugi or a robot can be in. They are doxastic states because, like our beliefs and unlike our desires, we use them to represent the way the world is. And they are graded because they come in degrees: I can have high credence it will rain tomorrow, low credence, middling credence, quite high credence, vanishingly small credence, and so on.

The notion of partial belief is very ancient and very widespread. Think of Carneades’ appeal to probable impressions in his fallibilist epistemology, or Cicero’s declaration that he does not proclaim the truth, like a Pythian priestess, but rather conjectures what is probable. We find it in the Ayurvedic writings of Charaka, who appeals to a notion of credence or probable belief to give an account of how we should make medical decisions in the absence of certainty either about the cause of the illness or about effective treatments for it; what is known as yukti in Indian philosophy. And again, it occurs in Albert of Cologne, who attributes it to Islamic philosophers and theologians, though he doesn’t identify them. The notion plays a significant role in Locke’s Essay (Book IV, Chapters XV-XVI) and a starring role in Hume’s celebrated sceptical arguments concerning causation, induction, and testimonial evidence of miracles (Treatise 1.3). It occurs less prominently in Descartes and Kant, since their concern is with certain knowledge, and mere probable belief is a temptation from which they wish to save us. In more recent times, starting in the 17th century in Europe, and as a result of Fermat’s and Pascal’s mathematical interest in the theory of gambling, the notion has been studied more formally, culminating in a sort of golden era during the previous century, which saw the development of Bayesian statistics, the theory of decision making under uncertainty, and formal epistemology. Credences — our modern notion of partial or probable belief — are now used systematically in all areas of science; they guide rational action; and they allow us to give nuanced accounts of our doxastic attitudes that are missing when we merely report what it is that we assent to or believe.

Typically, but not always, my credence in a proposition is measured using a real number at least 0 and at most 1. Credence 1 is maximal credence; credence 0 is minimal credence. If I am 95% confident it will rain, I have a credence of 0.95 that it will rain. And so on. That’s the model that I use in the book. But others model credences not as single numerical values but as ranges of values. These are called ‘imprecise probabilities’ and they capture something that the ancients thought about credences, namely, that they are sometimes incomparable — my credence that Jeremy Corbyn’s Labour Party will win the next UK general election is neither greater than, less than, nor equal to my credence that Elizabeth Warren will win the next US presidential election.

3:AM: Before you explain how you think we should understand irrational credences could you give an example of one?

RP: Here are a few:

I’m 90% confident that it will rain tomorrow, and 50% confident that it will be dry.

I know for sure that the coin in my hand is a fair one, as likely to land heads as to land tails. I’m 5% confident it will land heads and 95% confident it will land tails.

You tell me you’ve got two new dogs, Dibble and Dobble, one is black, the other brown. You tell me nothing about which is which. I am 99% confident that Dibble is black and Dobble is brown.

3:AM: OK. So, what has the notion of ‘no drop’ to do with laws of credence, and what makes it irrational to have a credence function that violates no drop?

RP: Suppose I know you’ve just picked a number below 5, so either 1, 2, 3, or 4. And suppose my credence that your number is below 3 is greater than my credence that your number is below 4. That is, I’m more confident that your number is 1 or 2 than I am that your number is 1, 2, or 3. Then I’ve violated No Drop. I’m more confident in a proposition than in one of its logical consequences. That’s just what No Drop says: don’t be more confident in one proposition than you are in any other that it entails.

So No Drop is a principle connecting logical facts with normative claims about credence. It’s the sort of thing that John MacFarlane calls a bridge principle when he’s asking whether or not the laws of logic are normative for thought. It’s a conditional with a logical condition in the antecedent (A entails B) and a normative claim about credences in the consequent (your credence in A shouldn’t exceed your credence in B). In fact, it seems to be the bridge principle that nearly everyone agrees upon. In Joe Halpern’s wonderful book Reasoning About Uncertainty, he considers a bunch of different views about the structure of rational credences. Some of them are really very different from the orthodox Bayesian picture that I defend in the book. But they all agree at least on No Drop!

How do we justify No Drop? Well, interestingly, people often feel it’s just obvious once it’s understood. If A entails B, then the set of possible worlds at which A is true is contained in the set of worlds at which B is true. So the latter set is at least as large as the former. So the credence assigned to the latter must be at least as great as the credence assigned to the former. Or so they claim. They often draw out a Venn diagram to illustrate this. But notice that this argument depends on a strong assumption, namely, that we obtain the credence in a proposition by adding up the credences in the worlds at which it’s true. And actually that assumption is stronger than No Drop itself — in fact, it’s very close to Probabilism. So we need a different justification.

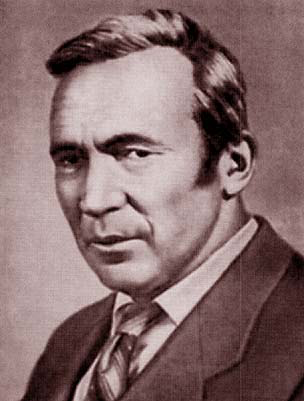

[Bruno de Finetti]

Enter Frank Ramsey, Bruno de Finetti, and their so-called Dutch book argument — better, the sure loss argument, since this doesn’t cast aspersions on the Dutch. Suppose I have higher credence that your number is 1 or 2 than I have that it is 1 or 2 or 3. Then think about the following two bets: the first pays me £1 if your number is 1 or 2 and pays me £0 otherwise; the second loses me £1 if your number is 1 or 2 or 3, and loses me £0 otherwise. Then, I’ll be willing to pay more to participate in the first bet than I will need to be paid to participate in the second bet. So, at the start, before the bets pay out, I’ll have paid out more than I’ve been paid. Now, suppose your number is 1 or 2. Then I receive £1 from the first bet and lose £1 from the second; so overall, including the money I paid and received to participate, I’m still down. Next, suppose your number is 3. Then I receive £0 from the first bet and lose £1 on the second; so overall, I’m down again. Finally, suppose your number is 4. Then I receive £0 from the first bet and lose £0 from the second; so overall, again, I’m down. So, as a result of my credences, I’ve fixed my prices for participating in a series of bets so that, at the end of it all, I’m guaranteed to be down. That is, I suffer a sure loss — I could have known ahead of time that I would lose. And it’s my No Drop-violating credences that have led me to this sorry state of affairs. So they must be irrational. This is the sure loss argument for No Drop.
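The bookkeeping in the sure loss argument can be checked with a small sketch. The specific credences (60% that the number is 1 or 2, 40% that it is 1, 2, or 3) and the function name `net_payoff` are illustrative assumptions, as is the standard simplification that a credence of x fixes a price of £x for a £1 bet.

```python
def net_payoff(number, cred_1_or_2, cred_1_2_or_3):
    """Net gain in pounds after taking both bets, for a given chosen number."""
    # Assumption: I pay my credence (in pounds) to enter the first bet,
    # and I am paid my credence (in pounds) to enter the second bet.
    upfront = -cred_1_or_2 + cred_1_2_or_3
    # First bet: pays me 1 pound if the number is 1 or 2, nothing otherwise.
    first_bet = 1.0 if number in (1, 2) else 0.0
    # Second bet: loses me 1 pound if the number is 1, 2, or 3, nothing otherwise.
    second_bet = -1.0 if number in (1, 2, 3) else 0.0
    return upfront + first_bet + second_bet


# Credences that violate No Drop: more confident in "1 or 2" than in "1, 2, or 3".
payoffs = {n: net_payoff(n, 0.6, 0.4) for n in (1, 2, 3, 4)}
for number, payoff in payoffs.items():
    print(f"number = {number}: net payoff = {payoff:+.2f}")
```

Under these assumed prices the net payoff is negative whichever number was picked, which is the sure loss. Swapping the two credences, so that No Drop is respected, removes the guarantee of loss.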

Is the sure loss argument satisfactory? It certainly identifies a flaw in credences that violate No Drop. As I see it, our credences play at least two roles in our lives (I’ll mention a third later on). They guide our actions and they represent the world. Now, the sure loss argument shows that credences that violate No Drop play the former role badly — they guide our actions badly; they lead us into betting behaviour that results in certain loss. But it says nothing about how well those credences represent the world. And it seems to me that that role is the more fundamental. We appeal to our credences to guide our actions precisely because we know that the outcomes of our actions depend on how the world is, and so we want to consult our representation of how the world is when we decide which actions to take. So, while the sure loss argument might show that credences that violate No Drop are irrational in some sense, it fails to identify what is wrong with those credences as representations of how the world is.

Enter Jim Joyce and his accuracy-dominance argument for No Drop (and, indeed, for Probabilism more generally). According to this, credences can be more or less accurate as representations of how the world is. Joyce identifies ways to measure this accuracy, which we’ll meet below, and then he shows something remarkable: if your credences violate No Drop, then there are alternative credences in the same propositions that are guaranteed to be more accurate than yours; that is, there are credences that are guaranteed to represent the world better than yours. And this, he claims, renders your own credences irrational. And notice, it shows that your credences are irrational by showing that they are guaranteed to play the role of representing the world suboptimally.

3:AM: If credences aren’t true or false then how is one more accurate than another?

RP:I’ve been discussing this with Scott Sturgeon recently, and it’s made me rather regret using the word ‘accuracy’, which I took from Joyce’s initial treatment. You can really spell out the central idea without talking about accuracy. The point is that we would like to measure the purely epistemic value of a credence in a proposition, e.g., your credence of 95% that it’s going to rain tomorrow. On the account I favour, the so-called accuracy-first account of epistemic value, this amounts to saying how good that credence is purely as a representation of the world. In particular, it says that the epistemic value of a credence in a true proposition increases as the credence increases, while the epistemic value of a credence in a false proposition increases as the credence decreases. Put differently, from an epistemic point of view, being more confident in a truth is better than being less confident; and being less confident in a falsehood is better than being more confident. This is sometimes put by saying that, on this account, the epistemic value of a credence is its accuracy. But here ‘accuracy’ is really just a term of art; it isn’t very closely linked with our day-to-day notion of accuracy.

3:AM: How do you understand the notions of omniscient credences and uncentered propositions, and why are they helpful in explaining credence function accuracy?

RP:So I said that, on the accuracy-first view, the epistemic value of a credence in a true proposition increases as the credence increases, while the epistemic value of a credence in a false proposition increases as the credence decreases. But this leaves open lots and lots of ways in which the epistemic value of a credence might be measured. For instance, the rate at which the epistemic value of a credence in a truth increases as the credence increases might be uniform — it might remain the same for the whole gamut of credences. In that case, the epistemic value of a credence increases linearly with the credence. But it might be quite different. For instance, the rate at which the epistemic value of a credence in a truth increases as the credence increases might itself increase linearly with the credence. In that case, the epistemic value of a credence increases in a quadratic manner. And so on. So we need a way to specify which of these different ways of measuring the epistemic value of a credence are legitimate. Joyce tries to characterise these directly, but I’m not so convinced by his solution. In the book, I tried a different tack; an indirect route to characterising measures of purely epistemic value.

The idea is this: the reason we value having higher credence in a truth and lower credence in a falsehood is that the epistemic value of a credence is measured by its proximity to the epistemically ideal credence. So it’s a perfectionist account of value: value is proximity to an ideal. To fill in this account, we need two components: first, I need to tell you what the epistemically ideal credences are; second, I need to give you a measure of distance between assignments of credences so that you can use that to measure how close a given assignment lies to the ideal assignment. The omniscient credences are those that assign maximal credence (100%) to truths and minimal credence (0%) to falsehoods. And the first component of my perfectionist account of epistemic value says that the ideal credences are the omniscient ones. So the reason that we value credences in truths more the higher they are is that they are thereby closer to the ideal credence in those truths, which is 100%. And similarly, the epistemic value of a credence in a falsehood increases as the credence decreases because the credence is thereby moving closer to the ideal credence in that falsehood, which is 0%.

[Frank Ramsey]

3:AM: Ok, so can you try and unpack what the squared Euclidean distance is for the non-technical amongst us and say how it helps explain doxastic irrationality? (Ha, Good luck on that one!!!)

RP:The second component of my perfectionist account of epistemic value says that the distance between two assignments of credences should be measured by squared Euclidean distance. So what is that? The best way to understand it is visually, I think. Let’s suppose our friend Taj has picked a number less than 5, so 1, 2, 3, or 4. You and I only have credences in two propositions, namely, Taj’s number is 1 or 2, and Taj’s number is 1, 2, or 3. Then our credence in each lies between 0% and 100%. So now imagine we’re both standing inside a square room. We’re going to stand in a position in this room that represents our credences in these two propositions. How far between the left and right walls you stand is determined by your credence that Taj’s number is 1 or 2. So, if your credence is 50%, you stand halfway between the left and right walls; if it’s 75%, you stand three-quarters of the way between them; and so on. And how far between the bottom and top walls you stand is determined by your credence that Taj’s number is 1 or 2 or 3. Then the Euclidean distance between my credences and yours is just the standard distance between me and you in the square room. Squared Euclidean distance is then obtained by squaring that.

Now the crucial fact on which the accuracy-dominance argument for No Drop depends is this. First, think of the omniscient credence functions in the case we’re talking about. To specify those, think of the different ways the world might be. First, it might be that Taj’s number is 1 or 2, in which case the omniscient credences are 100% in both propositions; second, it might be that Taj’s number is 3, in which case the omniscient credence in the first is 0% and in the second is 100%; third, it might be that Taj’s number is 4, in which case the omniscient credence in both is 0%. So these omniscient credences stand at three of the corners of the room: top-right, top-left, and bottom-left. Suppose my credence that Taj’s number is 1 or 2 exceeds my credence that it’s 1, 2, or 3. So I violate No Drop. Then it turns out that there is an alternative set of credences I could have had — that is, an alternative position in the room where I could have stood — that is closer to each of the omniscient credences. So, by my perfectionist account, those alternative credences are guaranteed to have greater epistemic value than my credences. What’s more, we can pick this alternative so that it satisfies No Drop.
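The square-room picture can be made concrete with a short sketch. The starting credences (70% and 30%) are an assumed example, and the way the alternative is chosen here (moving straight to the nearest point where the two credences are equal) is one simple construction, not necessarily the one used in the book.

```python
def squared_distance(p, q):
    """Squared Euclidean distance between two positions in the room."""
    return sum((a - b) ** 2 for a, b in zip(p, q))


# Positions are (credence in "1 or 2", credence in "1, 2, or 3").
# The three omniscient positions: number is 1 or 2, number is 3, number is 4.
OMNISCIENT = [(1.0, 1.0), (0.0, 1.0), (0.0, 0.0)]


def no_drop_alternative(credences):
    """Nearest position that satisfies No Drop (first credence <= second)."""
    x, y = credences
    if x <= y:
        return (x, y)  # already satisfies No Drop
    midpoint = (x + y) / 2
    return (midpoint, midpoint)


mine = (0.7, 0.3)  # violates No Drop
better = no_drop_alternative(mine)
for ideal in OMNISCIENT:
    print(ideal,
          round(squared_distance(mine, ideal), 2),
          round(squared_distance(better, ideal), 2))
```

In this example the alternative position is (0.5, 0.5), and it lies closer than (0.7, 0.3) to all three omniscient corners, so it has greater epistemic value however the world turns out.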

3:AM: Can we really expect to live up to the standards of rationality demanded by this? If it’s likely to be beyond most of us most of the time why should we worry about irrationality? It’s too idealistic to be worth worrying about, some might say.

RP: I think this is a very important point, and I’m sorry to say that I don’t address it in my book at all. Fortunately, there are lots of interesting things to say about it. And even more fortunately, the philosopher Julia Staffel and her computer science co-author Glauber de Bona have said a great many of them already (here and here), and Julia is currently drafting a book where she lays them out systematically. I’ve said a little about how to fix up our credences when they’re irrational here.

You’re absolutely right that most of us can’t hope to achieve the sort of rationality that I delineate in the book. If you have credences in a large number of logically-related propositions — and it seems likely that you do — then even the weakest of the norms for which I argue — namely, Probabilism — is very demanding indeed. So the question is urgent. But the resources for answering it are already present in the perfectionist account of epistemic utility that I sketched above. After all, that account includes a measure of the distance from one credence function to another. And, using this, we can define a measure of how irrational your credences are: it is the distance from your credences to the nearest rational credences. So this gives us a measure of irrationality. And Staffel and de Bona show that, if you decrease your irrationality along a direct path from your irrational credences to the nearest rational credences, you pick up some of the advantages of being rational — there are still credence functions that are guaranteed to be better than yours; but you are guaranteed to get better and better as you move closer to them. So we now have a story about the ideal and why it’s the ideal: we should be rational, and the reason is that we wish to maximise our epistemic value. But we also have a story about why we should try to get as close to the ideal as we can, given we’ll often fall short: getting closer in the right way increases your epistemic value for sure.
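A toy version of this graded picture, for the two propositions about Taj’s number only, might look as follows. It treats "rational" as simply "satisfies No Drop", uses squared Euclidean distance, and starts from assumed credences of 90% and 10%; the function names are hypothetical, and this is an illustration of the idea rather than a reconstruction of Staffel and de Bona’s general result.

```python
def squared_distance(p, q):
    return sum((a - b) ** 2 for a, b in zip(p, q))


# (credence in "1 or 2", credence in "1, 2, or 3") at each way the world might be.
OMNISCIENT = [(1.0, 1.0), (0.0, 1.0), (0.0, 0.0)]


def nearest_no_drop(credences):
    """Closest credences with first <= second (assumed stand-in for 'rational')."""
    x, y = credences
    if x <= y:
        return (x, y)
    midpoint = (x + y) / 2
    return (midpoint, midpoint)


def irrationality(credences):
    """Distance from the credences to the nearest No Drop-satisfying credences."""
    return squared_distance(credences, nearest_no_drop(credences))


def step_towards(credences, fraction):
    """Move the given fraction of the way along the direct path to the nearest point."""
    target = nearest_no_drop(credences)
    return tuple(c + fraction * (t - c) for c, t in zip(credences, target))


start = (0.9, 0.1)
path = [step_towards(start, f) for f in (0.0, 0.25, 0.5, 0.75, 1.0)]
for point in path:
    distances = [round(squared_distance(point, w), 2) for w in OMNISCIENT]
    print(round(irrationality(point), 2), distances)
```

Each step along the path lowers the irrationality score and, in this example, also brings the credences closer to every one of the omniscient positions.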

3:AM: What’s the general law of decision theory and what’s the connection between epistemic value and irrationality here? And what’s the link between all this and weather forecasting?

RP:One of the main contentions of the book is that facts about the rationality of credences are determined by facts about their epistemic value. So what I’m offering is what Selim Berker would call a teleological view. Now, we usually think of decision theory as a theory of which actions an agent should choose given her utilities over the possible outcomes of those actions (and, sometimes, also her credences over the states of the world). But it’s really a lot more general than that. It’s really a set of bridge principles that allow you to determine which options of a certain sort are rational given the values of those options at different states of the world. Those options might be actions that an agent could choose, in which case their values at different states of the world would be the utilities of their outcomes in those states of the world. But the options could just as well be scientific theories, in which case their value might be the epistemic value of those theories. Or, as in our case, they might be credences and again their value is their epistemic value.

I don’t really want to say that there is a single general law of decision theory. Rather, there are many laws, and each acts as a bridge principle to turn an account of value into a principle of rationality. Here’s one, for instance, that plays an important role in accuracy-first epistemology: Suppose o is an option; and suppose there is another option o* that is guaranteed to have greater value than o; then o is irrational. This is the principle I call dominance. It’s the principle that Joyce uses in his argument for Probabilism, and it’s the one I adapt and then use in my closely-related argument for Probabilism.

[Kolmogorov]

3:AM: Can you summarise for us your position on Probabilism?

RP:Probabilism is a particular way of extending No Drop beyond the very restricted case in which you have only credences in two propositions, one of which entails the other. Probabilism governs your credences however many you have, so long as there are finitely many of them. It tells you how you should set credences in tautologies and contradictions (maximal and minimal credence, respectively); and it tells you how to set credences in an exclusive disjunction on the basis of your credences in the disjoint disjuncts (it should be the sum of the credences in the disjuncts). It’s a very natural set of laws of credence and it mirrors the laws of probability that govern gambling situations. It’s really the set of principles for credences that Kolmogorov distilled from all of the work on gambling and games of chance that was carried out in the wake of Fermat’s and Pascal’s pioneering work. And nowadays it’s used all over the sciences.

The question I try to answer in the first part of the book is this: what makes it irrational to have credences that violate Probabilism, that is, credences that don’t satisfy Kolmogorov’s axioms for the probability calculus? And the answer is the same as in the case of No Drop: if your credences violate Probabilism, there are alternative credences over the same propositions that are guaranteed to have greater epistemic value than yours; and there are no further credences that are guaranteed to do better than those alternatives. So, for instance, let’s think again about the example I gave above in which I’m 90% confident that it will rain tomorrow, and 50% confident that it will be dry. Then there are credences that I could have had instead of those that are guaranteed to be closer to the ideal — that is, closer to the omniscient credences. In fact, in this case, it turns out that 70% confidence that it will rain and 30% confidence that it will be dry will do the trick.
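The rain example can be checked by hand, assuming distance is measured by squared Euclidean distance as described earlier. Write a pair of credences as (credence in rain, credence in dry), so that the omniscient credences are (1, 0) if it rains and (0, 1) if it stays dry. The notation d(·,·) for the distance is introduced here only for this calculation.

```latex
\begin{align*}
\text{If it rains:}\quad
  d\big((0.9, 0.5), (1, 0)\big) &= (0.9 - 1)^2 + (0.5 - 0)^2 = 0.01 + 0.25 = 0.26,\\
  d\big((0.7, 0.3), (1, 0)\big) &= (0.7 - 1)^2 + (0.3 - 0)^2 = 0.09 + 0.09 = 0.18.\\[4pt]
\text{If it is dry:}\quad
  d\big((0.9, 0.5), (0, 1)\big) &= (0.9 - 0)^2 + (0.5 - 1)^2 = 0.81 + 0.25 = 1.06,\\
  d\big((0.7, 0.3), (0, 1)\big) &= (0.7 - 0)^2 + (0.3 - 1)^2 = 0.49 + 0.49 = 0.98.
\end{align*}
```

Either way, the 70%/30% credences sit closer to the omniscient credences than the 90%/50% credences do (0.18 < 0.26 and 0.98 < 1.06).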

3:AM: You argue for versions of a Principal Principle, don’t you? What’s this?

RP:Probabilism is the most basic law of credence that I treat in the book; and, it seems to me, it is the most basic law of credence there is. The Principal Principle is a further law of credence, and it isn’t so basic. Probabilism tells you how credences in propositions that are logically related to one another should interact; the Principal Principle, on the other hand, tells you how credences in propositions and credences in hypotheses about the chance of those propositions should be related to one another. In brief, it says this: your credence in a proposition should be your expectation of the chance of that proposition. Let’s unpack that a bit. Suppose I give you a coin. I tell you that it either came from the trick coin factory that makes coins that have a 70% chance of landing heads, or it came from the factory that makes coins that have a 40% chance of landing heads. But I tell you nothing more. What should be your credence that the coin will land heads? Given your lack of information about which factory it comes from, you might have equal credence in the two possibilities — 50% in each. In that case, your expectation of the chance of heads will be (0.5 x 0.7) + (0.5 x 0.4) = 0.55. So, by the Principal Principle, your credence in heads should be 55%. This is the credal principle that I violate in the example I gave above, where I know that the chance of heads is 50%, but I assign a credence of 5% to the coin landing heads.
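The expectation at the heart of the Principal Principle is a credence-weighted average of the possible chances. A minimal sketch, with the hypothetical helper name `expected_chance` and the two-factory numbers from the example above:

```python
def expected_chance(hypotheses):
    """Expectation of the chance of a proposition.

    `hypotheses` is a list of (credence in chance hypothesis, chance it assigns) pairs.
    """
    total = sum(credence for credence, _ in hypotheses)
    if abs(total - 1.0) > 1e-9:
        raise ValueError("credences over the chance hypotheses should sum to 1")
    return sum(credence * chance for credence, chance in hypotheses)


# Equal credence in the 70%-heads factory and the 40%-heads factory.
credence_in_heads = expected_chance([(0.5, 0.7), (0.5, 0.4)])
print(round(credence_in_heads, 2))

# Certainty that the coin is fair: the Principal Principle then demands 50%.
fair_coin = expected_chance([(1.0, 0.5)])
print(fair_coin)
```

The first call reproduces the 55% from the two-factory case; the second shows why a credence of 5% in heads is ruled out when the coin is known to be fair.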

We have an accuracy-first argument for Probabilism, which I’ve talked about a bit above: if your credences violate Probabilism, there are some alternative credences over the same propositions that are guaranteed to be better than yours. But we also have an accuracy-first argument for the Principal Principle: if your credences violate the Principal Principle, there may be no alternative credences that are guaranteed to be better than yours; but there are alternative credences that the chances expect to be better than yours on all the possible hypotheses concerning what the chances are — so these alternatives are guaranteed to have greater objective expected epistemic value than yours. And this, according to the law of decision theory that I call Chance Dominance, is enough to render your credences irrational.

3:AM: And you go on to elaborate a further principle, that of Indifference. What’s this, and how does this principle, along with the Principal Principle and Probabilism, get combined in your approach?

RP:So the Principle of Indifference is another law of credence; and again, like the Principal Principle, it is not as basic as Probabilism. It pertains particularly to the first moment in your epistemic life — the moment before you collect any evidence at all. And it tells you how you should set your credences in the various possibilities that you consider at that moment. Indeed, it tells you that you should assign equal credence to all of those possibilities. So basically it captures the intuitive idea that, without any evidence that favours one possibility over another, all possibilities are equally likely; equal credence is the default, which we should adopt unless we have evidence that draws us away from it. This is the credal principle I violate in the example of Dibble and Dobble the dogs. I have no information that tells more in favour of Dibble being brown and Dobble being black, than in favour of Dobble being brown and Dibble being black. But I assign 99% credence to the latter.

Just as we have an accuracy-first argument for Probabilism and the Principal Principle, so we have one for the Principle of Indifference. The argument for Probabilism relied on our account of epistemic value and the law of decision theory called Dominance; the argument for the Principal Principle relied on the same account of epistemic value, but it used Chance Dominance in place of Dominance. Both of those decision-theoretic principles are intuitively compelling. In contrast, the principle on which the argument for the Principle of Indifference turns is very controversial. It is the Maximin principle (sometimes called the Minimax principle): it says that we should choose the option whose worst-case outcome is best. So, if I’m choosing between actions, I look at the worst-case scenario for each of them and I pick the action whose worst-case scenario is best for me. This is a maximally risk-averse strategy. Indeed, it’s so risk-averse that even Lara Buchak’s decision theory, which is designed specifically to accommodate risk-averse choices, rules it out as too extreme. In the credal case, Minimax says: we should choose the credence function whose worst-case accuracy is best. So we line up all the possible credence functions, write down the minimum accuracy they attain, and pick the one whose minimum accuracy is greatest. It will turn out that this is always the uniform distribution, i.e., the credence function that assigns equal credence to all possibilities. Credence functions that assign more probability to some possibilities than others will have worse worst-cases than the uniform distribution; adopting them will constitute a greater epistemic risk.
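For the two-possibility case of Dibble and Dobble, the worst-case comparison can be sketched as a simple search. The sketch assumes inaccuracy is squared Euclidean distance from the omniscient credences, and it only searches probabilistic credences on a grid of whole percentages; both the grid and the function names are assumptions made for illustration.

```python
def inaccuracy(credences, true_index):
    """Squared Euclidean distance from the omniscient credences at one possibility."""
    return sum(
        (c - (1.0 if i == true_index else 0.0)) ** 2
        for i, c in enumerate(credences)
    )


def worst_case_inaccuracy(credences):
    """Highest inaccuracy the credences could have, across all possibilities."""
    return max(inaccuracy(credences, i) for i in range(len(credences)))


# Credences of the form (p, 1 - p) over the two possibilities:
# (Dibble black and Dobble brown, Dibble brown and Dobble black).
candidates = [(k / 100, 1 - k / 100) for k in range(101)]
best = min(candidates, key=worst_case_inaccuracy)

print(best, worst_case_inaccuracy(best))
print((0.99, 0.01), round(worst_case_inaccuracy((0.99, 0.01)), 4))
```

On this grid the search settles on equal credences of 50% each, and the 99%/1% assignment has a far worse worst case, which is the sense in which it takes a greater epistemic risk.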

3:AM:So how should I plan to update my credences upon receipt of new evidence according to your theory so that I maximize my rationality?

RP:Jim Joyce’s accuracy-first argument for Probabilism launched accuracy-first epistemology — Probabilism is perhaps the central tenet of Bayesian epistemology, so Jim’s argument not only gave us the tools of accuracy-first epistemology, but it was also a sort of proof of concept; if you can’t justify Probabilism (or one of its rivals), then your credal epistemology is off to a bad start; Jim showed that accuracy-first epistemology can. Then, six years later, Hilary Greaves and David Wallace gave an accuracy-first argument for the second tenet of Bayesian epistemology, namely, Conditionalization.

Conditionalization covers those situations where you learn a proposition with certainty. Some people — David Lewis, for instance — have thought that this covers all learning experiences; others — such as Richard Jeffrey — have thought it covers none! In any case, Conditionalization says that you should proceed as follows: Suppose I know you’ve picked a number below 5. I have credences in all of the four possibilities: your number is 1, your number is 2, and so on. Now suppose I ask you whether the number is even or odd, and you tell me it’s odd. Then I take all of the credence that I’d assigned to the possibilities on which it’s even, and I redistribute this over the possibilities on which your number is odd; and I do this in proportion to the credence I had in each of those possibilities before I received this evidence. So, for instance, suppose I was 50% confident you had picked 1, 20% confident you’d picked 2, 25% confident you’d picked 3, and 5% confident you’d picked 4. Then you tell me you picked an odd number. Then I remove the 20% credence from 2 and the 5% credence from 4. This gives me 25% of credence to redistribute over 1 and 3. I want to do that in proportion to how confident I was in those possibilities to begin with. Since I was twice as confident in 1 as in 3, I give twice as much to 1 as I give to 3. So my new credence in 1 is about 67%, while my new credence in 3 is about 33%. Now, Greaves and Wallace are concerned with what you should plan to do when you know that you will learn one of some set of mutually exclusive and exhaustive propositions, as you might do when you are running an experiment and you know what the possible outcomes of it are. And they say you should plan to update by Conditionalization. And they show that doing so uniquely maximises your expected epistemic value.
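The number-guessing example can be worked through mechanically. This is a minimal sketch, assuming credences are stored as exact fractions keyed by the possible numbers; the function name `conditionalize` is hypothetical and introduced only for illustration.

```python
from fractions import Fraction


def conditionalize(prior, evidence):
    """Move all credence onto the possibilities compatible with `evidence`,
    keeping the ratios between their prior credences unchanged."""
    total = sum(p for world, p in prior.items() if world in evidence)
    if total == 0:
        raise ValueError("cannot conditionalize on evidence with zero prior credence")
    return {world: (p / total if world in evidence else Fraction(0))
            for world, p in prior.items()}


# Prior credences from the example: 50%, 20%, 25%, and 5% in 1, 2, 3, and 4.
prior = {1: Fraction(50, 100), 2: Fraction(20, 100),
         3: Fraction(25, 100), 4: Fraction(5, 100)}
odd = {1, 3}

posterior = conditionalize(prior, odd)
for number, credence in posterior.items():
    print(number, credence)
```

The exact posterior credences are 2/3 in 1 and 1/3 in 3, the values the passage gives as rounded percentages; 2 and 4 end up with credence 0.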

More recently, R. A. Briggs and I have given an alternative argument for planning to update by Conditionalization. The Greaves and Wallace result takes you to have fixed prior credences and uses those to evaluate the different possible updating plans you might adopt. Ray and I don’t assume that your priors or your updating plan are fixed. And we show that if you were to have a prior together with an updating plan that isn’t Conditionalization on that prior, then there is an alternative prior and an alternative updating plan such that adopting that latter pair instead of your own pair is guaranteed to do better for you, epistemically speaking.

3:AM:Can you say what you mean when you say that all this justifies (in a sense) veritism in a way similar to the kind of justification that a hedonistic utilitarian might use in ethics? Is this then a kind of utilitarian epistemology?

RP:Yes, I think it’s useful to think of accuracy-first epistemology as something of an analogue of hedonic utilitarianism in epistemology. As with all analogies, it isn’t perfect, but it’s certainly close enough to be helpful. And indeed drawing on that analogy has created some very fruitful discussion, including Hilary Greaves’ epistemic version of Philippa Foot’s and Judith Jarvis Thomson’s trolley problem in ethics, and Jennifer Carr’s epistemic version of population ethics. One of the key parallels between the two theories is the one you mention. There are various objections to utilitarianism that run along the following sort of lines: appealing to aggregate utility alone cannot account for certain moral judgments we make; we can only recover those judgments by talking about rights or obligations or something thoroughly deontological, perhaps in conjunction with aggregate utility; therefore, pure utilitarianism is wrong. And there’s a parallel objection to accuracy-first epistemology and its close cousins: appealing to the accuracy of beliefs and credences alone cannot account for certain epistemic judgments we make; we can only recover those judgments by talking about evidence or epistemic duties or something like that, perhaps in conjunction with talk of accuracy; therefore, pure accuracy-first epistemology is wrong. One of the things that really motivated me to write the book was to answer these sorts of objection. In particular, I was keen to show that laws like the Principal Principle, the Principle of Indifference, and Conditionalization, which are often taken to be best justified by talking about your obligations to your evidence, can be justified by appealing to accuracy alone.

3:AM:What are the main differences in your approach to credal principles to standard alternative approaches? Why do you think your approach is superior?

RP:So I suppose the main alternatives are the pragmatic arguments for credal principles, such as Ramsey’s and de Finetti’s sure loss argument sketched above for Probabilism, and the evidentialist arguments for credal principles, such as the sort of project that Jeff Paris and Alena Vencovska have been engaged in for a number of years. I don’t actually think it’s necessary to set accuracy-first epistemology up as a rival to either of those, though I think I do sometimes speak that way in the book. You might think, for instance, that there are just three different roles that credences play: they guide our actions, they reflect our evidence, and they represent the world. Pragmatic arguments pin down what they must be like to play the first role; evidentialist arguments say how they must be in order to play the second role, and accuracy-first arguments delimit the properties that credences must have in order to play the third role. So, in a way, you might see them as mutually supporting, rather than as rivals. I rather like that view — it appeals to my view of philosophy as a collaborative discipline.

3:AM: You’re currently working on decision making and in particular the problem of how we decide something knowing we’re going to be changed in the future. First, can you sketch what the issue is and why it sets up problems for conventional decision making theory?

RP:The new book is currently called Choosing for Changing Selves. I was inspired to write it after reading Laurie Paul’s Transformative Experience book and Edna Ullmann-Margalit’s ‘Big Decisions’ paper, both wonderful pieces of philosophy. The idea is this: Our values change over our lifetimes. Sometimes this just happens organically — often, people see their political values drift right as they get older; many gain a desire for the status quo. Sometimes it happens as a direct result of a decision we make — there’s fascinating evidence that people ‘socialise’ their values; that is, they shift their values so that they better fit with their situation, so that someone who values self-direction to begin with will likely come to value it less and value conformity more if they embark on a career as a police officer.

But when we choose how to act, decision theory tells us to consult our beliefs (our credences) and our values (our utilities). Now, if those values change, or might change as a result of my decision, it isn’t clear to which utilities I should appeal when I am choosing: my current ones, or my future ones? Perhaps even my past ones? Or perhaps no one self’s values, but rather some amalgamation of them all? So, for instance, take an example that is central in Ullmann-Margalit’s paper and in Paul’s book: you’re choosing whether or not to become a parent. You think it’s at least possible that, if you do become a parent, your values will change, and possibly quite dramatically. Should you pay attention to your current values or to these possible future values when you’re deciding? This particular question is very personal to me. For about a decade, I’ve been trying to decide whether or not to adopt a child. It’s been a very difficult decision to make, and I’ve found it extraordinarily hard to think through the choice systematically — exactly as Ullmann-Margalit and Paul predict. I think I’ve now decided that I won’t. But I can’t promise that I made that decision in line with the theory I’m setting out in the book.

3:AM:So how do you propose we should deal with decision making when a person’s values might change in the future? Do we have to amalgamate past, present and future values and how do we distribute the weights of these in such decision making?

RP:In the first half of the book, I argue for a general framework in which we must answer this question. The standard approach to choice in decision theory runs like this: you specify the actions you could take and the ways the world could be; you specify how much you value having performed a particular action when the world is a given way; and you specify how likely you take the world to be a particular way given that you perform a particular action. Then you use these to give your expected utility for each action: this is a weighted average of your utilities for having performed that action at each of the ways the world could be, where the weights are given by your credences about how the world is on the supposition that you perform the action. The problem that Ullmann-Margalit and Paul discuss is that it isn’t clear how to set these utilities for having performed an action at each of the ways the world could be: should these reflect just your present values, or should they reflect your future values or your past values or some amalgamation of them all? In the first half of the book, I argue that we should treat this as a judgment aggregation problem. That is, we should treat my different selves with their different values much as we treat different people in a society whose values and preferences we wish to aggregate in order to determine policy for that society. In particular, I argue that your utility for having performed an action and the world being a particular way is a weighted average of the different utilities of your past, present, and future selves for that outcome.
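The two weighted averages described above can be laid out in a few lines. This is only a sketch: the acts, states, weights, utilities, and credences are all hypothetical numbers invented for illustration, and the function names are not taken from the book.

```python
def aggregate_utility(utilities_by_self, weights):
    """Weighted average of the utilities that the past, present, and
    future selves assign to a single outcome."""
    return sum(weights[s] * utilities_by_self[s] for s in weights)


def expected_utility(credences, utilities):
    """Weighted average of utilities across the ways the world could be,
    with credences (on the supposition of the act) as weights."""
    return sum(credences[state] * utilities[state] for state in credences)


# Hypothetical weights given to each self; they sum to 1.
weights = {"past": 0.2, "present": 0.5, "future": 0.3}

# Hypothetical utilities of each self for each act-state outcome.
selves_utilities = {
    "change": {
        "values_shift": {"past": 2, "present": 4, "future": 10},
        "values_stable": {"past": 2, "present": 4, "future": 3},
    },
    "stay": {
        "values_shift": {"past": 6, "present": 5, "future": 2},
        "values_stable": {"past": 6, "present": 5, "future": 4},
    },
}

# Hypothetical credences in each state on the supposition of each act.
credences_given_act = {
    "change": {"values_shift": 0.6, "values_stable": 0.4},
    "stay": {"values_shift": 0.1, "values_stable": 0.9},
}


def evaluate(act):
    """Aggregate across selves first, then take the expectation."""
    aggregated = {state: aggregate_utility(by_self, weights)
                  for state, by_self in selves_utilities[act].items()}
    return expected_utility(credences_given_act[act], aggregated)


for act in selves_utilities:
    print(act, round(evaluate(act), 2))
```

Changing the `weights` dictionary shows how sensitive the recommended act can be to the weight each self receives.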

But this leaves open the question: how do you set the weights for that weighted average? Should my past selves get any weight at all? Should the weights for my future selves diminish as we move further into the future? Should I give any weight at all to values that I find morally impermissible at the present time? Should I give more weight to past and future values that are closest to my current values? Should the weight I give to a future self depend in part at least on the way in which that future self forms their values? I discuss all of these questions and consider the arguments on either side.

3:AM:Does the way we operate in this context change if we don’t depend on utility theory?

RP:I certainly don’t think the problem will go away if we move from standard utility theory. Problems of this sort will affect any theory where the decision you should make is determined in part by your values. Such a theory will always have to answer the question: which values? which selves get to fix them? But I do think the problem is interestingly different for other theories of rational choice. Take Lara Buchak’s risk-weighted expected utility theory, for instance. According to this, what it’s rational for you to choose is not just determined by your credences and utilities, but also by your attitudes to risk. But, just as your utilities can change over time, so can your attitudes to risk — I am pretty sure I am more risk-averse now than when I was a child, which is saying something. So now we have to find a way to aggregate not only the utilities of your past, present, and future selves, but also the attitudes to risks that they hold.
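To see how an attitude to risk can enter the calculation alongside credences and utilities, here is a rough sketch of risk-weighted expected utility in the form usually given for Buchak's theory: outcomes are ordered from worst to best, and each improvement over the next-worst outcome is weighted by a risk function applied to the probability of getting at least that much. The gamble and the risk function `p ** 2` are assumptions chosen for illustration, and the function names are hypothetical.

```python
def risk_weighted_expected_utility(gamble, risk_function):
    """`gamble` is a list of (probability, utility) pairs.
    `risk_function` maps probabilities to weights, with r(0) = 0 and r(1) = 1."""
    outcomes = sorted(gamble, key=lambda pair: pair[1])  # worst to best
    value = outcomes[0][1]  # the worst utility is guaranteed
    for i in range(1, len(outcomes)):
        # Probability of doing at least as well as the i-th outcome.
        prob_at_least = sum(p for p, _ in outcomes[i:])
        gain = outcomes[i][1] - outcomes[i - 1][1]
        value += risk_function(prob_at_least) * gain
    return value


def risk_neutral(p):
    """Identity risk function: recovers ordinary expected utility."""
    return p


def risk_averse(p):
    """Assumed convex risk function that discounts chances of improvement."""
    return p ** 2


coin_flip = [(0.5, 0.0), (0.5, 100.0)]  # hypothetical gamble
print(risk_weighted_expected_utility(coin_flip, risk_neutral))
print(risk_weighted_expected_utility(coin_flip, risk_averse))
```

With the identity risk function the result is the ordinary expected utility of the gamble; with the convex one the same gamble is valued lower. If past, present, and future selves have different risk functions, some rule for combining them is needed in addition to the rule for combining utilities.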

3:AM:And for the readers are there five books you could recommend to take us further into your philosophical world?

RP:

Lara Buchak’s Risk and Rationality — not only an elegant and plausible new decision theory, but some brilliant discussions of the nature and purpose of decision theory; highlights are the discussion of objections at the end.

Alvin Goldman’s Knowledge in a Social World — after the Choosing book, I’d like to look into accuracy-first social epistemology, and Goldman has done some wonderful work here.

Sarah Moss’ Probabilistic Knowledge — this is a groundbreaking book that I think will form the basis of many discussions in formal epistemology for many years to come; also, a model of how to write a philosophical book.

Frank P. Ramsey’s Philosophical Papers (edited by Hugh Mellor) — before I became a philosopher, I studied for a PhD in Mathematical Logic and I studied Ramsey Theory; the way his mind worked fascinates me; and, in ‘Truth and Probability’, he laid the foundations for much of how we now think about credences and probabilities.

Amartya Sen’s Collective Choice and Social Welfare — this is another model of how to write a philosophical book; and the insights are remarkable; for me, the highlight is the Liberal Paradox.

[Photo: So Young Lee]

ABOUT THE INTERVIEWER

Richard Marshall is still biding his time.
