Causal

Interview by Richard Marshall.

'Causal concepts that used to be vague, intuitive, or subject to ambiguity can now be formulated in mathematically precise terms: We can clearly distinguish between what it means to probabilistically condition on an event, and intervening to bring about that event, and we can specify when the two cases agree in their predictions. '

'Our best scientific theories do not contain an explicit account of causation, or at least not of the type of causation that we apply to medium sized dry goods. If these theories can be taken as indicators of what a completed physics may look like, then causality will have to take on a very different form. '

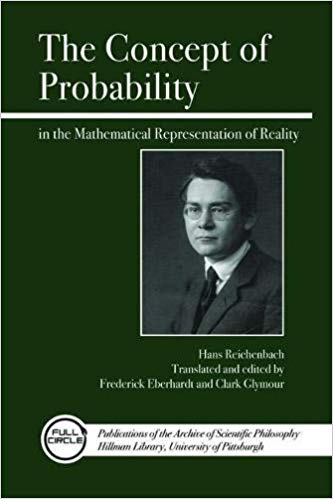

'Reichenbach did not adopt the rather fast-moving mathematization of probability after the publication of the Kolmogorov axioms and after the various technical difficulties for the limiting frequency interpretation of probability had been pointed out by e.g. Alonzo Church concerning the notion of randomness. And on causality Reichenbach was a little bit too far ahead of the times with his Principle of Common Cause and his first attempts at graphical models. Clark Glymour, as a student of Wesley Salmon’s, who was a student of Reichenbach’s, will know the historical influences better, but I think it is fair to say that Reichenbach’s ideas concerning causality were exactly on the right track as precursors to what we now have as the framework of causal graphical models.'

'We were motivated to investigate this because a colleague of ours had gigabytes of video of fruit flies walking around in a “Petri” dish. Krzysztof turned up on my doorstep one day saying that he had been told that I was the new hire at Caltech and knew something about causality. He wanted to know what made the flies in the Petri dish stop in their tracks. -- Well, what does a fly care about? What are the candidate causes?'

'I have always found it peculiar that “the gold standard” for a causal relation is the experiment, or even better, a randomized controlled trial. '

'My thinking is not Bayesian. In fact, years ago, together with David Danks, I wrote a paper arguing that several experiments in cognitive psychology that purported to show evidence of Bayesian reasoning in humans, showed no such thing, or only under very bizarre additional assumptions. It was not a popular paper among Bayesian cognitive scientists.'

'Books are an outdated form of passing along and developing new ideas. They are – generally -- not easily searchable and access to them is (again generally) even more restricted than to journal papers. Unfortunately, parts of philosophy and many other disciplines in the humanities still think books are a good standard of academic excellence relevant to promotion and tenure.'

Frederick Eberhardt is interested in the formal aspects of the philosophy of science, machine learning in statistics and computer science, and learning and modeling in psychology and cognitive science. His work has focused primarily on methods for causal discovery from statistical data, the use of experiments in causal discovery, the integration of causal inferences from different data sets, and the philosophical issues at the foundations of causality and probability. Eberhardt has done work on computational models in cognitive science and historical work on the philosophy of Hans Reichenbach, especially his frequentist interpretation of probability. Here he discusses what we know about causality, Hume's problem, Reichenbach's struggle with the relationship between probability and causality, coordination in Reichenbach, understanding climate change, modelling El Nino and La Nina weather systems, the difference between a cause and a correlation, causal discovery, understanding human emotions, the relationship between micro and macro levels, the Causal Coarsening Theorem, the problem with experiments and randomised controlled trials, facing down AI anxieties, on not being a Bayesian, causal Bayes nets and the need for philosophy to continue to engage with substantive scientific and theoretical literature.

3:AM: What made you become a philosopher?

Frederick Eberhardt: High School: Frau Horwitz was a demanding, no-nonsense philosophy teacher, whose exams were entirely predictable and yet very hard (Q1: What is the position of the philosopher in this article? Q2: How is this different from the positions that other philosophers have held on this topic? Q3: Is the author right?). She instilled the expectation that there are objective standards to judge the quality of any purported answer, even when the questions were not formal.

At the time, one had to wait until high school (the last three years) until one could replace Religion class with Philosophy class, and that was if one was lucky and the school offered the choice. Philosophy seemed to be frowned upon, and not really considered to be part of a good education. In Germany, to this day, Religion is the only school class required by the constitution. I don’t know what Goethe, Bach and Gauss did wrong to not get their foot in the door. For whatever reason, however, German Religion classes often involve more discussion of questions of life than doctrine, which means that enlightened German teacher friends of mine take offense when I complain about a lack of separation between church and state in Germany. (In their defense, of sorts, a colleague of mine here quips that the best way to keep a church in check is to have a state church. – And yes, I have pointed out counterexamples to that.)

In contrast, in the East of the just recently re-united Germany of the time, “Ethik” was and had been a standard high school subject. I remember envying the “Ethik” classes in the Eastern part of re-united Germany, but whether in fact “Ethik” in the former East Germany was any better than Religion class in the former West, I don’t actually know. These days, when German kids can no longer be easily divided into Protestant and Catholic religion classes, there seem to be broader offerings of philosophy (or similarly named subjects) from middle school onwards. That sounds like the right route to me.

So when the psychologist Howard Gardner recently argued in an article in the Chronicle that we should make everyone take one philosophy course at the beginning of college and one at the end, I’d go a step further: Philosophy should be taught at high school across the board – it is a basic skill, an exploration of possible thought and fundamental principles, a recognition of the limits of reasoning and a training in how to ask the right question. Such skills are as essential to navigating life as writing, communication and mathematics are.

In any case, at the end of high school I finally got a taste of philosophy. I do not recall much detail of what we did in philosophy class except that we also read the diaries of J. R. Oppenheimer. They impressed me quite a bit, even though my interests in subsequently pursuing philosophy had very little to do with the conflict between ethics and science. Instead, maths and physics were my main focus. I distinctly remember the disappointment with the idealizations of high school physics and the lack of discussion of what I took to be the fundamental questions. Looking back, I regret not having pursued physics, or the sciences in general, further. But I am aware of the causes that were working on my mind at the time, and so I am rather confident that if I had studied physics, I would have regretted not doing more philosophy. (Looks to me like causation is driving the counterfactual and not vice versa.)

There is a separate answer to your question that is not about the seed that was planted, but about the people and environment that nurtured my philosophical interests. The Philosophy Department at Carnegie Mellon had a profound effect on my thinking. Their open doors, their environment of collaboration, their refusal to respect disciplinary boundaries and their relentless demand to work out ideas formally, set the standard for me to this day. Philosophy was viewed as a generator of new fields of study and as a contributor to existing ones. And, as one ought to expect of any well-designed graduate program in any discipline, they exemplified that a PhD in philosophy could lead to successful careers in other disciplines and endeavors, as well as to professorships in philosophy.

3:AM: Philosophers (and scientists) have long been interested in the causes of mood swings and weather systems and whether drinking wine causes heart disease and other complex phenomena. How would you summarise the state of play regarding our current understanding of causality – and is the philosophical question setting up the right calculations or wondering why the calculations work as explanations?

FE: I think we now have a fairly good account of causality as it pertains to the phenomena that you mention, even if we do not yet know all the causes and effects at work. The understanding is largely due to the development of two inter-translatable frameworks, the causal graphical models framework and the potential outcomes framework, developed by a now quite large group of philosophers, computer scientists and statisticians. Neither of these frameworks provides necessary and sufficient conditions for what it is to be a cause, but instead they both attempt to provide almost axiomatic accounts that link three superficially quite disparate domains that are nevertheless essential to our understanding of causation: 1) probability, as a description of the observed events, 2) experimental interventions that will change the events in ways that are not captured by the observational probability over the events, and 3) counterfactual statements about the observed events.

It is useful to think of analogies here: The Kolmogorov Axioms do not provide a definition of probability, yet they are extraordinarily useful to the application of probability. Similarly, the mathematics of quantum mechanics allows us to make accurate predictions about what will happen in quantum experiments, even though the interpretation of the mathematics is far from resolved. The frameworks I mention for causality address causal questions in a similar vein.

Within the framework of causal graphical models there is now a reasonably comprehensive theory for a variety of different background assumptions about what can and cannot be discovered about the causal relations underlying observed events. The result is an understanding of causality that is scientifically useful. Inference methods that have been developed on the basis of this theory are applied in practice in many disciplines, including medicine, genetics, neuroscience, economics, social science and climate science.

Causal concepts that used to be vague, intuitive, or subject to ambiguity can now be formulated in mathematically precise terms: We can clearly distinguish between what it means to probabilistically condition on an event, and intervening to bring about that event, and we can specify when the two cases agree in their predictions. Or take the counterfactual question: What is the outcome that an intervention would have resulted in, given that in fact we observed some specific event actually occurring? We can now mathematically describe the quantity that needs to be estimated to address this question. Judea Pearl, one of the big contributors to this framework, often recalls how questions like these used to flummox his audience, not because they could not make sense of the question, but because they lacked the framework to represent the questions precisely enough to turn them into ones that can be empirically addressed.
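To make the three kinds of question concrete (conditioning, intervening, and the counterfactual just described), here is a minimal sketch in Python that enumerates a small structural causal model exactly. The model, its parameters and all function names are hypothetical illustrations, not anything from the interview. The counterfactual follows Pearl's three steps of abduction, action and prediction: keep only the noise settings consistent with what was observed, force X to its new value, and recompute Y with the same noise.

```python
from fractions import Fraction
from itertools import product

# Hypothetical toy structural causal model (made-up, for illustration only):
#   Z := U_Z                                 a common cause of X and Y
#   X := Z, flipped when U_X = 1             P(U_X = 1) = p_flip
#   Y := 1 if X + Z + U_Y >= 2 else 0        Y depends on both X and Z
HALF = Fraction(1, 2)


def worlds(p_flip):
    """Yield (probability, u_z, u_x, u_y) for every setting of the exogenous noise."""
    for u_z, u_x, u_y in product((0, 1), repeat=3):
        p = HALF * (p_flip if u_x else 1 - p_flip) * HALF
        yield p, u_z, u_x, u_y


def solve(u_z, u_x, u_y, do_x=None):
    """Evaluate the structural equations; do_x replaces X's own mechanism."""
    z = u_z
    x = (1 - z if u_x else z) if do_x is None else do_x
    y = int(x + z + u_y >= 2)
    return x, y


def p_y_given_x(x_val, p_flip):
    """Observational P(Y=1 | X=x_val): keep only worlds where X happened to be x_val."""
    num = den = Fraction(0)
    for p, *u in worlds(p_flip):
        x, y = solve(*u)
        if x == x_val:
            den += p
            num += p * y
    return num / den


def p_y_do_x(x_val, p_flip):
    """Interventional P(Y=1 | do(X=x_val)): set X to x_val in every world."""
    return sum(p * solve(*u, do_x=x_val)[1] for p, *u in worlds(p_flip))


def p_counterfactual(x_obs, y_obs, x_new, p_flip):
    """P(Y_{X=x_new} = 1 | X=x_obs, Y=y_obs) via abduction, action, prediction."""
    num = den = Fraction(0)
    for p, *u in worlds(p_flip):
        if solve(*u) == (x_obs, y_obs):           # abduction: worlds consistent with the facts
            den += p
            num += p * solve(*u, do_x=x_new)[1]   # action + prediction with the same noise
    return num / den


if __name__ == "__main__":
    q = Fraction(1, 4)
    print(p_y_given_x(1, q))             # 7/8
    print(p_y_do_x(1, q))                # 3/4
    print(p_counterfactual(0, 0, 1, q))  # 4/7
```

With `p_flip = 1/4`, X mostly copies Z, so observing X = 1 is also evidence that Z = 1, and conditioning (7/8) overstates the effect of intervening (3/4). With `p_flip = 1/2`, X is independent of Z and the two quantities coincide, which is one simple instance of specifying when conditioning and intervening agree.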

Having said that, there remain many challenges, not just of a computational or statistical nature (of which there are a truckload already). We need to better understand how to think about causation in dynamical systems where both a variable and its time derivatives might operate as causes. In what sense can such variables be separate causes? How might we discover them from data? Many scientific domains use dynamical equations to model their systems of interest, and our causal toolboxes are not yet well integrated with these models.

We also need to understand how it is possible to give causal accounts of a system at multiple levels of analysis. Philosophers in metaphysics will be familiar with this problem in terms of the “exclusion problem” in mental causation where psychological and neural events appear to be in competition as candidate causes of behavior. We need to turn the philosophical advances that exist on this problem into tools that are applicable to scientific domains. How do we formally approach scenarios in which causal variables supervene on a space of finer (causal?) variables? Can we discover multi-scale causal relations?

Another question concerns the interaction between causal relations and logical relations. This problem, too, is not foreign to the philosophical literature: does Socrates’ death cause Xanthippe to become a widow? This may standardly be treated as a question concerning the metaphysics of causation. But in times of big data, this question is an epistemological one about the inferences one can draw about the causes and effects between objects and individuals that are stored in large relational databases. To make progress on this question we need an account of how to think about the interaction between logical or conceptual relations and causal relations.

There are, of course, still many other causal questions that justifiably demand the attention of philosophers, and it is not as if philosophers (and others) have not started looking at the questions I mention, and in some cases already made substantive contributions towards answers. But, unlike other aspects of causality, on these three topics I think we are still only at the beginning of a scientifically useful understanding.

3:AM: Hume famously argued that causal knowledge isn’t a priori and that it can’t be directly observed. He’s been taken to conclude that objective causality is dubious and that our inability to think without the concept of causality is just a quirk of our mind rather than anything about the world itself. Knowing a reason for anything is seriously in jeopardy if this kind of thinking goes through. So how do you respond to the Humean challenge? Are you a realist or anti-realist about causality – accepting that both can’t help but believe in causality.

FE: I don’t know the answer to this question. I do think that Hume’s demands for the justification of the existence of any objective causal claim were too high. Such demands would speak against any kind of unobservable scientific quantity. But pointing this out does not settle the question you pose.

Our best scientific theories do not contain an explicit account of causation, or at least not of the type of causation that we apply to medium sized dry goods. If these theories can be taken as indicators of what a completed physics may look like, then causality will have to take on a very different form. For example, the observed phenomena in the Einstein-Podolsky-Rosen experiment are straightforwardly accounted for in quantum mechanics. But a causal analysis of the experiment leads to an apparent violation of the causal Markov condition, perhaps one of the most central causal axioms, which encodes Reichenbach’s idea of “screening off”. Nevertheless, as Glymour points out in Markov Properties and Quantum Experiments, both the set-up and interpretation of the EPR experiment are riddled with causal assumptions akin to the Markov condition. The pieces don’t fit together and something has to give, but there is disagreement about what needs to be revised.
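For reference, here is one standard textbook formulation of the causal Markov condition, for an acyclic causal graph G over variables X_1, ..., X_n, where Pa_G and ND_G denote a variable's parents and non-descendants in G, together with the factorization it is equivalent to. The second line is the screening-off equation for two effects A and B of a common cause C, as in Reichenbach's conjunctive fork. The notation is ours, not the interview's.

```latex
\begin{aligned}
&X_i \perp\!\!\!\perp \mathrm{ND}_G(X_i) \mid \mathrm{Pa}_G(X_i) \ \text{ for all } i
  \iff P(x_1,\dots,x_n) = \prod_{i=1}^{n} P\big(x_i \mid \mathrm{pa}_G(x_i)\big), \\
&P(A \wedge B \mid C) = P(A \mid C)\, P(B \mid C).
\end{aligned}
```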

The ability to predict what will happen when a system is subject to intervention strikes me as one of the main reasons why one would care about a causal model of a system. On this picture, the intervention introduces an exogenous influence into the system that an agent may have some control over. It may well turn out that in a model of a completed physics such an exogenous influence (with or without control) makes no sense. In that case I would have to accept that a causal model is a crude description that says more about how I think about the world than about how the world actually is. That sounds like an anti-realist view. Nevertheless, all my behavior, both in my written work and my daily life, is consistent with van Fraassen’s description of a realist (about causation). So, I don’t have a conclusive answer to Hume’s dilemma.

3:AM: Hans Reichenbach’s approach to inductive reasoning was ‘fiercely empirical’, provoked by a conflict between neo-Kantian a priorism and Einsteinian relativity. Can you sketch for us his approach to causality and probability and say what makes it distinctive – and is it any good?

FE: Reichenbach struggled with the relation between causality and probability. Let’s be a little anachronistic: Reichenbach is known for his development of probability as the limiting frequency in an infinite sequence of < blank > trials. I say “blank” because it is rather tricky to figure out what exactly he wanted to fill in the blank. Of course, one might say “random” or “independent and identically distributed” or – his term – “normal” sequences, but this just replaces the problem with terms that themselves need to be explained, and Reichenbach was aware of some of the technical challenges that had been raised about candidate proposals. In his Theory of Probability, it is clear that, whatever these “normal sequences” were, Reichenbach did not want to ground probability on causal notions. Why? Because at that point he took the view that causality is inferred from probabilistic relations, and is neither directly observable nor known a priori. In The Direction of Time he then develops the connection between causality and probability in terms of his notion of “screening off”, e.g. that a cause screens off its effects from any prior causal history. This “screening off” relation transfers to the observed probabilities. Consequently, probabilistic independence relations that one may find in measurement data can provide insight into the underlying causal structure. He formalized these ideas in terms of the Principle of Common Cause, which in many ways is a precursor of the causal Markov condition, central to the framework of causal graphical models. (Unfortunately, Reichenbach did not get his screening-off relations quite right – in The Direction of Time he incorrectly discusses what happens when one conditions on a common effect. He makes the mistake most people make when first thinking about the Monty Hall problem, namely thinking that, given the door that Monty opens, it doesn’t matter whether one switches doors or stays with the one already chosen – but it does matter!)

In any case, in the second half of his life Reichenbach viewed probabilistic notions as more fundamental than causal ones.
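The Monty Hall point can be checked by brute-force enumeration. Here is a minimal Python sketch, assuming the usual textbook rules of the puzzle: the car and the contestant's pick are independent and uniform over three doors, and Monty never opens the picked door or the car's door, choosing at random when he has a choice. It also shows the general lesson about common effects: the car's location and the pick are independent, yet they become dependent once we condition on the door Monty opens, which both of them influence. All names are illustrative.

```python
from fractions import Fraction
from itertools import product

DOORS = (0, 1, 2)


def joint():
    """Yield (probability, car, pick, opened) under the standard Monty Hall rules.

    Car and pick are independent and uniform. The opened door is a common
    effect of both: never the pick, never the car, and a fair coin decides
    between the two goat doors when the pick already hides the car.
    """
    for car, pick in product(DOORS, repeat=2):
        options = [d for d in DOORS if d != pick and d != car]
        for opened in options:
            yield Fraction(1, 9) / len(options), car, pick, opened


def p_car(car_val, pick_val, opened_val=None):
    """P(car = car_val | pick = pick_val [, opened = opened_val])."""
    num = den = Fraction(0)
    for p, car, pick, opened in joint():
        if pick == pick_val and (opened_val is None or opened == opened_val):
            den += p
            num += p * (car == car_val)
    return num / den


def win_probability(switch):
    """Overall chance of winning for a contestant who always stays or always switches."""
    total = Fraction(0)
    for p, car, pick, opened in joint():
        if switch:
            final = next(d for d in DOORS if d not in (pick, opened))
        else:
            final = pick
        total += p * (final == car)
    return total


print(win_probability(switch=False))  # 1/3
print(win_probability(switch=True))   # 2/3
# Unconditionally, the pick tells us nothing about the car ...
print(p_car(0, pick_val=0), p_car(0, pick_val=1))  # 1/3 1/3
# ... but conditioning on the common effect (Monty opened door 2) makes them dependent.
print(p_car(0, pick_val=0, opened_val=2), p_car(0, pick_val=1, opened_val=2))  # 1/3 2/3
```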

This is almost a complete reversal of the position in his doctoral thesis, in which he started from a neo-Kantian view that causal knowledge is synthetic a priori. His reasoning fit the thinking of the time. In his 1915 thesis he provided a transcendental argument to show that knowledge of the existence of a limit of the relative frequencies in a sequence of causally independent trials (whatever that means) is also synthetic a priori: if it were not, we would not have any scientific knowledge. Kant’s synthetic a priori knowledge of causality only gives us knowledge about the causal relations among individual events. But scientific knowledge is about populations of events. We only ever see a finite sequence of instances of these events. If we could not guarantee that this sequence, when extended ad infinitum, has limiting frequencies, then there would be no reason to think we could obtain claims about populations of events. But we do have successful scientific theories making claims about populations of events. So, knowledge of the existence of such a limit must be synthetic a priori.

I should have read his thesis earlier, and maybe I would have written a bolder PhD thesis than I did... In any case, what I think of Reichenbach’s thesis argument is written up in the introduction to the translation of the thesis that Clark Glymour and I published with Open Court (that was a fun project, especially Clark’s German, which he spoke more loudly when I did not understand).

In papers after his 1915 thesis and before The Theory of Probability in 1935 (1949 in English), Reichenbach oscillated back and forth, trying to grapple with the pressure on all things synthetic a priori, coming from Einstein’s theory of relativity. He struggled to preserve scientifically workable and philosophically justified concepts of probability and causality.

My best effort at making sense of Reichenbach’s account of probability logic is in a paper with Clark Glymour that unfortunately disappeared into one of these collections no-one reads. I am still rather pleased with that paper, since I think we made a genuine and concerted effort to try to understand Reichenbach’s view, even though we ultimately conclude that it fails. Reichenbach did not adopt the rather fast-moving mathematization of probability after the publication of the Kolmogorov axioms and after the various technical difficulties for the limiting frequency interpretation of probability had been pointed out by e.g. Alonzo Church concerning the notion of randomness. And on causality Reichenbach was a little bit too far ahead of the times with his Principle of Common Cause and his first attempts at graphical models. Clark Glymour, as a student of Wesley Salmon’s, who was a student of Reichenbach’s, will know the historical influences better, but I think it is fair to say that Reichenbach’s ideas concerning causality were exactly on the right track as precursors to what we now have as the framework of causal graphical models.

3:AM: He had views on metaphysics and epistemology – was he a pragmatist and a realist about the external world – are you sympathetic to his views or do your own views on causality, probability and the like suggest a different metaphysics and epistemology to his?

FE: There is an enormous amount one could say here and I have tried to make some sense of Reichenbach’s metaphysics and epistemology in the SEP article on Reichenbach. I still agree with what we said there. I will only pick out Reichenbach’s concept of “coordination” here in the hope that it piques someone’s interest. Coordination, for Reichenbach, is the process of alignment between events in the world and descriptions of those events in language. Reichenbach was concerned that this alignment proceed successfully, in order for scientific theories, which are couched in language, to reflect events and the structure of the world. Moreover, for Reichenbach this alignment was the bottleneck for scientific inference, since it limits our access to the external world: our scientific basis is sensory data, so any access to relations such as causality, which are not directly observed, is inferential. This is where Reichenbach’s fiercely empirical pragmatism kicks in. But he was not a positivist or an instrumentalist. His views had realist aspects to them.

I find this notion and process of “coordination” highly intriguing, because – even if Reichenbach never really spelled it out in any detail – it indicates that he clearly recognized the modern challenge of AI: How am I going to build a robot laden with sensors such that it can parse its sensory input in a way that is scientifically useful? This is not modern data analysis, but the question of data construction in the first place. You might say, this was Locke’s problem long before. Sure. But it was Reichenbach’s too. And it may well be worth your time to make it yours.

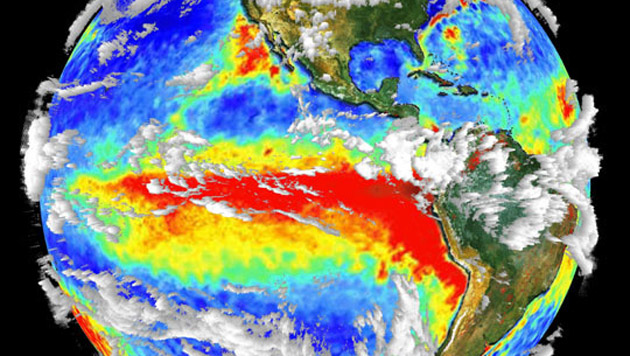

3:AM: Climate is often held to be a very difficult thing to understand and predict and aspects of climate are subjected to chaotic dynamics. You have recently written about applying what you call a ‘causal learning framework’ to help learn causal mechanisms involved in weather systems. So can you explain to us what this framework is and how it works?

FE: Together with Krzysztof Chalupka and Pietro Perona I tried to make some headway into the problem of variable construction, similar to the challenge of “coordination” in the previous question. We wanted to have a principled way of identifying causal macro variables. Standard causal discovery methods take as input a data set of measurements over a set of well-defined causal variables, and the task is to identify the causal relations among those variables under a given set of background assumptions. But what if you don’t know what the relevant causal variables are in your system? Can any measured quantity be considered a causal variable? More generally: Can there be causal descriptions of the same system at multiple levels of analysis? Or is there only one correct level of causal description?

We were motivated to investigate this because a colleague of ours had gigabytes of video of fruit flies walking around in a “Petri” dish. Krzysztof turned up on my doorstep one day saying that he had been told that I was the new hire at Caltech and knew something about causality. He wanted to know what made the flies in the Petri dish stop in their tracks. -- Well, what does a fly care about? What are the candidate causes? All we had was oodles of high-resolution video of flies marching about, and I knew of no causal discovery method suitable for the problem. So we started developing a theory that could take low level measurement data as input and infer (or construct, depending on how you view it) causal variables that would support an interventionist interpretation of causation, i.e. the output variables would be such that if they are subject to intervention, then they would have a well-defined causal effect.

The theory was entirely domain general, so we also started looking for examples of application in climate science.

3:AM: And in applying it to El Nino and La Nina weather systems, has it proved successful?

FE: Yes, I think so. We were looking for a relatively simple real-world proof of concept of our theory. Climate scientists define El Nino and La Nina as a specific divergence of the mean sea-surface temperature in a rectangular area of the equatorial Pacific. But of course that specific rectangle is at best an indicator of the macro-level climate phenomenon, not the actual cause that we mean when we say that El Nino caused rain in California or that it had an effect on the economy of South East Asia.

We wanted to get a better grip on what El Nino actually is. The Causal Feature Learning method we had developed takes as input a grid of fine-grained sea surface temperature and wind speed measurements over a large geographical region of the equatorial Pacific, collected over several decades, and returns a macro-level description of this system that contains both El Nino and La Nina as two macro-level states, distinct from other macro-level climate states in that region. These are not neat geographical rectangles, but they are still mathematically well-defined macro-states of the sea surface temperature. There are details about the exact causal interpretation that can be given to our results, but we were very excited that we could construct, in an unsupervised manner (i.e. without knowledge of the target macro-level), causal variables that supervene on low-level measures. As it currently stands, this is not yet a contribution to climate science, but my hope is that it provides a basis for thinking clearly about multi-level causal descriptions.
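The following is only a toy illustration of the idea of building macro-states from fine-grained data without a predefined target; it is not the Causal Feature Learning implementation, which has to estimate conditional distributions from real data and handle confounding. The sketch assumes a hypothetical 4-cell grid of binary warm/cold readings and a made-up rule in which the probability of some effect depends only on cells 2 and 3. Grouping micro-states that induce the same conditional distribution of the effect recovers three macro-states, with no rectangle or threshold specified in advance.

```python
from collections import defaultdict
from fractions import Fraction
from itertools import product

# Hypothetical micro-level system: every 4-cell grid of binary warm(1)/cold(0) readings.
MICRO_STATES = list(product((0, 1), repeat=4))


def p_effect(state):
    """Made-up P(effect = 1 | micro-state); only cells 2 and 3 matter."""
    warm = state[2] + state[3]
    return {0: Fraction(1, 10), 1: Fraction(1, 2), 2: Fraction(9, 10)}[warm]


def observational_partition(states, cond_prob):
    """Group micro-states that induce the same conditional distribution of the effect.

    The effect is binary here, so P(effect = 1 | state) fully describes that distribution.
    """
    classes = defaultdict(list)
    for s in states:
        classes[cond_prob(s)].append(s)
    return dict(classes)


macro = observational_partition(MICRO_STATES, p_effect)
for prob, members in sorted(macro.items()):
    print(prob, len(members))
# 1/10 4
# 1/2 8
# 9/10 4
```

With real measurements, P(effect | micro-state) would have to be estimated and the classes found by clustering, and the resulting observational classes are only candidates for causal macro-states.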

3:AM: Is cognitive psychology really a natural science given that it can’t be studied using many standard experimental techniques found in natural sciences?

FE: I bristle at the implied sharp disciplinary distinctions and purported consensus standards within other disciplines, quite apart from the evaluative connotations of this question.

3:AM: Fair enough! I shall move on speedily! What makes something a cause rather than just a correlation? Do we know what the necessary and sufficient assumptions are for causal discovery in most settings?

FE: Two quite different questions, and just in case there is a lazy reader (God forbid!), let me emphasize the difference: The second question is about causal discovery, not about the definition of a cause.

Ok, with that aside, let's look at the first question: Correlations are symmetric, causes (and effects) generally are not. Causation is generally considered to be time-ordered, correlation need not be. Causal relations can be used to predict what happens under intervention, while correlations are ambiguous with regard to what they predict under intervention: if it were true that drinking red wine in moderation is negatively correlated with heart disease, it would not follow that making everyone drink a glass of red wine would reduce heart disease; the correlation may be due to a common cause. Of course, there may be causal relations that one cannot intervene on; in some cases, intervention is perhaps even impossible – we can discuss those cases separately.
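The common-cause worry can be illustrated with a small simulation. This is a minimal sketch with made-up numbers: a hypothetical "lifestyle" variable makes people both more likely to drink wine and less likely to develop heart disease, while wine itself has no effect at all. Observationally, wine drinkers have less heart disease; under an intervention that sets everyone's wine consumption, the disease rate does not move.

```python
import random


def simulate(n, do_wine=None, seed=0):
    """Draw n (wine, disease) pairs from a hypothetical confounded population.

    'lifestyle' raises the chance of drinking wine and lowers the chance of
    heart disease; wine itself has NO effect on disease. Passing do_wine=0 or 1
    replaces the wine mechanism for everyone, i.e. an intervention.
    """
    rng = random.Random(seed)
    rows = []
    for _ in range(n):
        lifestyle = rng.random() < 0.5
        if do_wine is None:
            wine = rng.random() < (0.7 if lifestyle else 0.2)
        else:
            wine = bool(do_wine)
        disease = rng.random() < (0.1 if lifestyle else 0.3)
        rows.append((wine, disease))
    return rows


def disease_rate(rows, wine=None):
    """Share of rows with disease, optionally restricted to one wine value."""
    picked = [d for w, d in rows if wine is None or w == wine]
    return sum(picked) / len(picked)


observed = simulate(100_000)
print(round(disease_rate(observed, wine=True), 3))
print(round(disease_rate(observed, wine=False), 3))
print(round(disease_rate(simulate(100_000, do_wine=1)), 3))
```

Under these made-up numbers the exact model values are P(disease | wine) = 0.065/0.45 ≈ 0.144, P(disease | no wine) = 0.135/0.55 ≈ 0.245, and P(disease | do(wine)) = 0.2, the same as the overall rate; the simulated rates should land close to these.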

On the second question: I think we are in very good shape these days. For many, many sets of background assumptions, we have a very good understanding of what can and what cannot be discovered about the underlying causal relations. Of course, in “most” cases we may just not have enough data. Rather than trying to list examples here, I’ll mention that in the not too distant past I tried to give an impression of the variety of necessary and sufficient assumptions for causal discovery in a short paper. The interested reader can take a look. I have tried to make it accessible.

3:AM: You’ve recently outlined a new research program for investigating human emotions. This involves a novel technique and a novel analysis. Can you sketch for us what the novelties involve and what it reveals about human emotions?

FE: This is a very exciting collaboration with my neuroscience colleagues Julien Dubois and Ralph Adolphs at Caltech. Here is only a very brief outline: In cases of severe epilepsy, patients are implanted with electrodes to locate the sources of their seizures. After many years of modeling and testing work, a team at the University of Iowa collaborating with the Adolphs lab received IRB approval to not only record from these electrodes, but also stimulate the brain areas these electrodes are in. Even more amazingly: Concurrently with the stimulations, they are permitted to perform functional magnetic resonance imaging. That is, at some level, we can explore the effect of the stimulation across the whole brain. For me this is very exciting because of course we can obtain a fair amount of imaging data prior to the stimulation, and using the techniques for causal discovery from observational data, attempt to get at least some insight about what the causal relations among the brain areas are. The stimulation can then be used to test some of the causal hypotheses we get from the observational data.

Prior to this collaboration, I had felt a bit like a fraud because I had worked on the theory of causal discovery for years and not got my hands dirty with real data. Well, those were the good old days. We are struggling with all the challenges of the indirect measurement of fMRI that massively “smudges” the neural activity in time and space, we don’t know what the correct “variables” are in the brain, our sample sizes are too low, our computers do not have enough RAM for the causal search etc. BUT, the hope is that as we slowly gain traction on this, we may be able to start tracing (and verifying!) the causal connections between brain areas that give rise to particular mental states, such as emotions.

3:AM: A key to much work on causation is this relationship between micro and macro levels, such as gene sequencing being used to predict macro-level states of the body, or as in the weather system example you discussed earlier where micro-level data discovers what drives the macro level features of a weather system. Does this mean that your understanding of multi-level causality can explain how physics causes biology, or in principle how physics can cause poetry or economics?

FE: Yes, and I like your proposal: the physics of poetry, my next book! However, I object to the causal relation that you introduce between physics and poetry: Physics does not cause poetry; in this context, that is a category mistake that sounds somewhat similar to the claim that the kinetic energy of the particles causes the temperature. Let me stick to economics, since it will have fewer associations with being inexplicable than poetry (at least that is what my colleagues in economics say). Economics supervenes on the interactions of a bunch of agents, who themselves supervene on their physical bases. The theory I developed together with Krzysztof Chalupka aims to give an account of how it is possible that there might be causal descriptions of one and the same system both in terms of its physical basis and in terms of macro-economic variables (in this setting). The theory is mathematical and makes no commitment about what exactly the metaphysical relations are, but (at least in this example) it would be a mistake to call them causal, since various counterfactual and interventional constraints cannot be satisfied. The theory comes with a method that – at least in principle – allows you to take the microlevel description and check whether it indeed can be aggregated into a macrolevel causal description. That is to say, in principle, if we could measure all the interactions among our agents, we could empirically investigate whether our macro-economic descriptions are correct, or almost correct, or need to be revised. The driving principle is that if there indeed is a macrolevel causal quantity, then it should be detectable by an “unsupervised” method from the microlevel description of the system, that is, it should be detectable by a method that does not already contain a pre-defined macrolevel representation.

3:AM: What’s the Causal Coarsening Theorem designed to do, and how does it help us understand visually driven behaviour in humans?

FE: Very roughly, the Causal Coarsening Theorem states that if phenomena in the world are indeed driven by macro-level causes (rather than everything having to be analyzed at the finest level), then, under relatively weak assumptions, we can detect these macro-level causes in observational data: the distinctions among the values of the variables that are relevant to accounting for the observational data over those variables are in fact a superset of the distinctions that are causally relevant. So the causal description of a system is, with respect to the value space of the variables, coarser than its observational description. It is a mathematical theorem with a measure-theoretic guarantee. But let me give an example of what it captures: A barometer is a good predictor of tomorrow’s weather. Observationally, many of the distinctions the barometer makes are relevant to my prediction of the weather. But the barometer is not a cause of tomorrow’s weather: No matter how much I move the needle, the weather remains sunny in Southern California. So none of the distinctions among the barometer’s values is causally relevant. Causally, the barometer has only “one” state, namely: no effect on the weather. The Causal Coarsening Theorem generalizes this coarsening relation to more complicated relations among variables that may involve both confounding and causal effects. The case of human vision was one example we used in our first paper on the theory.
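To make the barometer example concrete, here is a minimal sketch with made-up numbers (every probability below is hypothetical). It computes P(rain | barometer = b) by conditioning and P(rain | do(barometer = b)) by intervening, then groups barometer readings that give the same answer. It only illustrates the coarsening relation the theorem describes; it is not Chalupka and Eberhardt's algorithm.

```python
from fractions import Fraction as F

# Hypothetical toy model: air pressure causes both the barometer reading and the weather.
P_PRESSURE = {"low": F(1, 2), "high": F(1, 2)}
P_RAIN_GIVEN_PRESSURE = {"low": F(4, 5), "high": F(1, 10)}
# Barometer readings 0-3 are noisy, but depend only on pressure.
P_READING_GIVEN_PRESSURE = {
    "low": {0: F(1, 2), 1: F(3, 10), 2: F(1, 10), 3: F(1, 10)},
    "high": {0: F(1, 10), 1: F(1, 10), 2: F(3, 10), 3: F(1, 2)},
}
READINGS = [0, 1, 2, 3]

def p_rain_observational(b):
    """P(rain | barometer = b): conditioning on the reading, via Bayes' rule."""
    joint = {p: P_PRESSURE[p] * P_READING_GIVEN_PRESSURE[p][b] for p in P_PRESSURE}
    total = sum(joint.values())
    return sum(joint[p] / total * P_RAIN_GIVEN_PRESSURE[p] for p in joint)

def p_rain_interventional(b):
    """P(rain | do(barometer = b)): setting the needle cuts the pressure -> reading
    edge, and there is no reading -> weather edge, so b drops out entirely."""
    return sum(P_PRESSURE[p] * P_RAIN_GIVEN_PRESSURE[p] for p in P_PRESSURE)

def partition(values, f):
    """Group together the values that f cannot tell apart."""
    cells = {}
    for v in values:
        cells.setdefault(f(v), []).append(v)
    return sorted(cells.values())

print("observational cells:", partition(READINGS, p_rain_observational))  # [[0], [1], [2], [3]]
print("causal cells:", partition(READINGS, p_rain_interventional))        # [[0, 1, 2, 3]]
```

Every reading carries its own observational information about rain, but causally all four readings collapse into a single cell: the “one” state of the barometer.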

3:AM: Why don’t you think that experiments such as randomised controlled trials can independently provide a gold standard for causal discovery? Why do assumptions about underlying causal structures need to be already in place? Doesn’t this undermine many kinds of examination and test theories in education, for example?

FE: I have always found it peculiar that “the gold standard” for a causal relation is the experiment, or even better, a randomized controlled trial. Anyone who has done any experiments will know what a mess experiments are: low sample size; did I successfully intervene on the target; did my randomization give me a result that is correlated with a confounder; etc. In contrast, observational data generally comes in much larger quantities, is often much easier to measure, and is generally obtained in a more natural environment. Of course, you have the problem of confounding, changes in background conditions, etc., but it just isn’t obvious to me why that is so much worse.

Inspection of the assumptions required for causal inference in the experimental vs. observational case shows this as well. For philosophers, perhaps the most familiar account of an experimental intervention is Woodward’s definition of an intervention in Making Things Happen. Take a look at it! (p. 98) I think the definition specifies the correct set of assumptions. But just think for a moment whether you would rather bet on all of those assumptions holding and have 100 samples, or take observational measures and have 100,000 samples. I think it depends. But paying more attention to the assumptions required for causal inference in observational data could definitely improve our options for scientific investigation.
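The 100-versus-100,000 trade-off can be sketched in a small simulation. Everything here is hypothetical: a true treatment effect of 1.0, a measured confounder z, and an optional unmeasured confounder u. The simulated trial is idealized (assignment is a perfect coin flip on treatment, independent of everything else), which is exactly the kind of condition an intervention definition spells out. The observational estimate stratifies on z and is only trustworthy under the assumption that z is the sole confounder.

```python
import random
import statistics

TRUE_EFFECT = 1.0  # hypothetical effect of treatment t on outcome y

def simulate(n, randomized, rng, hidden_strength=0.0):
    """Draw n hypothetical units as (z, t, y) tuples.

    z is a measured binary confounder; u is an unmeasured one whose influence on
    both treatment and outcome is set by hidden_strength (0 means none).
    """
    rows = []
    for _ in range(n):
        z = rng.random() < 0.5
        u = rng.gauss(0, 1)
        if randomized:
            # Idealized trial: assignment is a coin flip, independent of z and u.
            t = rng.random() < 0.5
        else:
            # Observational regime: uptake depends on z (and on u if hidden_strength > 0).
            p_treat = (0.8 if z else 0.2) + 0.1 * hidden_strength * u
            t = rng.random() < min(max(p_treat, 0.0), 1.0)
        y = TRUE_EFFECT * t + 2.0 * z + hidden_strength * u + rng.gauss(0, 1)
        rows.append((z, t, y))
    return rows

def difference_in_means(rows):
    treated = [y for _, t, y in rows if t]
    control = [y for _, t, y in rows if not t]
    return statistics.mean(treated) - statistics.mean(control)

def adjusted_effect(rows):
    """Stratify on z and weight each within-stratum contrast by P(z).
    Unbiased only if z blocks every back-door path (no unmeasured confounding)."""
    estimate = 0.0
    for z_val in (False, True):
        stratum = [row for row in rows if row[0] == z_val]
        estimate += len(stratum) / len(rows) * difference_in_means(stratum)
    return estimate

rng = random.Random(0)
rct_estimates = [difference_in_means(simulate(100, True, rng)) for _ in range(200)]
obs_clean = adjusted_effect(simulate(100_000, False, rng))
obs_hidden = adjusted_effect(simulate(100_000, False, rng, hidden_strength=1.0))

print(f"200 trials, n=100 each: mean {statistics.mean(rct_estimates):.2f}, "
      f"sd {statistics.stdev(rct_estimates):.2f}")
print(f"observational, n=100000, assumptions hold: {obs_clean:.3f}")
print(f"observational, n=100000, hidden confounder: {obs_hidden:.3f}")
```

The small trial is right on average but any single run can be off by a quarter or more; the large observational study is very precise when its assumption holds and confidently wrong when it does not. Neither regime is assumption-free, which is the point of comparing the two sets of assumptions.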

3:AM: When it comes to calculating algorithms for very complex things, such as minds for example, we might use computers to handle the calculations and so on. Is there a possible problem that might emerge whereby we end up with integrated Bayesian models and algorithmic and implementational level models for an AI, for example, that exceed our ability to understand them? Is it possible to have systems ‘working’ that we don’t understand because no human could possibly do the math, and wouldn’t that be worrying?

FE: Oh dear, the Musk & Hawking question. We already have plenty of AI systems which exceed our ability to understand them. Many people around me – non-artificial intelligences, so to speak -- also exceed my ability to understand them, and I didn’t even have a hand in constructing them. The one intelligence that I did have a part in constructing also often exceeds my ability to understand him. If full understanding were a requirement for the development or application of a new system, I don’t think we would have got to where we are. But yes, ethics and regulation boards will continue to be busy. – If we do our philosophy education right, we might even secure some more jobs for philosophers. We may even succeed in not being left to travel on spaceship B with the PR execs and management consultants.

3:AM: Are philosophers missing a trick if they don’t apply your thinking to their problems – I’m thinking of the hard problem of consciousness, ethics, freewill, aesthetics – even phenomenology and existentialism?

FE: My thinking is not Bayesian. In fact, years ago, together with David Danks, I wrote a paper arguing that several experiments in cognitive psychology that purported to show evidence of Bayesian reasoning in humans showed no such thing, or showed it only under very bizarre additional assumptions. It was not a popular paper among Bayesian cognitive scientists.

In causality, the work I do uses the framework of “causal Bayes nets”, which is a misnomer, since the framework can be applied with Bayesian or classical statistics. It requires a probability distribution, but the user is free to pick their interpretation of that distribution. Jiji Zhang has done some work exploring an entirely subjective Bayesian account of this framework, but my impression is that the majority of users apply whatever statistical account is convenient for their application.
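A small sketch of why the name is a misnomer: the graph fixes the form of the distribution (the Markov factorization), while the numbers in it can be estimated classically or in a Bayesian way. The two-node graph rain → wet_grass, the data, and the Beta(1, 1) prior are all hypothetical choices for illustration.

```python
from collections import Counter

# Hypothetical (rain, wet_grass) observations for the two-node graph rain -> wet_grass.
data = [(1, 1)] * 6 + [(1, 0)] * 1 + [(0, 1)] * 2 + [(0, 0)] * 11

def cpt_classical(data):
    """Maximum-likelihood estimate of P(wet = 1 | rain = r): relative frequencies."""
    counts = Counter(data)
    return {r: counts[(r, 1)] / (counts[(r, 1)] + counts[(r, 0)]) for r in (0, 1)}

def cpt_bayesian(data, alpha=1.0):
    """Posterior mean of P(wet = 1 | rain = r) under an assumed Beta(alpha, alpha) prior."""
    counts = Counter(data)
    return {r: (counts[(r, 1)] + alpha) / (counts[(r, 1)] + counts[(r, 0)] + 2 * alpha)
            for r in (0, 1)}

def joint(p_rain, cpt, r, w):
    """Markov factorization P(r, w) = P(r) * P(w | r); the graph dictates this form
    regardless of how the numbers plugged into it were estimated."""
    pr = p_rain if r else 1 - p_rain
    pw = cpt[r] if w else 1 - cpt[r]
    return pr * pw

p_rain = sum(r for r, _ in data) / len(data)  # estimated by frequency here for brevity
for name, cpt in (("classical", cpt_classical(data)), ("Bayesian", cpt_bayesian(data))):
    total = sum(joint(p_rain, cpt, r, w) for r in (0, 1) for w in (0, 1))
    print(name, {r: round(p, 3) for r, p in cpt.items()}, "sums to", round(total, 6))
```

The two readings give different numbers (for instance 6/7 versus 7/9 for P(wet | rain)), but the causal structure and the way it constrains the joint distribution are identical.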

The work I have done tries to develop answers to causal questions, to questions of variable definition (i.e., what does it take to have a scientific quantity of interest?), and to questions about the possibility of describing a system at multiple levels of analysis (e.g. questions of supervenience). Such issues most certainly arise in the context of consciousness and free will, or mental causation in general, and of course in ethics. For example: Suppose that in an automated decision procedure for credit approval we find that race is correlated with a failure to approve. Whether or not such an automated procedure for credit approval is considered fair may well depend on whether race was causally relevant to the decision or just correlated with the decision.
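The difference between “correlated with” and “causally relevant to” the decision can be shown with two hypothetical decision rules applied to a hypothetical applicant pool in which income differs by group. Both rules produce a large approval gap between groups; only an intervention on the race input, with income held fixed, separates them. This sketch tests only whether the rule itself responds to race; whether income is in turn downstream of race is a further causal question that would need a model of the applicants.

```python
import random

# Two hypothetical automated credit rules; neither is taken from a real system.
def approve_income_only(race, income):
    return income > 50

def approve_uses_race(race, income):
    # Group "A" needs income above 50; everyone else needs income above 70.
    return (race == "A" and income > 50) or income > 70

rng = random.Random(1)
# Hypothetical population in which income distributions differ by group,
# so race and income (and hence approval) are correlated.
applicants = []
for _ in range(10_000):
    race = rng.choice(["A", "B"])
    income = rng.gauss(60 if race == "A" else 45, 15)
    applicants.append((race, income))

def approval_gap(rule):
    """Observational: approval rate of group A minus that of group B."""
    rate = {}
    for group in ("A", "B"):
        incomes = [inc for r, inc in applicants if r == group]
        rate[group] = sum(rule(group, inc) for inc in incomes) / len(incomes)
    return rate["A"] - rate["B"]

def flip_rate(rule):
    """Interventional: share of applicants whose decision changes when only the
    race input is switched and income is held fixed."""
    other = {"A": "B", "B": "A"}
    flips = sum(rule(r, inc) != rule(other[r], inc) for r, inc in applicants)
    return flips / len(applicants)

for rule in (approve_income_only, approve_uses_race):
    print(rule.__name__, round(approval_gap(rule), 2), round(flip_rate(rule), 2))
```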

I think philosophy has a role in turning these hard, amorphous, fundamental questions into ones that can be subject, at least in part, or under a set of assumptions, to scientific investigation. There is no trick to be missed, but there is a substantive scientific and theoretical literature that has to be engaged.

3:AM: And for the readers here at 3:AM, are there five books you can recommend that will take us further into your philosophical world?

FE: Books are an outdated form of passing along and developing new ideas. They are, generally, not easily searchable, and access to them is (again, generally) even more restricted than access to journal papers. Unfortunately, parts of philosophy and many other disciplines in the humanities still think books are a good standard of academic excellence relevant to promotion and tenure. Please, at the very least, put all your manuscripts (book or paper) online in a searchable format on an open-access archive. I think those who are tenured have a particular obligation to do so. As for your request, start with Christopher Hitchcock’s new/revised entry in the SEP on probabilistic causal models for some background. Here, then, are five papers that have deeply influenced my thinking:

Janzing & Schoelkopf: Causal Inference and the Algorithmic Markov Condition

If there is such a thing as a major philosophical contribution published in computer science, this is certainly a contender, and you have probably never heard of it. While parts of this paper require a fair bit of mathematical sophistication, the central ideas can easily be understood from the rest of it. Janzing and Schoelkopf attempt to offer an account of the Markov condition, which forms a central part of the causal Bayes net framework, in terms of Kolmogorov complexity. This is a move away from a probabilistic account of causality to a more fundamental representation, in which causal claims at the individual level and at the population level are merely two special cases of the same framework.
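Kolmogorov complexity is uncomputable, so any hands-on illustration needs a stand-in. The sketch below uses zlib-compressed length as a crude proxy (an assumption of this example, not a method from the paper) to estimate algorithmic mutual information I(x:y) = K(x) + K(y) − K(x, y) between individual strings, with no probability distribution anywhere. Two strings that copy a shared “common cause” w are algorithmically dependent; conditioning on w, approximated through K(x | w) ≈ K(w, x) − K(w), makes the dependence nearly vanish, echoing the screening-off the Markov condition requires.

```python
import random
import zlib

def c(data: bytes) -> int:
    """Compressed length in bytes: a crude, computable stand-in for Kolmogorov complexity K."""
    return len(zlib.compress(data, 9))

def approx_mutual_info(x: bytes, y: bytes) -> int:
    """Proxy for algorithmic mutual information I(x:y) = K(x) + K(y) - K(x, y)."""
    return c(x) + c(y) - c(x + y)

def approx_cond_mutual_info(x: bytes, y: bytes, w: bytes) -> int:
    """Proxy for I(x:y | w), using K(x | w) ~ K(w, x) - K(w)."""
    return c(w + x) + c(w + y) - c(w + x + y) - c(w)

rng = random.Random(42)

def random_bytes(n: int) -> bytes:
    return bytes(rng.getrandbits(8) for _ in range(n))

# Hypothetical individual objects: x and y both copy a shared "common cause" w.
w = random_bytes(2000)
x = w + random_bytes(500)
y = w + random_bytes(500)
z = random_bytes(2500)  # unrelated to x

print("I(x:y)   ~", approx_mutual_info(x, y))
print("I(x:z)   ~", approx_mutual_info(x, z))
print("I(x:y|w) ~", approx_cond_mutual_info(x, y, w))
```

Compressor overhead means the conditional value comes out small but not exactly zero; the contrast with the unconditional value is what matters.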

Ben Jantzen: Projection, Symmetry and Natural Kinds

One response to Nelson Goodman’s new riddle of induction is that one can do induction over the predicates describing natural kinds, since natural kinds exhibit the appropriate “stability” for induction that avoids the annoying grue-like flips. Well, where do I get my kinds from then? A downpour of philosophical papers about the metaphysics of kinds ensues, but everything evaporates the moment one asks which of these accounts would be scientifically useful (in the sense of this term that I have used above). Jantzen’s ideas and concrete proposals are the only ones I am aware of that provide an account that can be applied to the discovery of kinds in a scientific domain. Unfortunately, the paper has a lengthy discussion of prior accounts of natural kinds (presumably demanded by the reviewers), which cuts short the full presentation of the new proposal. But you get the idea, and then you can read Jantzen’s other papers on the topic.

Clark Glymour: Markov Properties and Quantum Experiments

Since the philosophers of physics among you presumably checked out the first time I mentioned causality, I can celebrate rather than excuse the simplicity of presentation of the Einstein-Podolsky-Rosen experiment in this paper. Not having had much background in quantum mechanics, I found it to be an extremely useful illustration of what is at stake in trying to resolve the conflicts between the causal Markov condition, locality and entanglement. It gives no answers, but it describes the problem clearly. Since this paper appeared, there have been a couple of others in a similar vein, e.g. by Tyler VanderWeele and by Mark Alford.
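The conflict can be made concrete with the textbook CHSH form of Bell’s argument (a standard illustration, not taken from Glymour’s paper). If a common cause screens off the two outcomes and each outcome depends only on its local setting, every model is a mixture of deterministic assignments, and the CHSH quantity S can never exceed 2 in absolute value. The standard quantum prediction for a spin-1/2 singlet, assumed here as E(a, b) = −cos(a − b), reaches 2√2 at suitable angles.

```python
import itertools
import math

def quantum_correlation(a, b):
    """Standard quantum prediction for a spin-1/2 singlet: expected product of the
    +/-1 outcomes when the two analyzers are set at angles a and b (radians)."""
    return -math.cos(a - b)

def chsh(E, a1, a2, b1, b2):
    """CHSH combination S = E(a1,b1) - E(a1,b2) + E(a2,b1) + E(a2,b2)."""
    return E(a1, b1) - E(a1, b2) + E(a2, b1) + E(a2, b2)

def max_local_chsh():
    """Largest |S| over deterministic local common-cause models: the shared cause fixes
    each side's outcome for each of its own settings, independent of the distant setting.
    Randomizing over the common cause only averages these values, so the bound carries over."""
    best = 0
    for A1, A2, B1, B2 in itertools.product((-1, 1), repeat=4):
        best = max(best, abs(A1 * B1 - A1 * B2 + A2 * B1 + A2 * B2))
    return best

s_quantum = chsh(quantum_correlation, 0, math.pi / 2, math.pi / 4, 3 * math.pi / 4)
print(f"quantum |S| = {abs(s_quantum):.4f}, local common-cause bound = {max_local_chsh()}")
```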

Peter Spirtes: Variable Definition and Causal Inference

Unfortunately, this paper was presented at the Congress for Logic, Methodology and Philosophy of Science in Beijing in 2007 and then disappeared (but I found a link!). In an extremely simple scenario, Spirtes considers how we specify causal variables and what the effects of mis-specifying or translating them are. The paper had a profound effect on getting me to think about where our causal quantities come from and how we know that we have the correct ones, if there is a sense of “correct ones” to be had at all.
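A toy version of the worry (our own hypothetical example, not the scenario from Spirtes’ paper): take a linear system in which X causes Y, and re-describe the very same system with the translated variables A = X and B = Y − 2X. In the new description A and B are uncorrelated, so a search over (A, B) would find no edge at all, even though nothing about the system changed, only the choice of variables.

```python
import random

def pearson(u, v):
    """Sample Pearson correlation, written out to stay dependency-free."""
    n = len(u)
    mu, mv = sum(u) / n, sum(v) / n
    cov = sum((p - mu) * (q - mv) for p, q in zip(u, v))
    su = sum((p - mu) ** 2 for p in u) ** 0.5
    sv = sum((q - mv) ** 2 for q in v) ** 0.5
    return cov / (su * sv)

rng = random.Random(7)
n = 50_000
# Hypothetical linear system in which X causes Y.
x = [rng.gauss(0, 1) for _ in range(n)]
y = [2 * xi + rng.gauss(0, 1) for xi in x]

# The same system, re-described ("translated") with A = X and B = Y - 2X.
a = x
b = [yi - 2 * xi for xi, yi in zip(x, y)]

print("corr(X, Y) =", round(pearson(x, y), 3))
print("corr(A, B) =", round(pearson(a, b), 3))
```

It also leaves open what “intervening on A” would even mean once B is defined partly in terms of X, which is the kind of question about where causal variables come from that the paragraph describes.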

Cosma Shalizi & Cristopher Moore: What is a Macrostate? Subjective Observations and Objective Dynamics

I may be wrong, but I thought I had heard that this paper had been submitted to a respectable philosophy journal and that it had come back with a rejection and incomprehensible reviews. So much for the respectability of that philosophy journal. Shalizi & Moore develop a theoretical account of macro variables that gave me a basis to think about causal variables. They illustrate their account with a variety of useful examples of when and how (and when not, and why not) a particular quantity might be a well-defined macro variable.
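One simple, standard criterion for when grouping micro-states yields a well-defined macro variable is strong lumpability of a Markov chain: the grouping works if every micro-state in a block has the same probability of jumping into each block, so the blocks evolve as a Markov chain of their own. This is a stand-in chosen for illustration, not Shalizi and Moore’s own construction, and the transition matrix below is hypothetical.

```python
from fractions import Fraction as F

# Hypothetical 4-state micro-level Markov chain; row i is P(next state | current state i).
T = [
    [F(1, 2), F(1, 4), F(1, 4), F(0)],
    [F(1, 4), F(1, 2), F(0), F(1, 4)],
    [F(1, 8), F(1, 8), F(1, 2), F(1, 4)],
    [F(1, 8), F(1, 8), F(1, 4), F(1, 2)],
]

def block_masses(T, i, partition):
    """Probability of moving from micro-state i into each block of the partition."""
    return tuple(sum(T[i][j] for j in block) for block in partition)

def is_lumpable(T, partition):
    """Strong lumpability: all micro-states in a block agree on the probability of
    moving into every block, so the blocks form a Markov chain of their own."""
    return all(
        len({block_masses(T, i, partition) for i in block}) == 1
        for block in partition
    )

def macro_chain(T, partition):
    """Block-to-block transition matrix, meaningful only when the partition is lumpable."""
    return [list(block_masses(T, block[0], partition)) for block in partition]

print(is_lumpable(T, [[0, 1], [2, 3]]), macro_chain(T, [[0, 1], [2, 3]]))
print(is_lumpable(T, [[0, 2], [1, 3]]))
```

Grouping {0, 1} and {2, 3} gives a macro variable with its own clean dynamics; grouping {0, 2} and {1, 3} does not, because micro-states 0 and 2 disagree about where the system goes next.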

ABOUT THE INTERVIEWER

Richard Marshall is biding his time.
